Build and deliver a rigorous feedback collection system in weeks, not years. Learn step-by-step guidelines, tools, and real-world examples—plus how Sopact Sense makes the whole process AI-ready.

Data teams spend the bulk of their day reconciling siloed data, fixing typos, and removing duplicates instead of generating insights.


Coordinating design, data entry, and stakeholder input across departments is hard, which leads to inefficiencies and silos.

Open-ended feedback, documents, images, and video sit unused—impossible to analyze at scale.

Feedback collection used to mean handing out surveys and waiting. You’d gather responses, copy them into a spreadsheet, and eventually produce a report that felt outdated the moment it was finished.

Today, that process can’t keep up. Teams need to learn in real time. Stakeholders expect their voices to matter right away.

Modern feedback collection is no longer about forms; it’s about flow—clean, continuous streams of input that stay connected, analyzed, and ready for action.

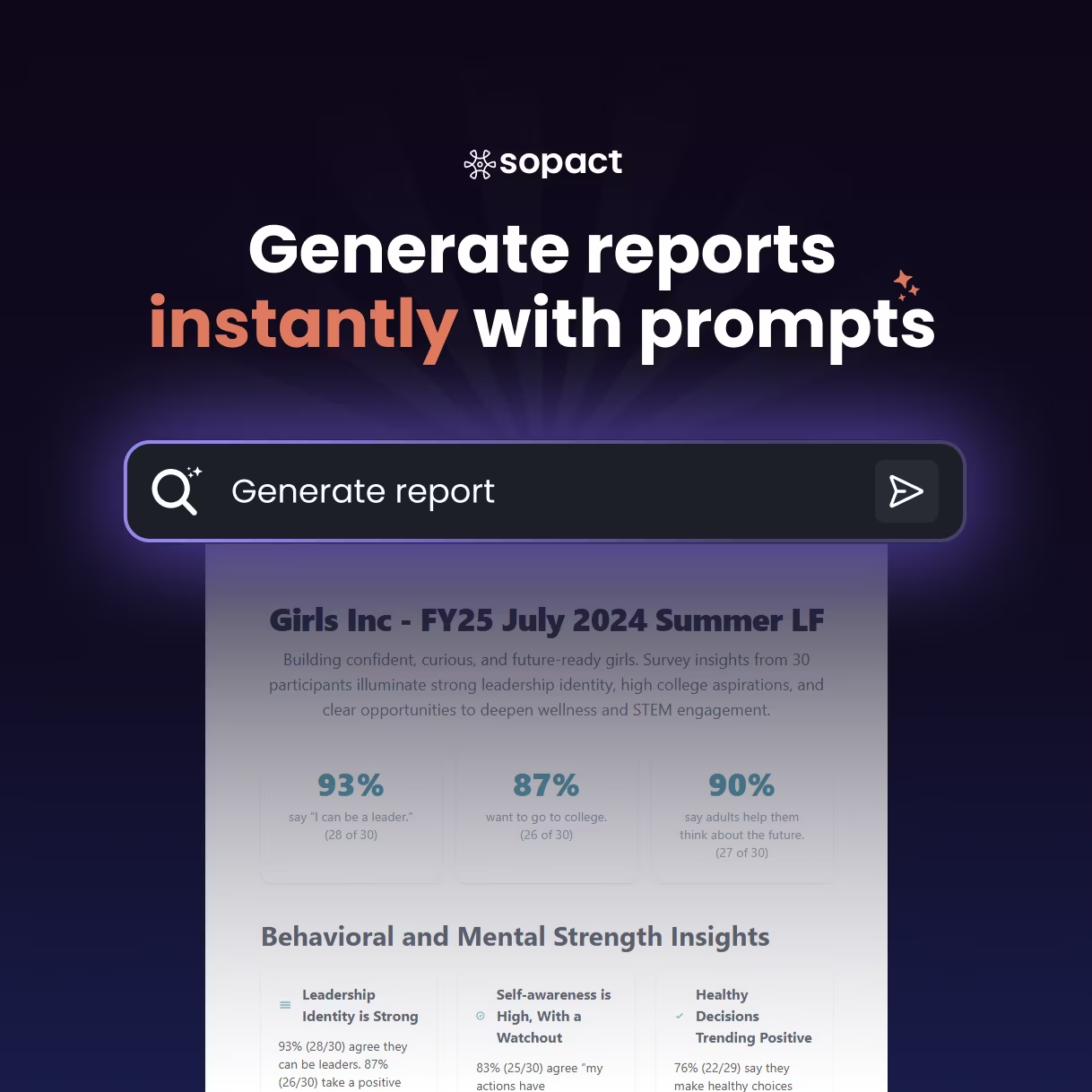

When organizations centralize their data and make it “AI-ready,” feedback stops being a task and becomes a source of learning.

This article explains what AI-ready feedback collection really means, why fragmentation kills insight, and how clean, centralized systems—like Sopact’s integrated approach—help teams move from data chaos to clarity.

Every organization collects feedback, but few manage to use it effectively. The reason isn’t lack of effort; it’s the tools.

Typical setups rely on:

Each tool works in isolation. Together, they create confusion.

According to industry research, more than 80 percent of organizations experience data fragmentation when juggling multiple feedback tools. Analysts spend up to 80 percent of their time cleaning and merging data before they can even start learning from it.

This delay matters. By the time the results are ready, the people who gave feedback have moved on—and the opportunity to adapt is lost.

Traditional feedback collection fails because it was designed for reporting, not learning.

Centralization isn’t just putting all your data in one folder. It’s building a single, clean pipeline where every response, document, and comment connects to the right stakeholder automatically.
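To make the idea concrete, here is a minimal Python sketch of such a pipeline, assuming every participant already has a unique stakeholder ID. The `FeedbackStore` and `StakeholderRecord` names are hypothetical and are not Sopact's API. The point is only that scores, comments, and documents are all filed under the same ID as they arrive, so nothing has to be merged later.

```python
from dataclasses import dataclass, field


@dataclass
class StakeholderRecord:
    """Everything collected from one stakeholder, kept together."""
    stakeholder_id: str
    scores: dict[str, float] = field(default_factory=dict)
    comments: list[str] = field(default_factory=list)
    documents: list[str] = field(default_factory=list)


class FeedbackStore:
    """Hypothetical central store: every input is filed under a stakeholder ID."""

    def __init__(self) -> None:
        self._records: dict[str, StakeholderRecord] = {}

    def _record(self, stakeholder_id: str) -> StakeholderRecord:
        # Create the record on first contact and reuse it for every later input,
        # so surveys, comments, and documents never end up in separate silos.
        if stakeholder_id not in self._records:
            self._records[stakeholder_id] = StakeholderRecord(stakeholder_id)
        return self._records[stakeholder_id]

    def add_score(self, stakeholder_id: str, metric: str, value: float) -> None:
        self._record(stakeholder_id).scores[metric] = value

    def add_comment(self, stakeholder_id: str, text: str) -> None:
        self._record(stakeholder_id).comments.append(text)

    def add_document(self, stakeholder_id: str, filename: str) -> None:
        self._record(stakeholder_id).documents.append(filename)

    def profile(self, stakeholder_id: str) -> StakeholderRecord:
        """Scores and stories side by side for one person."""
        return self._records[stakeholder_id]


# Inputs arriving from different channels all land on the same record.
store = FeedbackStore()
store.add_score("P-001", "satisfaction", 4.0)
store.add_comment("P-001", "Flexible scheduling is why I stayed.")
store.add_document("P-001", "intake_form.pdf")
print(store.profile("P-001"))
```

Keying everything on one ID is the design choice that matters here: once a response carries the right ID at entry, "one version of the truth" is simply a lookup rather than a reconciliation project.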

In a centralized system:

The effect is simple but powerful: one version of the truth.

Instead of comparing five spreadsheets or reconciling columns, teams can view the complete story—scores and stories, side by side. Leaders can spot trends instantly, and frontline staff can respond faster because they trust the data.

Sopact’s model builds this workflow directly into its platform, but the principle applies anywhere: clean data at the source + continuous collection = smarter decisions.

Artificial intelligence isn’t magic. It’s pattern recognition. But patterns only make sense when the data feeding them is organized and complete.

An AI-ready feedback system means:

Once data meets these conditions, AI can help surface insights quickly, highlighting common themes, sentiment shifts, and associations between metrics and experiences.

For example, imagine collecting hundreds of participant reflections after a training program. Instead of reading them manually, AI can summarize recurring ideas (“peer support,” “confidence growth”) and connect those patterns with performance scores.

This isn’t replacing human evaluation; it’s amplifying human learning. The team still interprets meaning and decides action, but AI reduces the time between listening and understanding from months to minutes.
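The training-reflection example can be sketched in a few lines of Python. This is a deliberately simple stand-in, not how an AI model works: it tags themes by keyword matching against a hypothetical `THEMES` dictionary, then counts how many reflections mention each theme and averages the performance scores of those participants.

```python
from statistics import mean

# Hypothetical theme dictionary. Simple keyword matching stands in for the
# AI summarization described above; a real model would find themes itself.
THEMES = {
    "peer support": ["peer", "study group", "classmates"],
    "confidence growth": ["confident", "confidence"],
}


def tag_themes(text: str) -> set[str]:
    """Return every theme whose keywords appear in the text (case-insensitive)."""
    lowered = text.lower()
    return {
        theme
        for theme, keywords in THEMES.items()
        if any(keyword in lowered for keyword in keywords)
    }


def theme_summary(responses):
    """For each theme: how many reflections mention it, and the average
    performance score of those participants (None if nobody mentions it).

    responses: list of (reflection_text, performance_score) pairs.
    """
    scores_by_theme: dict[str, list[float]] = {theme: [] for theme in THEMES}
    for text, score in responses:
        for theme in tag_themes(text):
            scores_by_theme[theme].append(score)
    return {
        theme: (len(scores), mean(scores) if scores else None)
        for theme, scores in scores_by_theme.items()
    }


reflections = [
    ("My study group kept me going.", 88),
    ("I feel more confident presenting now.", 91),
    ("Peer feedback gave me real confidence.", 95),
    ("The schedule was hard to manage.", 70),
]
for theme, (count, avg) in theme_summary(reflections).items():
    print(f"{theme}: {count} mentions, average score {avg}")
```

An average like this shows an association between a theme and scores, not a cause. Deciding what it means, and what to change, is still the team's job.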

Numbers show progress; narratives show purpose.

Separating them weakens both.

When quantitative scores and qualitative stories live together, each explains the other.

Example 1 — Workforce Training:

Completion rate = 92 percent, but interviews reveal that flexible scheduling—not course content—drove retention.

Example 2 — Employee Engagement:

Satisfaction score = 4.3 / 5, yet open-ended responses highlight poor communication between departments.

Example 3 — Scholarship Program:

High academic outcomes, but essays mention emotional burnout.

By combining metrics and stories in one continuous system, teams see the whole picture and act with empathy, not just efficiency.

In disconnected setups, every new feedback cycle feels like starting over. Teams chase missing files, merge different survey versions, and lose momentum.

A centralized feedback collection system eliminates that reset.

All responses—quantitative or qualitative—feed directly into one clean structure.

The benefits are immediate:

Centralized feedback collection transforms feedback from a reactive process into an active management tool.

Sopact’s methodology—though powered by technology—is rooted in these same habits. It unifies inputs from any format, validates them automatically, and makes data ready for analysis at the moment of entry.

This is where “AI-ready” begins: data so clean and connected that systems can learn continuously without human rework.

An AI-ready feedback system is simply one where every new response teaches the organization something new—immediately.

Here’s what that looks like in practice:

Because the data is structured and unified, AI can detect subtle patterns—who is improving fastest, what barriers persist, where sentiment shifts.

Teams no longer wait for analysts to compile results. They can discuss findings in the same week, make small changes, and see new outcomes by the next cycle.

This is continuous learning in action.

The gap between legacy feedback tools and modern, AI-ready systems is widening fast.

Traditional tools were built for snapshots — surveys that capture opinions once or twice a year. Centralized systems are built for movement — learning that happens daily as new data flows in.

Centralization does more than speed things up.

It builds trust—everyone sees the same data, and everyone can trace how decisions are made. When information moves in one clean pipeline, accountability follows naturally.

Continuous learning isn’t a project—it’s a practice.

Organizations that treat feedback as an ongoing loop unlock three distinct advantages:

In centralized systems, each feedback cycle strengthens the next. Every new response feeds analysis immediately; every insight triggers the next question.

This rhythm turns data collection into a living conversation—between participants, staff, and leadership—where improvement never stops.

“AI-ready” doesn’t mean machines taking over decision-making. It means humans finally having the bandwidth to do their best thinking.

By automating tedious steps—tagging themes, summarizing essays, merging duplicates—AI frees teams to focus on interpretation and empathy.

That human context remains essential. Algorithms can spot that “flexible scheduling” appears in many comments; only humans can decide what flexibility means for a specific community.

The best systems combine machine efficiency with human judgment, creating a workflow that’s fast and thoughtful.

No insight is better than the data beneath it.

If intake forms allow typos, duplicates, or missing context, AI will only amplify the noise.

That’s why clean-at-source design—validated fields, unique IDs, and clear workflows—is the cornerstone of reliable feedback collection.
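Here is what clean-at-source validation might look like as a minimal Python sketch. The ID format, the 1-5 satisfaction scale, the one-submission-per-stakeholder rule, and the `IntakeValidator` class are all assumptions for illustration. Each response is checked when it arrives, and a bad record is rejected with a reason rather than stored and fixed later.

```python
import re
from dataclasses import dataclass

# Hypothetical ID format ("P-" plus three digits) used only for this sketch.
ID_PATTERN = re.compile(r"P-\d{3}")


@dataclass(frozen=True)
class Response:
    stakeholder_id: str
    satisfaction: int  # expected on a 1-5 scale
    comment: str


class IntakeValidator:
    """Checks each response at entry instead of cleaning it later.

    Assumes each stakeholder submits this form once, so a repeated ID
    is treated as a duplicate.
    """

    def __init__(self) -> None:
        self._seen_ids: set[str] = set()

    def validate(self, response: Response) -> list[str]:
        """Return a list of problems; an empty list means the record is accepted."""
        errors = []
        # Normalize case and stray whitespace so "p-001 " and "P-001" match.
        sid = response.stakeholder_id.strip().upper()
        if not ID_PATTERN.fullmatch(sid):
            errors.append("stakeholder_id has the wrong format")
        elif sid in self._seen_ids:
            errors.append("duplicate stakeholder_id")
        if not 1 <= response.satisfaction <= 5:
            errors.append("satisfaction must be between 1 and 5")
        if not response.comment.strip():
            errors.append("comment is empty")
        if not errors:
            self._seen_ids.add(sid)  # only clean records reserve their ID
        return errors


validator = IntakeValidator()
print(validator.validate(Response("P-001", 4, "Great mentors")))     # → []
print(validator.validate(Response("p-001 ", 5, "Submitting again")))  # → ['duplicate stakeholder_id']
print(validator.validate(Response("P-002", 7, "")))
```

Rejecting at entry, with a specific message, lets the person submitting fix the problem while they still have the context, which is far cheaper than an analyst guessing at it months later.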

When data is trustworthy from the start, every downstream step improves: reports generate faster, comparisons stay accurate, and teams spend time on action instead of correction.

Clean data is quiet power—it keeps learning honest.

The impact compounds. What once required months of coordination now unfolds as a steady rhythm of learning.

The future of feedback collection isn’t about gathering more opinions—it’s about organizing them better.

When data is centralized, clean, and AI-ready, organizations don’t just measure—they evolve.

They move from reactive surveys to proactive learning. They turn fragmented voices into shared understanding.

The next decade will belong to organizations that treat feedback as a continuous ecosystem rather than an isolated event.

Centralized feedback systems, like those built on Sopact’s clean-at-source philosophy, already show what’s possible:

Feedback collection has finally caught up with the pace of change.

Clean data is the foundation, AI is the accelerator, and continuous learning is the destination.

When your feedback is centralized and AI-ready, improvement is no longer a guessing game—it’s the natural next step.