
AI-driven award management software cuts review time by 75% for scholarships, grants, and competitions.

Author: Unmesh Sheth | Last Updated: February 2026

Award Programs Deserve Better Than Glorified Form Builders

Transform award programs from paper-pushing exercises into evidence-backed impact engines—where clean data, AI-assisted evaluation, and lifecycle tracking turn months-long cycles into days.

Award platforms still treat selection day as the finish line. But reviewers drown in 20-page PDFs, bias hides in inconsistent rubrics, and evidence vanishes after the ceremony—leaving boards asking "what changed?"

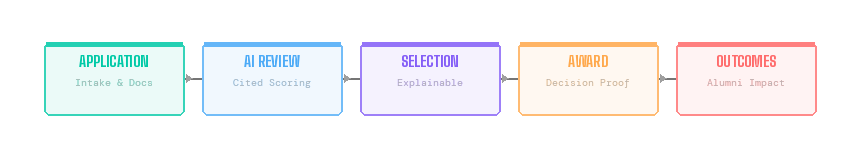

The full journey at a glance: APPLICATION → AI REVIEW → SELECTION → AWARD → OUTCOMES, all connected by one unique ID.

Award management software was designed to solve inbox chaos—routing forms, assigning reviewers, collecting scores. That worked when the bar was "process 5,000 applications without breaking email." Today's standard is higher: explainable decisions, auditable outcomes, and proof that resources drove change.

The problem starts at collection. Organizations pull data from three sources — documents (pitch decks, essays, references), interviews (founder calls, panel notes), and surveys (reviewer rubrics, stakeholder feedback) — but none of it connects. Different spreadsheets, different formats, no shared ID. By the time review starts, 80% of the work is cleanup, not judgment.

Clean-at-source data collection changes the math. Unique IDs assigned at intake. De-duplication on entry. Every document, interview transcript, and survey response linked to one record from day one. No reconciliation. No version confusion. No cleanup tax.

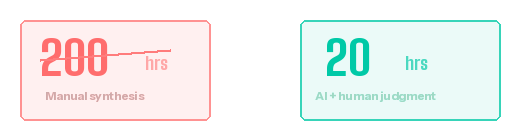

Then AI does what reviewers can't scale: reads applications, transcripts, and references like an experienced evaluator — extracting themes, proposing rubric-aligned scores, and citing exact passages. Uncertainty gets routed to human judgment. Obvious cases advance with full citations. The result?

200 hours of manual synthesis compressed into 20 hours of decision-making. Not by cutting corners — by routing the right work to the right layer. AI handles extraction and scoring. Humans handle edge cases and calibration. Every decision ships with sentence-level proof that survives board scrutiny.

This isn't about replacing human judgment. It's about building a system where selection criteria reference page 7, paragraph 3 of an essay — where scoring patterns flag geographic bias in real time — and where three-year alumni outcomes link back to intake narratives. Award programs become continuous learning engines, not annual ceremonies.

Award management software is a platform that centralizes applications, evaluation workflows, judging, scoring, and decisions for scholarships, grants, competitions, fellowships, and recognition programs — automating the complete lifecycle from nomination through post-award outcome tracking.

Unlike generic form tools (Google Forms, SurveyMonkey) that stop at data collection, or project management tools repurposed for awards administration, dedicated award management software handles what happens after someone submits an entry: routing applications to qualified judges, applying consistent scoring rubrics at scale, analyzing qualitative narratives alongside quantitative criteria, and generating decision-ready reports for committees and boards.

The best awards management systems today are AI-native — meaning artificial intelligence isn't a premium add-on but woven into every step of the evaluation process. This matters because the highest-value part of any award application — the essay, the project narrative, the impact statement — has historically been the hardest to evaluate consistently at scale.

Award management software serves any organization that selects recipients based on competitive applications where fairness, consistency, and evidence matter.

Scholarship committees at foundations and universities managing 500+ applications across financial need, academic merit, leadership, and essay quality — needing structured evaluation frameworks that maintain consistency across large reviewer panels.

Grant review panels at foundations and government agencies processing multi-page proposals, matching them to reviewers with relevant expertise, and reporting on funding decisions with evidence that survives audit.

Innovation and recognition award programs handling entries for industry awards, employee recognition, community impact awards, and innovation challenges — where judging criteria span qualitative narratives and quantitative evidence.

Fellowship selection committees evaluating researchers, artists, or community leaders through multi-stage processes combining written applications, work samples, reference letters, and interviews.

CSR and corporate giving teams running employee scholarships, community grants, volunteer awards, and social innovation competitions — needing a single awards management platform instead of separate tools per program.

Most organizations manage awards with a patchwork of tools — perhaps a dedicated awards management system like Evalato, Award Force, or OpenWater for intake and judging, combined with spreadsheets for scoring consolidation and email for judge coordination. These platforms handle the logistics well. They leave three critical challenges unaddressed.

Applications arrive in one system. Supporting documents get uploaded to a shared drive. Judge scores live in spreadsheets. Post-award compliance reports come through email. When the board asks "how did last year's recipients perform?", nobody can connect selection evidence to outcome data without weeks of manual reconciliation.

Organizations report spending 40+ hours per award cycle just reconciling data — before any evaluation happens. Every handoff between tools introduces errors. Every export loses context. Five years of award data, zero institutional learning about which selection criteria actually predicted success.

The fragmentation compounds for organizations running multiple award programs. Each program creates its own data silo. The same applicant across your scholarship and your innovation award exists as two separate people in two separate databases. Without persistent participant identities, you can't build the longitudinal evidence that transforms award programs from annual ceremonies into continuous learning engines.

Three judges score the same application: 8.5, 6.0, 9.5. What accounts for the 3.5-point spread? Different interpretations of "innovation potential." Different energy levels — morning judges score differently than afternoon judges. Different expectations that drift over time: week one scores average 7.2, week three scores average 5.8, for identical quality.
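Both symptoms in that paragraph are easy to measure once every score lives in one table. The following is a minimal sketch. It assumes each score is stored with the week it was given. The function names, the record format, and the sample scores are hypothetical; the samples were chosen only to reproduce the paragraph's figures.

```python
from collections import defaultdict
from statistics import mean

def score_spread(scores):
    """Largest minus smallest score that different judges gave one application."""
    return max(scores) - min(scores)

def weekly_averages(records):
    """Average score per review week, to expose drift across a cycle.

    records: iterable of (week, score) pairs (hypothetical format).
    """
    by_week = defaultdict(list)
    for week, score in records:
        by_week[week].append(score)
    return {week: round(mean(vals), 2) for week, vals in sorted(by_week.items())}

# The three judges from the example above
print(score_spread([8.5, 6.0, 9.5]))  # 3.5

# Hypothetical scores for applications of comparable quality
records = [(1, 7.0), (1, 7.4), (3, 5.6), (3, 6.0)]
print(weekly_averages(records))  # {1: 7.2, 3: 5.8}
```

Running this check during the cycle is what makes drift visible before decisions are final. Run after the cycle, it can only confirm that the drift happened.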

Traditional awards management platforms can't detect this drift. By the time you discover scoring inconsistency, decisions are finalized and bias is baked in. For programs making equity-sensitive decisions — scholarship selections, community grants, diversity awards — this isn't a minor process issue. It's a structural failure in fairness that no amount of annual judge training can fix.

Most award management software treats the selection decision as the end of the process. Forms collected, judges scored, winners announced — done. But the most important question — "did our selections actually drive the outcomes we intended?" — goes permanently unanswered.

Award programs that can't connect intake evidence to post-award results produce vague impact claims: "We funded 200 scholars" without knowing graduation rates. "We recognized 50 innovators" without tracking whether innovations scaled. "We awarded 30 community grants" without measuring community-level change.

This isn't just a reporting problem. Without outcome data feeding back into selection criteria, every award cycle starts from scratch with the same rubrics, the same questions, and the same guesswork about what actually matters. Organizations accumulate years of application data but zero evidence about which rubric dimensions predict real-world success.

Sopact Sense approaches award management as a continuous intelligence system — not a form builder with judging features bolted on. The platform integrates three capabilities that traditional award management systems treat as separate problems: clean data capture, AI-powered evaluation with citations, and lifecycle outcome tracking.

Every data quality problem in awards management traces back to a single architectural failure: applicants don't have persistent identities in the system.

Sopact Sense solves this at the architecture level. Contacts create unique IDs for every applicant — like a lightweight CRM built into the award management platform. Every form submission, document upload, judge score, and communication links back to that single identity.

When Maria applies for your scholarship and your innovation award, the system recognizes her automatically. Her demographic data, academic records, and application materials flow across programs without re-entry. If she needs to correct an error or upload a missing document, she receives a unique link that updates her existing record — no duplicate entries, no data reconciliation.
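A persistent identity like this can be sketched as a registry keyed on a normalized identifier. This sketch is hypothetical throughout. It assumes email is the matching key, and the class, method names, and link format are invented for illustration. It does not describe how Sopact Sense Contacts work internally.

```python
import secrets
import uuid

class ContactRegistry:
    """Hypothetical registry that gives each applicant one persistent ID."""

    def __init__(self):
        self._ids_by_email = {}   # normalized email -> contact ID
        self.records = {}         # contact ID -> {program: submission dict}
        self._update_tokens = {}  # update-link token -> contact ID

    @staticmethod
    def _normalize(email):
        return email.strip().lower()

    def register(self, email, program, submission):
        """Return the existing ID for a known applicant, or create a new one."""
        key = self._normalize(email)
        contact_id = self._ids_by_email.get(key)
        if contact_id is None:
            contact_id = str(uuid.uuid4())
            self._ids_by_email[key] = contact_id
            self.records[contact_id] = {}
        self.records[contact_id][program] = submission
        return contact_id

    def update_link(self, contact_id, base_url="https://example.org/update"):
        """Issue a unique link that edits the existing record instead of creating a new one."""
        token = secrets.token_urlsafe(16)
        self._update_tokens[token] = contact_id
        return f"{base_url}?token={token}"

    def apply_update(self, token, program, field, value):
        """Apply a correction submitted through an update link to the same record."""
        contact_id = self._update_tokens[token]
        self.records[contact_id][program][field] = value
        return contact_id

registry = ContactRegistry()
a = registry.register("maria@example.org", "scholarship", {"essay": "draft"})
b = registry.register(" Maria@Example.org ", "innovation_award", {"pitch": "deck.pdf"})
print(a == b, len(registry.records))  # True 1
```

Matching on email alone is a simplification. A production system would need a deliberate policy for applicants who change addresses or share one.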

This architecture eliminates the 40+ hours organizations typically spend on data cleanup per award cycle. It also enables what traditional award software can't: lifecycle tracking. Contact IDs persist across years, so you can correlate application data with outcomes — which rubric dimensions actually predicted success? — and improve your selection criteria with evidence rather than intuition.

This is where Sopact Sense fundamentally differs from every other award management platform on the market.

The Intelligent Suite — four AI analysis layers working together — transforms how organizations evaluate award applications:

Intelligent Cell reads applications like an experienced reviewer — not just extracting keywords but understanding document structure, tables, narrative flow, and evidence density. Upload a 20-page grant proposal, a scholarship essay, a project narrative, or reference letters, and Intelligent Cell extracts rubric-aligned themes with sentence-level citations. Every proposed score links back to the exact paragraph that supports it.
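One way to make "every proposed score links back to the exact paragraph" checkable is to store the quoted evidence with each score and resolve it to a position in the source text. The following is a minimal sketch. It assumes plain text with blank-line paragraph breaks and uses naive sentence splitting on end punctuation. The class and function names are hypothetical, and this is not how Intelligent Cell is implemented.

```python
import re
from dataclasses import dataclass

@dataclass
class Citation:
    paragraph: int  # 1-based paragraph number
    sentence: int   # 1-based sentence number within that paragraph
    text: str

def locate_quote(document, quote):
    """Return the Citation whose sentence contains quote, or None if the quote is absent."""
    paragraphs = [p.strip() for p in document.split("\n\n") if p.strip()]
    for p_idx, para in enumerate(paragraphs, start=1):
        # Naive split: break after ., ! or ? followed by whitespace
        sentences = re.split(r"(?<=[.!?])\s+", para)
        for s_idx, sentence in enumerate(sentences, start=1):
            if quote in sentence:
                return Citation(p_idx, s_idx, sentence)
    return None

essay = (
    "I grew up in a farming town.\n\n"
    "Last year I started a tutoring club. It now serves 40 students every week."
)
print(locate_quote(essay, "serves 40 students"))
# Citation(paragraph=2, sentence=2, text='It now serves 40 students every week.')
print(locate_quote(essay, "led a national team"))  # None
```

A proposed score whose supporting quote resolves to `None` has no verifiable support in the document. That makes it a natural candidate for routing to a human reviewer.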

This is where the time savings become dramatic. A judge who reads 500 applications at 15 minutes each spends 125 hours. With Intelligent Cell pre-scoring every application and extracting key evidence with citations, judges verify the AI analysis in 5 minutes instead of reading from scratch. That brings the same 500 applications down to roughly 42 hours: two-thirds less reading time, spent on decisions rather than synthesis.
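Here is a quick check of the per-judge arithmetic in that example. It assumes one judge reads each of the 500 applications once.

```python
def review_hours(applications, minutes_each):
    """Total reviewer time in hours for one full read of every application."""
    return applications * minutes_each / 60

manual = review_hours(500, 15)    # reading each application from scratch
verified = review_hours(500, 5)   # verifying pre-scored AI analysis
print(manual, round(verified, 1))  # 125.0 41.7
print(f"{1 - verified / manual:.0%} less reading time")  # 67% less reading time
```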

Intelligent Row generates complete applicant summaries — concise briefs that combine essay insights, budget analysis, reference letter evidence, and rubric scores into a single decision-ready profile. Judges see comparable evidence instead of wading through 20-page PDFs.

Intelligent Column compares patterns across all applications in a dimension. How does the entire applicant pool score on innovation potential? Where do the strongest candidates cluster by geography or program area? Which rubric criteria produce the widest variance between judges? Column-level analysis reveals patterns invisible in application-by-application review — including scoring disparities by demographic segment.
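The question "which rubric criteria produce the widest variance between judges?" reduces to a per-criterion dispersion statistic. The following is a minimal sketch. It assumes scores are stored as criterion → application → list of judge scores; that layout and the sample values are hypothetical. For each criterion, it averages the population standard deviation of judge scores across applications, then ranks criteria from most to least disputed.

```python
from statistics import mean, pstdev

def judge_disagreement(scores):
    """Mean per-application spread (population std dev) of judge scores, per criterion.

    scores: {criterion: {application_id: [judge scores]}} (hypothetical layout)
    Returns (criterion, spread) pairs sorted from most to least disagreement.
    """
    result = {
        criterion: mean(pstdev(judges) for judges in apps.values())
        for criterion, apps in scores.items()
    }
    return sorted(result.items(), key=lambda item: item[1], reverse=True)

scores = {
    "innovation_potential": {"A1": [8.5, 6.0, 9.5], "A2": [5.0, 8.0, 7.0]},
    "financial_need": {"A1": [7.0, 7.0, 7.5], "A2": [6.0, 6.5, 6.0]},
}
for criterion, spread in judge_disagreement(scores):
    print(f"{criterion}: {spread:.2f}")
```

A criterion that consistently tops this ranking, like the vaguely defined "innovation potential" here, is usually the one whose rubric wording needs concrete anchors.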

Intelligent Grid creates decision-ready reports from the full dataset. Ask in plain English: "Compare the top 30 scholarship applicants across academic merit, financial need, and essay quality with supporting quotes from their narratives." Get a formatted report with charts, evidence, and exportable data in minutes — not the days of manual compilation your team currently spends.

The critical differentiator: every score ships with citations. When a board member asks "why this candidate?", you don't search through files retroactively — you click from the dashboard to the exact sentence that justified the decision. This is governance-grade explainability, not post-hoc storytelling.

Traditional award management treats bias as a training problem. Sopact Sense treats it as a measurement problem that requires continuous calibration, not annual workshops.

Intelligent Row applies identical evaluation rubrics to every application with anchor-based scoring — adjectives like "strong impact" are replaced with banded examples that AI and humans both reference. Define what "exceptional leadership" means once with concrete evidence examples, and AI evaluates all 500 applications against that standard without drift.
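Anchor-based scoring can be represented as plain data. Each rubric dimension gets a weight and a set of score bands, and each band is tied to a concrete descriptor that both reviewers and an AI prompt can reference. The following is a minimal sketch with hypothetical dimensions, weights, and descriptors; the article does not show Sopact Sense's actual rubric format.

```python
# Hypothetical rubric: weights sum to 1.0; bands are (minimum score, descriptor), high to low.
RUBRIC = {
    "leadership": {
        "weight": 0.4,
        "anchors": [
            (9, "Founded or led an initiative with documented, measurable results"),
            (6, "Held a defined leadership role with specific responsibilities"),
            (0, "Participation only; no evidence of leading others"),
        ],
    },
    "essay_quality": {
        "weight": 0.6,
        "anchors": [
            (9, "Clear argument supported by specific, verifiable examples"),
            (6, "Coherent narrative with some concrete detail"),
            (0, "General statements without supporting evidence"),
        ],
    },
}

def anchor_for(dimension, score):
    """Return the descriptor of the highest band whose minimum the score meets."""
    for minimum, descriptor in RUBRIC[dimension]["anchors"]:
        if score >= minimum:
            return descriptor
    raise ValueError("score below every band")

def weighted_total(scores):
    """Combine per-dimension scores (0-10) into one weighted score."""
    return round(sum(RUBRIC[d]["weight"] * s for d, s in scores.items()), 2)

print(anchor_for("leadership", 7))
print(weighted_total({"leadership": 7, "essay_quality": 9}))  # 8.2
```

Because the descriptors are written down once, the same text can be shown to human judges and embedded in an AI scoring prompt. That shared reference is what keeps the two anchored to one standard.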

The system flags outlier scores in real time: "Judge A scored this application 9.5, but AI analysis suggests 7.0 based on evidence density. Recommend calibration." Intelligent Column detects segment-level disparities: "Rural applicants scored 12% lower on average — review for geographic bias before finalizing decisions."
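Both alerts in that paragraph are threshold checks. The sketch below makes several assumptions, all hypothetical: a 2-point gap between a judge's score and the AI-proposed score as the calibration trigger, the field names, and the sample data. It measures a segment's gap as the relative difference between its mean score and the rest of the pool.

```python
from statistics import mean

def calibration_flags(reviews, threshold=2.0):
    """Yield a message for each judge score far from the AI-proposed score.

    reviews: list of dicts with "app", "judge", "judge_score", "ai_score" (hypothetical fields).
    """
    for r in reviews:
        gap = r["judge_score"] - r["ai_score"]
        if abs(gap) >= threshold:
            yield (f"{r['judge']} scored {r['app']} {r['judge_score']}, "
                   f"AI suggests {r['ai_score']}. Recommend calibration.")

def segment_gap(applicants, segment):
    """Relative difference between a segment's mean score and everyone else's."""
    inside = [a["score"] for a in applicants if a["segment"] == segment]
    outside = [a["score"] for a in applicants if a["segment"] != segment]
    return mean(inside) / mean(outside) - 1

reviews = [
    {"app": "A17", "judge": "Judge A", "judge_score": 9.5, "ai_score": 7.0},
    {"app": "A18", "judge": "Judge B", "judge_score": 6.5, "ai_score": 7.0},
]
for message in calibration_flags(reviews):
    print(message)  # Judge A scored A17 9.5, AI suggests 7.0. Recommend calibration.

applicants = [
    {"segment": "rural", "score": 6.6}, {"segment": "rural", "score": 7.0},
    {"segment": "urban", "score": 7.8}, {"segment": "urban", "score": 7.6},
]
print(f"{segment_gap(applicants, 'rural'):.0%}")  # -12%
```

A mean gap alone does not prove bias, since segments can differ in application quality. That is why the paragraph frames it as a prompt to review before finalizing rather than an automatic correction.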

Disagreement sampling surfaces cases where judges or AI diverge, triggering mid-cycle anchor refinement rather than post-cycle regret. Every fairness adjustment — prompt tweaks, anchor updates, panel rebalancing — is logged in a brief changelog that survives board scrutiny.

The same architectural principles — clean data, AI evaluation with citations, lifecycle tracking — apply across every award-based program. Here's how the capabilities map to specific use cases.

Foundations and universities use Sopact Sense to evaluate scholarship applications with AI-assisted holistic review. Intelligent Cell extracts financial need indicators, academic merit evidence, and leadership themes from essays — applying identical criteria to every application with sentence-level citations. Contacts track applicants across multiple years and programs, enabling longitudinal outcome tracking that connects selection decisions to graduation rates and career trajectories.

Grantmakers use Sopact Sense to process multi-page proposals with consistent evaluation rubrics. Intelligent Cell analyzes project methodology, budget feasibility, and outcome measurement plans. Intelligent Grid generates funder reports combining quantitative metrics with qualitative narrative evidence. For organizations running multiple funding streams, Contacts link the same grantee across programs and years — creating the lifecycle continuity needed to answer "did our funding decisions drive the outcomes we intended?"

Industry awards, employee recognition programs, and innovation challenges use Intelligent Cell to analyze entries that span qualitative narratives and quantitative evidence — product specifications, market data, impact measurements, creative portfolios. Multi-stage judging workflows route entries through screening, deep evaluation, and finalist selection with AI-generated briefs at each stage. Judges focus on genuine edge cases instead of reading every submission from scratch.

Fellowship programs evaluating researchers, artists, or community leaders through multi-stage processes use Intelligent Cell to analyze written applications, work samples, and reference letters simultaneously. Intelligent Row generates unified candidate profiles combining qualitative insights with quantitative indicators. The iterative refinement capability lets committees test scoring prompts against early submissions and refine before the full volume arrives.

Corporate social responsibility teams running multiple award-based programs — employee scholarships, community grants, volunteer recognition, social innovation competitions — use a single Sopact Sense instance. Contacts unify recipient identities across the entire CSR portfolio. AI analysis applies consistently regardless of program type. Executive dashboards show portfolio-level performance while drilling into program-specific outcomes with evidence drill-through.

Consider a community foundation managing a scholarship program that receives 800 applications annually for 50 awards. Their traditional process required a five-person team spending six weeks reviewing applications — each reading essays, cross-referencing transcripts, and manually scoring recommendation letters against a rubric that judges interpreted differently.

Applications arrived through an online portal. Staff exported data to spreadsheets, manually flagged incomplete files, and sent individual follow-up emails for missing documents. Once files were complete (week 2), judges began reading. Each application required 20 minutes of manual review. By week 4, scoring standards had drifted measurably — average scores declined 0.6 points compared to week 2 for applications of similar quality.

Final committee deliberation required two full-day meetings because panel members couldn't agree on how to weight conflicting judge impressions. When the board asked "why these 50?", the answer was narrative summaries with vague references — no sentence-level proof linking decisions to evidence.

Phase 1: Clean Intake (Week 1) — Contacts form generated unique IDs. All materials linked automatically. System validated document completeness on submission. File completion reached 94% within 5 days of deadline (vs. 71% previously).
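Validating completeness at submission means checking each record against a required-materials list before it is accepted, rather than flagging gaps in a spreadsheet afterward. The following is a minimal sketch. The required-document names and the record format are hypothetical, not the foundation's actual checklist.

```python
REQUIRED_DOCUMENTS = {"essay", "transcript", "recommendation_letter"}  # hypothetical

def missing_documents(submission):
    """Return the required documents that are absent or empty, sorted by name."""
    provided = {name for name, content in submission.items() if content}
    return sorted(REQUIRED_DOCUMENTS - provided)

def completion_rate(submissions):
    """Share of submissions with every required document present."""
    complete = sum(1 for s in submissions if not missing_documents(s))
    return complete / len(submissions)

submissions = [
    {"essay": "essay.pdf", "transcript": "t.pdf", "recommendation_letter": "r.pdf"},
    {"essay": "essay.pdf", "transcript": "", "recommendation_letter": "r.pdf"},
]
print(missing_documents(submissions[1]))  # ['transcript']
print(f"{completion_rate(submissions):.0%}")  # 50%
```

Returning the missing list at submission time lets the form prompt the applicant immediately. This is the kind of check that replaces manual flagging and individual follow-up emails.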

Phase 2: AI-Powered Pre-Scoring (Week 2) — Intelligent Cell scored every essay against rubric criteria with sentence-level citations. Intelligent Row generated one-page profiles combining essay insights with transcript highlights and recommendation letter evidence. Judges received pre-scored applications with supporting evidence — review time dropped from 20 minutes to 6 minutes per application.

Phase 3: Calibrated Committee Review (Week 3) — Intelligent Column flagged scoring inconsistencies and geographic disparities. Intelligent Grid generated comparative analyses of the top 80 candidates across all rubric dimensions with supporting quotes. Committee deliberation completed in one half-day session with evidence trails showing exactly how each finalist compared.

Phase 4: Lifecycle Tracking (Ongoing) — Contact IDs persisted. Annual surveys tracked graduation, employment, and community involvement. Three-year outcome data linked back to original application evidence — revealing which rubric dimensions actually predicted long-term success.

The Bottom Line: Review time fell from 6 weeks to 3 weeks. Per-application review dropped from 20 minutes to 6 minutes. Judge score divergence narrowed from 22% to 7%. Committee meetings shrank from 2 full days to 1 half day. Board confidence moved from vague summaries to sentence-level evidence drill-through.
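The before-and-after figures translate into percentage reductions as shown below. This is plain arithmetic on the case study's numbers. The total-hours line additionally assumes each of the 800 applications was read once in full, which the case study does not state.

```python
# Before/after figures from the case study's bottom line
metrics = {
    "review_weeks": (6, 3),
    "minutes_per_application": (20, 6),
    "judge_divergence_pct": (22, 7),
}

def reduction(before, after):
    """Fractional reduction from before to after."""
    return (before - after) / before

for name, (before, after) in metrics.items():
    print(f"{name}: {reduction(before, after):.0%} lower")
# review_weeks: 50% lower
# minutes_per_application: 70% lower
# judge_divergence_pct: 68% lower

# Total reading load in hours, assuming each of the 800 applications is read once
print(round(800 * 20 / 60, 1), 800 * 6 / 60)  # 266.7 80.0
```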

The biggest concern organizations have about switching award management platforms is implementation time. Enterprise tools can take weeks to months. Even dedicated award software like Evalato or Award Force requires configuration time for complex multi-stage programs.

Sopact Sense is designed for rapid deployment. Here's what a typical implementation looks like:

Day 1: Design your application form and define your rubric. Create the intake form using the drag-and-drop builder. Set up Contacts for unique applicant identification. Define scoring criteria — rubric dimensions, weights, anchor-based examples.

Day 1-2: Test with real or synthetic data. Submit 10 test applications. Configure Intelligent Cell prompts to score against your rubric. Run Intelligent Grid to generate a sample report. Refine until AI output matches your expectations.

Day 2-3: Open nominations. Share your application link. As entries arrive, Intelligent Cell scores them automatically with citations. Monitor quality, adjust rubric weights based on real data, iterate before full volume arrives.

Ongoing: Build lifecycle tracking. Add post-award data collection stages — progress reports, outcome surveys, alumni updates — as your program advances. All data links back to the original Contact ID. Track outcomes longitudinally to refine selection criteria between cycles.
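For the Day 1-2 test step, "refine until AI output matches your expectations" can be made concrete. Compare AI-proposed scores with the scores your own reviewers give the same test applications. The following is a minimal sketch. The 1-point tolerance and the 80% agreement target are hypothetical thresholds, not Sopact Sense defaults.

```python
from statistics import mean

def agreement_report(ai_scores, human_scores, tolerance=1.0):
    """Mean absolute difference and share of applications within tolerance."""
    if len(ai_scores) != len(human_scores):
        raise ValueError("score lists must be the same length")
    diffs = [abs(a - h) for a, h in zip(ai_scores, human_scores)]
    return {
        "mean_abs_diff": round(mean(diffs), 2),
        "within_tolerance": sum(d <= tolerance for d in diffs) / len(diffs),
    }

# Ten hypothetical test applications scored by the AI and by a staff reviewer
ai = [7, 8, 6, 9, 5, 7, 8, 6, 7, 9]
human = [7, 7, 6, 8, 7, 7, 9, 6, 5, 9]
report = agreement_report(ai, human)
print(report)  # {'mean_abs_diff': 0.7, 'within_tolerance': 0.8}
if report["within_tolerance"] < 0.8:  # hypothetical acceptance target
    print("Refine the scoring prompt or rubric anchors and re-run")
```

Reviewing the two applications that miss the tolerance usually reveals which rubric anchor the AI and the reviewer are reading differently. That is more useful than the summary number alone.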

No IT department involvement. No vendor customization fees. No waiting for implementation consultants. The platform is self-service by design, with guided onboarding support.