Build a modern theory of change model that connects strategy, data, and outcomes. Learn how organizations move beyond static logframes to dynamic, AI-ready learning systems — grounded in clean data, continuous analysis, and real-world decision loops powered by Sopact Sense.

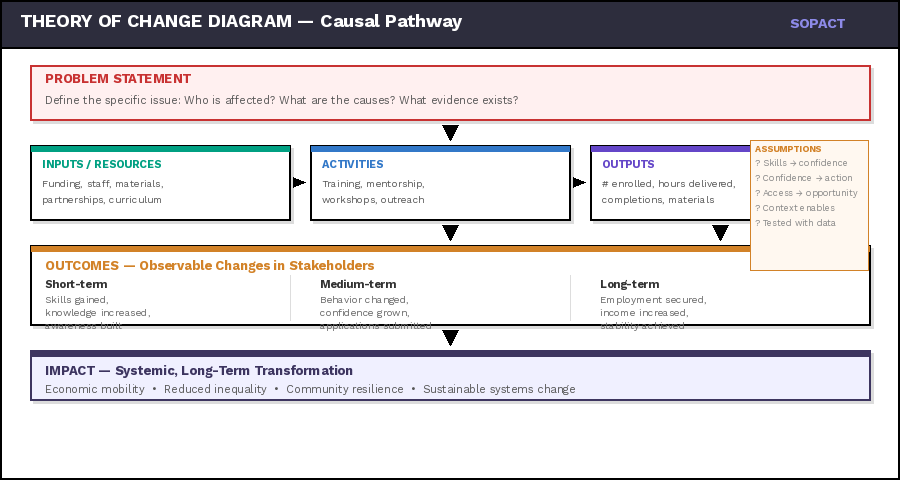

If someone asks you "How does your program create change?", can you explain it clearly? A theory of change model is simply your answer to that question — mapped out so anyone can follow your logic from what you do (activities) to what happens for people (outcomes) to the bigger transformation you're trying to create (impact).

Think of a Theory of Change as a roadmap built on cause-and-effect logic:

"If we do X → then Y will happen → which leads to Z."

For example:

"If we train young women in coding skills (activities) → then they gain confidence and technical abilities (outcomes) → which leads to tech employment and economic mobility (impact)."

It's the story of how change happens — each step logically connected to the next.

The "If → Then" chain in practice:

Every link in the chain answers two questions: How? and Why? How does one step lead to the next? Why do we believe that connection holds true?

Some practitioners call this a "program theory," "results chain," or "outcome mapping" — the labels differ, but the core idea is the same: articulating the causal logic behind your work so that everyone — funders, teams, and communities — can see how and why change happens.

Without this clarity, programs operate on hope. With it, they operate on evidence.

Unmesh Sheth, Founder & CEO of Sopact, explains why Theory of Change must evolve with your data — not remain a static diagram gathering dust.

Funders and boards don't want to hear: "We trained 200 people." They want to know: "Did those 200 people change? How? Why?" A theory of change model forces you to think beyond activities and prove transformation. Without it, you're just reporting how busy you were — not whether you actually helped anyone.

BUILDING BLOCKS

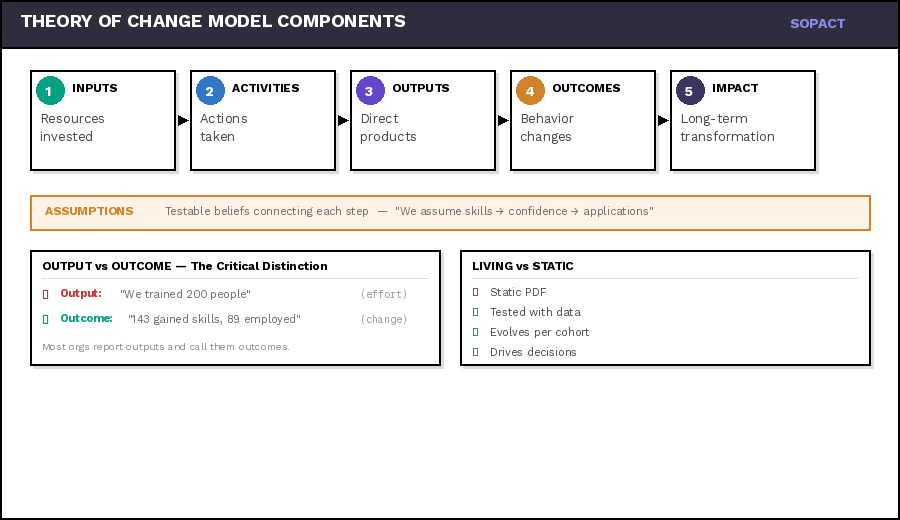

Every theory of change model has the same basic building blocks. Understanding these theory of change components helps you build your own. This visual framework shows how they connect — from what you invest (inputs) to the ultimate transformation you're creating (impact).

A theory of change diagram connects your resources, activities, and assumptions to measurable outcomes and long-term impact — showing not just what you do, but why it works and how you'll know.

1. Inputs — Resources invested to make change happen. Example: 3 instructors, $50K budget, laptops, curriculum

2. Activities — Actions your organization takes using inputs. Example: Coding bootcamp, mentorship, mock interviews

3. Outputs — Direct, measurable products of activities. Example: 25 enrolled, 200 hours delivered, 18 completed

4. Outcomes — Changes in behavior, skills, or conditions. Example: 18 gained skills, confidence 2.1→4.3, 12 employed

5. Impact — Long-term, sustainable systemic change. Example: Economic mobility, reduced gender gap in tech

Stakeholder-Centered — Built around the people you serve, not just organizational goals. Real change happens to real people.

Evidence-Based — Grounded in data — both qualitative stories and quantitative metrics that prove change is happening.

Assumption Testing — Identifies what must be true for change to occur, then tests those assumptions continuously.

Causal Pathways — Clear if-then logic showing how activities lead to outcomes, supported by evidence and theory.

A strong Theory of Change isn't created once — it's tested, refined, and strengthened with every piece of data you collect.

Output: "We trained 25 people" (what you did)

Outcome: "18 gained job-ready skills and 12 secured employment" (what changed for them)

Most organizations report outputs and call them outcomes. Funders see through this immediately. Your theory of change must focus on real transformation — not just proof you were busy.

Every theory of change model makes assumptions about how change happens: "We assume that gaining coding skills will increase confidence" or "We assume confident participants will actually apply for jobs." These assumptions are testable — and often wrong. A good theory of change makes assumptions explicit so you can test them with data. When assumptions break, your theory evolves.
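One way to keep assumptions explicit is to store each "if → then" link together with the assumption behind it. The sketch below is a minimal illustration, not a prescribed format: the class names `Link` and `TheoryOfChange` and the `status` values are hypothetical, and the example links reuse the coding-bootcamp wording from this guide.

```python
from dataclasses import dataclass, field


@dataclass
class Link:
    """One 'if -> then' step, plus the assumption that must hold for it."""
    cause: str
    effect: str
    assumption: str
    status: str = "untested"  # hypothetical labels: "untested", "supported", "broken"


@dataclass
class TheoryOfChange:
    name: str
    links: list[Link] = field(default_factory=list)

    def untested(self) -> list[Link]:
        """Return the links whose assumptions have not been checked against data."""
        return [link for link in self.links if link.status == "untested"]


toc = TheoryOfChange(
    name="Coding bootcamp for young women",
    links=[
        Link("coding training", "technical skills and confidence",
             "Gaining coding skills will increase confidence"),
        Link("technical skills and confidence", "job applications",
             "Confident participants will actually apply for jobs"),
    ],
)

# Suppose first-cohort data supported the first assumption.
toc.links[0].status = "supported"

for link in toc.untested():
    print(f"Still to test: {link.assumption}")
```

Keeping the assumption on the link itself means a broken assumption points directly at the step of the chain that needs revising.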

FRAMEWORK

Now that you understand the components, let's talk about how to actually build a theory of change framework that works. This is where methodology matters — because a beautiful theory of change diagram that sits on a wall is worthless. Your framework needs to be testable, measurable, and useful for making real decisions.

Most organizations build their theory of change framework, THEN try to figure out data collection. By then it's too late — you realize you can't actually measure what your theory claims. Design your measurement system FIRST, then build the theory it can validate. Otherwise your framework stays theoretical forever.

This guide teaches you the foundation. For more advanced resources:

→ AI-Driven Theory of Change Template: Interactive tool that helps you build your theory using AI to identify assumptions, suggest indicators, and design measurement approaches. See the Template tab for the full builder.

→ Theory of Change Examples: Real-world examples from workforce training, education, health, and social services showing different approaches and what makes them effective. See the Examples tab for four complete pathways.

STEP 1

The most common theory of change mistake: confusing what you do (outputs) with what changes for people (outcomes). "Trained 200 participants" is an output. "143 participants demonstrated job-ready skills and 89 secured employment within 6 months" is an outcome. One measures effort, the other measures transformation.

1. Identify Your Stakeholder Groups

Who experiences change because of your work? Not donors or partners — the people your programs serve. Be specific: "low-income women ages 18-24 seeking tech careers" beats "underserved communities."

Example: Workforce training program identifies three stakeholder groups: recent high school graduates, career changers 25-40, and displaced workers 40+. Each group has different starting points and barriers.

2. Define Observable, Measurable Changes

What will be different about stakeholders after your intervention? Use action verbs: demonstrate, gain, increase, reduce, achieve. Avoid vague terms like "empowered" or "transformed" without defining how you'll measure them.

Bad: "Participants will be more confident."

Good: "Participants will self-report increased confidence (measured on 5-point scale) and complete at least one job application."

3. Create Outcome Tiers: Short, Medium, Long

Short-term outcomes happen during or immediately after your program. Medium-term outcomes appear 3-12 months later. Long-term outcomes (impact) may take years. Map realistic timelines.

Short-term: Participants complete coding bootcamp with passing test scores

Medium-term: 70% apply for tech jobs within 3 months

Long-term: 60% employed in tech roles within 12 months, earning 40% more than pre-program
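Tiered targets like these can be checked mechanically once follow-up data exists. The following is a rough sketch under assumptions: the four participant records and the field names (`applied_within_3mo`, `employed_in_tech_12mo`) are invented for illustration, and only the 70% and 60% targets come from the example above.

```python
# Hypothetical follow-up records; real ones would come from your own tracking data.
participants = [
    {"id": "P1", "applied_within_3mo": True,  "employed_in_tech_12mo": True},
    {"id": "P2", "applied_within_3mo": True,  "employed_in_tech_12mo": False},
    {"id": "P3", "applied_within_3mo": False, "employed_in_tech_12mo": False},
    {"id": "P4", "applied_within_3mo": True,  "employed_in_tech_12mo": True},
]

# Targets taken from the medium-term and long-term tiers in the example.
targets = {"applied_within_3mo": 0.70, "employed_in_tech_12mo": 0.60}


def rate(records, key):
    """Share of records where the given boolean field is True."""
    return sum(1 for r in records if r[key]) / len(records)


results = {key: (rate(participants, key), target) for key, target in targets.items()}

for key, (actual, target) in results.items():
    status = "met" if actual >= target else "not met"
    print(f"{key}: {actual:.0%} vs target {target:.0%} ({status})")
```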

4. Establish Baseline Data Requirements

You can't measure change without knowing where people started. Before your program begins, collect baseline data on every outcome you plan to measure. This requires designing data collection into intake processes.

Baseline Questions: Current employment status? Previous coding experience? Confidence level (1-5 scale)? Barriers to job search? This becomes your "pre" measurement for later comparison.
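The baseline questions above can be captured as a small validated record at intake. This is only a sketch: the `BaselineRecord` class and its field names are hypothetical, and the one rule it enforces beyond requiring an ID is the 1-5 confidence scale mentioned in the questions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BaselineRecord:
    """Intake ('pre') measurement for one stakeholder."""
    stakeholder_id: str
    employed: bool
    prior_coding_experience: bool
    confidence: int   # self-rated, 1-5 scale
    barriers: str     # open-ended answer about barriers to job search

    def __post_init__(self):
        # Reject bad values at the point of collection instead of cleaning later.
        if not self.stakeholder_id:
            raise ValueError("stakeholder_id is required")
        if not 1 <= self.confidence <= 5:
            raise ValueError("confidence must be between 1 and 5")


record = BaselineRecord(
    stakeholder_id="S-001",
    employed=False,
    prior_coding_experience=False,
    confidence=2,
    barriers="No laptop at home",
)
```

Because the record is frozen, the baseline cannot be silently overwritten later, which keeps the "pre" measurement intact for comparison.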

5. Track Individual Stakeholders Over Time

This is where most theories break: aggregate data without individual tracking can't prove causation. You need to follow Sarah from intake (low confidence, no skills) → mid-program (building confidence, basic skills) → post-program (job offer, high confidence).

Critical: Every stakeholder needs a unique, persistent ID that links their baseline data, program participation, mid-point check-ins, and post-program outcomes. Without this, you're measuring different people at different times — not actual change.

Strong theory of change models track individuals first, then aggregate. Weak models collect anonymous surveys and hope patterns emerge. When you can say "Sarah moved from low confidence to high confidence because of mentor support" AND "67% of participants showed the same pattern," you have evidence-based causation.
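"Track individuals first, then aggregate" can be illustrated in a few lines. The sketch below assumes confidence scores keyed by a persistent stakeholder ID; the IDs and scores are made up for illustration.

```python
# Confidence (1-5) keyed by persistent stakeholder ID. Values are illustrative.
baseline = {"S-001": 2, "S-002": 3, "S-003": 2}
post = {"S-001": 4, "S-002": 3, "S-003": 5, "S-004": 4}  # S-004 has no baseline

# Step 1: compute change per individual, only where both measurements exist.
changes = {sid: post[sid] - baseline[sid] for sid in baseline if sid in post}

# Step 2: aggregate the individual changes.
improved = [sid for sid, delta in changes.items() if delta > 0]
share_improved = len(improved) / len(changes)

print(changes)                  # {'S-001': 2, 'S-002': 0, 'S-003': 3}
print(f"{share_improved:.0%}")  # 67%
```

Note that S-004 is excluded: without a baseline linked by ID, that post-program score cannot show change for that person.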

STEP 2

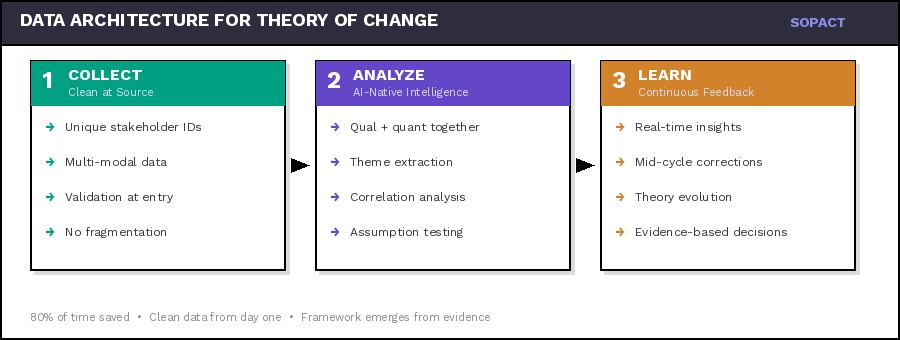

This is where theory of change models die: teams draw beautiful diagrams with arrows showing "skills lead to employment," then realize they collected survey data that can't possibly test that claim. Data architecture must precede theory building — or your theory remains untestable forever.

Organizations use Google Forms for applications, SurveyMonkey for feedback, Excel for tracking, and email for documents. When analysis time arrives, you discover names spelled differently across systems, no way to link the same person's responses, duplicates everywhere, and critical context lost. Teams spend 80% of their time cleaning data and 20% analyzing, if they analyze at all.

Unique Stakeholder IDs — Every person gets one persistent identifier that follows them through all program touchpoints. Not email (changes), not name (misspelled) — a system-generated unique ID.

Centralized Collection — All data collection happens in one platform or uses integrated systems with ID synchronization. Fragmentation breaks causation — you can't link Sarah's intake form to her exit survey if they live in different tools.

Longitudinal Tracking — You must collect data at multiple time points: baseline, mid-program check-ins, post-program, follow-up. Each data point links to the same stakeholder ID, creating a timeline of change.

Qualitative + Quantitative Together — Theory of change requires "why" not just "what." Collect numerical data (test scores, employment status) AND narrative data (interviews, open-ended responses, documents) about the same individuals.

Data Quality Mechanisms — Build in validation rules, allow stakeholders to correct their own data via unique links, prevent duplicates at the source. Clean data from the start beats cleaning messy data later.

Analysis-Ready Structure — Data should flow directly from collection to analysis without manual reshaping. If you're exporting CSVs and manually merging in Excel, your architecture is broken.

You can't build a theory of change framework that claims "mentoring increases confidence which leads to job applications" if your data architecture can't track which participants received mentoring, measure their confidence over time, and connect that to actual application behavior. Design the measurement system first, then build the theory it can actually validate.
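The first three requirements (unique IDs, duplicate prevention at the source, longitudinal tracking) can be sketched with the standard library alone. Everything here is an assumption made for illustration: the `StakeholderRegistry` class, the dedupe rule based on normalized name plus birth date, and the in-memory storage. It is not a description of how any particular platform works.

```python
import uuid
from collections import defaultdict


class StakeholderRegistry:
    """Issues one persistent ID per person and keeps a timeline per ID."""

    def __init__(self):
        self._id_by_key = {}               # dedupe key -> stakeholder ID
        self.timeline = defaultdict(list)  # stakeholder ID -> [(stage, data), ...]

    @staticmethod
    def _key(name, birth_date):
        # Hypothetical dedupe rule: case- and whitespace-normalized name plus birth date.
        return (" ".join(name.lower().split()), birth_date)

    def register(self, name, birth_date):
        """Return the existing ID for a known person, or issue a new system-generated one."""
        key = self._key(name, birth_date)
        if key not in self._id_by_key:
            self._id_by_key[key] = str(uuid.uuid4())
        return self._id_by_key[key]

    def record(self, stakeholder_id, stage, data):
        """Attach a data point (baseline, mid, post, follow-up) to one person's timeline."""
        self.timeline[stakeholder_id].append((stage, data))


registry = StakeholderRegistry()
sid = registry.register("Sarah Lee", "2003-05-14")
same = registry.register("  sarah   LEE ", "2003-05-14")  # same person, messy entry

registry.record(sid, "baseline", {"confidence": 2})
registry.record(sid, "post", {"confidence": 4})
```

The ID itself is a random UUID, so it does not change when a name is corrected or an email address is replaced; the dedupe key is only used to decide whether a new ID is needed.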

STEP 3

Numbers tell you what changed. Stories tell you why it changed. A theory of change model that relies solely on quantitative metrics produces correlation without explanation. "Test scores increased 15%" doesn't tell funders or program teams what actually worked. Mixed methods integration — done right — reveals causal mechanisms.

Q1 — Quantitative: What Changed

Structured data showing magnitude of change: test scores, self-reported confidence scales, employment status, application counts, earnings. Collected at baseline, mid-point, post-program. Aggregates to show program-wide patterns.

Q2 — Qualitative: Why It Changed

Narrative data revealing mechanisms: open-ended survey responses, interview transcripts, participant reflections, case study documents. Explains: "I gained confidence because my mentor believed in me and gave me real-world projects to build my portfolio."

M — Mixed: Causation Evidence

Integration layer connecting numbers to narratives: "67% increased confidence (quant) AND qualitative analysis shows the primary drivers were mentor support (45% of responses), peer learning (32%), and hands-on practice (23%). Now we know WHAT changed and WHY."

1. Collect Both Data Types Simultaneously

Don't separate quantitative surveys from qualitative interviews. In the same data collection moment, ask: "Rate your confidence 1-5" (quantitative) followed by "Why did you choose that rating?" (qualitative). Link both to the same stakeholder ID.

2. Design Questions That Probe Mechanisms

For every quantitative outcome in your theory, ask qualitative questions about process: "Your test score increased from 60% to 85%. What specific aspects of the program helped most?" This reveals which program components actually drive change.

3. Use Qualitative Data to Test Assumptions

Your theory assumes: "Skills lead to job applications." But interviews reveal: "I have skills but I'm too afraid to apply." Qualitative data exposes broken assumptions in your causal chain, allowing you to add missing links (confidence building, application support).

4. Analyze Qualitative Data at Scale

Traditional manual coding of 200 interview transcripts takes months. Modern approaches use AI to extract themes, sentiment, and causation patterns from qualitative data — while maintaining rigor. This makes mixed methods practical even for small teams.

5. Present Integrated Evidence

Don't report quantitative and qualitative findings separately. Integrate them: "Employment increased 40% (quant). Interviews reveal three critical success factors: mentor relationships (mentioned by 78%), portfolio development (65%), and mock interviews (54%) (qual). These become your proven program components."

Quantitative data alone shows correlation: "Participants who attended more mentor sessions had higher job placement rates." But correlation isn't causation — maybe motivated people attend more sessions. Qualitative data reveals the mechanism: "My mentor helped me reframe rejection as learning, which kept me applying until I succeeded." Now you have causal evidence.
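The integration step can be sketched as a small calculation: find who improved (quantitative), then count which themes those same people mentioned (qualitative). The records below are invented, and the sketch assumes theme codes have already been assigned to each open-ended answer, whether manually or with AI assistance.

```python
from collections import Counter

# Hypothetical responses, one per stakeholder, with pre/post ratings and coded themes.
responses = [
    {"id": "S-001", "pre": 2, "post": 4, "themes": ["mentor support"]},
    {"id": "S-002", "pre": 3, "post": 3, "themes": ["hands-on practice"]},
    {"id": "S-003", "pre": 2, "post": 5, "themes": ["mentor support", "peer learning"]},
    {"id": "S-004", "pre": 1, "post": 3, "themes": ["peer learning"]},
]

# Quantitative: who improved, and what share of the cohort is that?
improved = [r for r in responses if r["post"] > r["pre"]]
share_improved = len(improved) / len(responses)

# Qualitative: among those who improved, how often was each theme mentioned?
theme_counts = Counter(theme for r in improved for theme in r["themes"])
theme_share = {theme: n / len(improved) for theme, n in theme_counts.items()}

print(f"{share_improved:.0%} increased confidence")
for theme, share in sorted(theme_share.items()):
    print(f"  {theme}: mentioned by {share:.0%} of those who improved")
```

This only works because both data types are linked to the same stakeholder ID; with separate anonymous surveys and interviews, the second step would have nothing to join on.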

STEP 4

Traditional theory of change models treat evaluation as an endpoint: collect data all year, analyze in December, report in January. By then, programs have moved on and insights arrive too late. Living theory of change frameworks require continuous analysis, where insights inform decisions while programs are still running. This shift from annual reporting to continuous feedback is essential for effective monitoring and evaluation.

1. Automate Data Flow to Analysis — The moment a survey is submitted or interview transcript uploaded, it should flow directly to your analysis layer — no manual export/import.

2. Create Milestone Check-Ins — Don't wait until program end. Build check-ins at 25%, 50%, 75% completion. Adjust while there's time to matter.

3. Use AI for Immediate Qualitative Analysis — AI-powered analysis can extract themes, sentiment, and insights within minutes of data collection — making qualitative feedback actionable in real-time.

4. Empower Teams with Self-Service Insights — Program managers should be able to ask questions and get answers immediately without technical skills.

5. Test Assumptions Iteratively — Continuous data lets you test assumptions with progressively larger cohorts. Theory evolves based on evidence.

Organizations using continuous learning systems make better decisions because insights arrive while they matter. Discovering that mentor sessions drive 80% of outcomes mid-program lets you reallocate resources immediately. Learning the same thing in an annual report means another cohort missed the benefit.
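Milestone check-ins at 25%, 50%, and 75% completion can be scheduled from the program's start and end dates. This is a minimal sketch: the function name `milestone_dates` and the example dates are hypothetical.

```python
from datetime import date, timedelta


def milestone_dates(start, end, fractions=(0.25, 0.50, 0.75)):
    """Return the calendar dates at the given fractions of the program's duration."""
    total_days = (end - start).days
    return [start + timedelta(days=round(total_days * f)) for f in fractions]


# Example: a 100-day program running from 5 January to 15 April 2026.
checkins = milestone_dates(date(2026, 1, 5), date(2026, 4, 15))
for label, day in zip(("25%", "50%", "75%"), checkins):
    print(label, day.isoformat())
```

For this 100-day example the check-ins fall on 2026-01-30, 2026-02-24, and 2026-03-21.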

STEP 5

Static theory of change models become wall decorations. Living theory of change frameworks adapt as evidence accumulates: assumptions get validated or revised, causal pathways get strengthened or rerouted, and new context gets incorporated. Evolution requires systematic feedback — not annual strategic retreats.

v1 — Hypothesis Stage — Initial theory based on research, similar programs, and logic. Testable but unproven. Data collection architecture designed to validate each link.

v2 — Validation Stage — First cohort evidence reveals what holds true. Skills increased ✓, Confidence increased ✓, BUT Applications didn't follow. Theory evolves: add resume workshops, mock interviews, accountability partners.

v3 — Refinement Stage — More cohorts reveal nuance: mentor relationships correlate with 80% of successful outcomes. Theory becomes specific about what works.

v4 — Segmentation Stage — Evidence shows different paths for different people. Theory branches: same outcomes, differentiated pathways by stakeholder segment.

v5 — Predictive Stage — Sufficient data enables prediction: Based on intake profile, theory predicts which interventions each person needs. Theory becomes operational framework.

A theory of change should never be finished. Every new cohort tests assumptions. Every context shift requires adaptation. The difference between organizations that prove impact and those that hope for it: systematic evolution based on stakeholder evidence, not stubborn adherence to original diagrams.

Don't change your theory every time one data point surprises you — that's not evolution, that's chaos. Real evolution requires: sufficient sample size, consistent patterns across cohorts, qualitative data explaining mechanisms, and deliberate hypothesis testing. Change based on evidence, not anecdotes or assumptions.
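Part of this guardrail can be written down as a simple rule. The sketch below covers only two of the four conditions (sufficient sample size and consistent patterns across cohorts); the qualitative explanation and deliberate hypothesis testing still need human judgment. The function name `should_revise` and every threshold are illustrative assumptions, not recommendations.

```python
def should_revise(cohorts, min_n=30, min_cohorts=2, threshold=0.5):
    """Return True only if an assumption failed consistently in enough large cohorts.

    Each cohort is a dict with 'n' (participants) and 'held' (how many
    participants the assumption held for).
    """
    large = [c for c in cohorts if c["n"] >= min_n]
    failing = [c for c in large if c["held"] / c["n"] < threshold]
    return len(large) >= min_cohorts and len(failing) == len(large)


# One small, surprising cohort is not enough to change the theory.
print(should_revise([{"n": 8, "held": 2}]))                          # False

# Two large cohorts that disagree: keep testing rather than revising.
print(should_revise([{"n": 40, "held": 12}, {"n": 35, "held": 30}]))  # False

# Two large cohorts showing the same failure: evidence for revision.
print(should_revise([{"n": 40, "held": 12}, {"n": 35, "held": 10}]))  # True
```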

IMPLEMENTATION

Understanding theory of change methodology is one thing. Actually implementing it — with clean data, continuous analysis, and real-time adaptation — requires specific technical infrastructure. Most organizations discover too late that their existing tools can't support the theory of change framework they've designed.

Stakeholder Tracking System — Platform that assigns unique IDs, maintains contact records, and links all data collection to those IDs — like a lightweight CRM built for impact measurement.

Integrated Data Collection — Surveys, forms, interviews, documents all flow into one system — not scattered across Google Forms, SurveyMonkey, email, and folders.

Longitudinal Data Structure — Database architecture that links baseline → mid-program → post-program → follow-up data for the same individuals, preserving timeline and context.

Qualitative Analysis at Scale — AI-powered tools that extract themes, sentiment, causation patterns from open-ended responses, interviews, and documents — without months of manual coding.

Real-Time Analysis Layer — Insights available immediately after data collection — not batch processed quarterly. Enables continuous learning and mid-program adjustments.

Self-Service Reporting — Program teams can generate reports, test hypotheses, and explore data without technical expertise or bottlenecking through one analyst.

Traditional survey tools (SurveyMonkey, Google Forms, Qualtrics) collect data but lack stakeholder tracking and mixed-methods analysis. CRMs track people but aren't built for outcome measurement. BI tools analyze but can't fix fragmented data. Sopact Sense was designed specifically for theory of change implementation: persistent stakeholder IDs (Contacts), clean-at-source collection, AI-powered Intelligent Suite for qualitative + quantitative analysis, and real-time reporting — all in one platform. It's not about features. It's about architecture that makes continuous, evidence-based theory of change actually possible.

You can build the most brilliant theory of change framework on paper. But without infrastructure that tracks stakeholders persistently, integrates qual + quant data, and delivers insights while programs run, your theory stays theoretical. Most organizations discover this after wasting a year collecting unusable data. Design the measurement system first — then build the theory it can validate.
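To make the "longitudinal data structure" requirement concrete, here is a rough sketch of a two-table layout using Python's built-in `sqlite3` module. The table and column names are hypothetical and chosen for illustration; this is not the schema of Sopact Sense or any other product.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # enforce that responses point at a real contact
conn.executescript("""
CREATE TABLE contacts (
    id   TEXT PRIMARY KEY,
    name TEXT NOT NULL
);
CREATE TABLE responses (
    contact_id TEXT NOT NULL REFERENCES contacts(id),
    stage      TEXT NOT NULL CHECK (stage IN ('baseline', 'mid', 'post', 'follow_up')),
    confidence INTEGER CHECK (confidence BETWEEN 1 AND 5),
    comment    TEXT,
    PRIMARY KEY (contact_id, stage)  -- one response per person per stage
);
""")

conn.execute("INSERT INTO contacts VALUES ('S-001', 'Sarah')")
conn.executemany(
    "INSERT INTO responses VALUES (?, ?, ?, ?)",
    [
        ("S-001", "baseline", 2, "Never coded before"),
        ("S-001", "post", 4, "My mentor believed in me"),
    ],
)

# Pre/post comparison for the same individual, with the narrative alongside the number.
rows = conn.execute("""
    SELECT b.contact_id, b.confidence, p.confidence, p.comment
    FROM responses AS b
    JOIN responses AS p ON p.contact_id = b.contact_id
    WHERE b.stage = 'baseline' AND p.stage = 'post'
""").fetchall()
print(rows)
```

The composite primary key prevents duplicate submissions for the same stage, and the foreign key rejects responses that are not linked to a known stakeholder, so the data arrives in a shape that can be analyzed without manual merging.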

FRAMEWORK COMPARISON

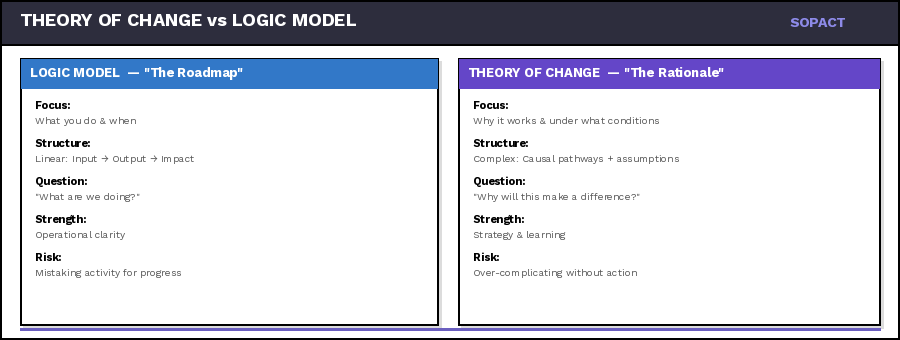

Both frameworks aim to make programs more effective, but they approach the challenge from opposite directions: a Logic Model describes what a program will do, while a Theory of Change explains why it should work. Understanding the difference between a theory of change and a logic model is essential for designing effective measurement systems.

A Logic Model is a structured, step-by-step map that traces the pathway from inputs and activities to outputs, outcomes, and impact. It provides a concise visualization of how resources are converted into measurable results.

This clarity makes it excellent for operational management, monitoring, and communication. Teams can easily see what's expected at each stage and measure progress against milestones.

📍 Shows the MECHANICS of a program

A Theory of Change operates at a deeper level: it doesn't just connect the dots, it examines the reasoning behind those connections. It articulates the assumptions that underpin every link in the chain.

Rather than focusing on execution, it focuses on belief: what has to be true about the system, the people, and the context for change to occur. It reveals what matters — the conditions that determine if outcomes are sustainable.

🧭 Shows the LOGIC of a program

Logic Model Gives You: Precision in implementation. A tool for tracking progress, ensuring accountability, and communicating what your program delivers at each stage.

Theory of Change Gives You: A compass for meaning. A framework for understanding why your work matters, surfacing assumptions, and connecting data back to purpose.

Without Logic Model: You risk losing operational clarity, making it hard to monitor progress, communicate results, or maintain accountability with funders.

Without Theory of Change: You risk mistaking activity for impact, overlooking the underlying factors that determine whether outcomes are sustainable.

The best impact systems keep both alive — Logic Model as a tool for precision, Theory of Change as a compass for meaning. Together, they transform measurement from a compliance exercise into a continuous learning process.

Get answers to the most common questions about developing, implementing, and using Theory of Change frameworks for impact measurement.

The term "theory of change model" refers to the visual or conceptual framework that illustrates your causal pathway. It's the diagram, flowchart, or narrative document that maps how inputs lead to impact. Common formats include logic models, results chains, outcome maps, and pathway diagrams. The specific format matters less than clarity: Can your team, funders, and stakeholders understand the pathway? Can you test assumptions with data?

Avoid confusion: "Model" and "framework" are often used interchangeably. Both describe the structure; what matters is whether your model is static (drawn once, rarely revised) or dynamic (continuously validated with evidence).

A logic model is a structured map showing inputs, activities, outputs, outcomes, and impact in a linear flow. It's operational and monitoring-focused, designed to track whether you delivered what you promised. A Theory of Change goes deeper by explaining how and why change happens. It surfaces assumptions, contextual factors, and causal pathways that connect your work to outcomes. Think of the logic model as the skeleton and Theory of Change as the full body — one gives structure, the other gives meaning.

Sopact approach: We treat them as complementary. Use a logic model for program tracking, but embed it within a Theory of Change that includes learning loops, stakeholder feedback, and adaptive mechanisms powered by clean, continuous data.

A comprehensive Theory of Change includes six core components: (1) Inputs — resources invested; (2) Activities — what you do with those resources; (3) Outputs — direct products of activities; (4) Outcomes — changes in behavior, knowledge, skills, or conditions; (5) Impact — long-term systemic change; and (6) Assumptions & Context — what must be true for this pathway to work.

Often forgotten: Feedback loops. The most effective ToCs include mechanisms for continuous learning — regular check-ins, stakeholder input, and data-driven adjustments — so the model evolves as reality unfolds.

Start with the smallest viable statement of change: Who are you serving? What needs to shift? How will you contribute? Don't aim for perfection — aim for measurable and adaptable.

Four-step iterative process: (1) Map the pathway — identify inputs, activities, outputs, outcomes, and impact. (2) Surface assumptions — what must be true for this pathway to work? (3) Instrument data collection — design surveys, interviews, and tracking systems that test your assumptions from day one. (4) Review quarterly — let evidence challenge your model.

In M&E practice, Theory of Change serves as the blueprint for what to measure and why. It defines which indicators matter, what assumptions need testing, and how outcomes connect to long-term impact. Without a clear ToC, M&E becomes compliance theater — tracking outputs that nobody uses.

Key shift: Stop treating M&E as backward-looking compliance. Instead, instrument your Theory of Change with clean-at-source data collection so feedback informs decisions during the program cycle, not months after it ends.

Theory of Change is a system of thinking that describes how and why change happens in your context. It's not a document or diagram — it's a hypothesis about transformation that you test with evidence. At its core, ToC answers three questions: What needs to change? How will your actions create that change? What assumptions must be true for success?

A theory of change diagram visualizes the causal pathway from problem to impact. Start by placing your long-term goal (impact) at the top, then work backward: What outcomes must occur? What outputs must your activities produce? What inputs are needed? Draw arrows showing causal connections, and annotate each arrow with the assumption that must hold true.

Practical tip: Don't overcomplicate the diagram. Five to seven boxes with clear arrows is enough. The real value isn't in the diagram's complexity — it's in making your assumptions visible so you can test them with data.
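The "work backward, then annotate each arrow" approach can be turned into a diagram with a few lines of code. The sketch below emits Graphviz DOT text, which is a swapped-in choice of format rather than anything this guide prescribes. The box labels reuse the bootcamp example, and the assumption labels are placeholders written for illustration.

```python
# Boxes listed from impact back to inputs, mirroring the "work backward" approach.
steps = [
    "Impact: economic mobility",
    "Outcomes: skills, confidence, employment",
    "Outputs: participants complete bootcamp",
    "Activities: coding bootcamp, mentorship",
    "Inputs: instructors, budget, laptops",
]

# One assumption per arrow, in the same top-down order (placeholder wording).
assumptions = [
    "Tech employment leads to economic mobility",
    "Completion builds job-ready skills",
    "Participants attend and finish",
    "Resources are sufficient to deliver",
]


def to_dot(steps, assumptions):
    """Build DOT text with arrows running cause -> effect, labeled by assumption."""
    lines = ["digraph toc {", "  rankdir=BT;", "  node [shape=box];"]
    causal = list(reversed(steps))        # inputs first, impact last
    labels = list(reversed(assumptions))  # keep each label with its arrow
    for cause, effect, label in zip(causal, causal[1:], labels):
        lines.append(f'  "{cause}" -> "{effect}" [label="{label}"];')
    lines.append("}")
    return "\n".join(lines)


dot = to_dot(steps, assumptions)
print(dot)
```

With `rankdir=BT`, Graphviz draws inputs at the bottom and impact at the top, matching the layout described above. The printed text can be rendered with any DOT-compatible tool.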

Outputs are the direct, countable products of your activities — what you delivered. Outcomes are the changes that happened in people's lives because of what you delivered. "We trained 25 people" is an output. "18 gained job-ready skills and 12 secured employment" is an outcome. Your theory of change must push past outputs to measure real transformation.

Education programs use Theory of Change to connect teaching activities to learning outcomes and life changes. The key is measuring both skill acquisition and behavioral change — track attendance and test scores alongside qualitative signals like student confidence and parent engagement.

Common pitfall: Education ToCs often stop at outputs (students trained) rather than outcomes (skills applied, confidence gained). Instrument feedback loops at baseline, midpoint, and completion to capture transformation.

For nonprofits, a theory of change provides clarity about how programs create impact — moving beyond "we did things" to "here's the evidence our work transforms lives." For funders, it provides a testable hypothesis they can evaluate with data rather than anecdotes. Organizations that adopt living, data-driven theories of change report faster funder renewals, stronger grant applications, and better outcomes for the people they serve.

Are you looking to design a compelling theory of change template for your organization? Whether you’re a nonprofit, social enterprise, or any impact-driven organization, a clear and actionable theory of change is crucial for showcasing how your efforts lead to meaningful outcomes. This guide will walk you through everything you need to create an effective theory of change, complete with examples and best practices.

While ToC software can greatly facilitate the process, the core of an effective Theory of Change lies in its design. Here are some key principles to keep in mind:

The field of impact measurement is evolving. While various frameworks like Logic Models, Logframes, and Results Frameworks exist, they all serve a similar purpose: mapping the journey from activities to outcomes and impacts.

Key takeaways for the future of impact frameworks include:

Theory of Change is a powerful tool for social impact organizations, providing a clear roadmap for change initiatives. By understanding the key components of a ToC, leveraging software solutions like Sopact Sense, and focusing on stakeholder-centric, data-driven approaches, organizations can maximize their impact and continuously improve their strategies.

Remember, the true value of a Theory of Change lies not in its perfection on paper, but in its ability to guide real-world action and adaptation. By embracing a flexible, stakeholder-focused approach to ToC development and impact measurement, organizations can stay agile and responsive in their pursuit of meaningful social change.

To learn more about effective impact measurement and access detailed resources, we encourage you to download the Actionable Impact Measurement Framework ebook from Sopact at https://www.sopact.com/ebooks/impact-measurement-framework. This comprehensive guide provides in-depth insights into developing and implementing effective impact measurement strategies.

Real pathways. Real metrics. Real feedback.

Most theory of change examples die in PowerPoint. These live in data.

Every example below connects assumptions to evidence. You'll see what teams measure, how stakeholders speak, and which metrics predict lasting change. Copy the pathway structure, swap your context, and instrument it in minutes—not months.

By the end, you'll have:

Let's begin where most theories break: when assumptions meet reality.

🎯 Before You Copy: Each example is a starting hypothesis, not gospel. Treat the pathway as a scaffold: customize inputs, add context-specific assumptions, and version your evidence plan as you learn. What matters is clean IDs, related forms, and quarterly reflection on what surprised you.