What Is AI-Ready Data Management for Nonprofits?

Last updated: August 2025

By Unmesh Sheth — Founder & CEO, Sopact

Nonprofits are drowning in data but starving for insight. Annual surveys sit in Google Forms, case notes hide in Word docs, and PDFs pile up in shared drives. By the time staff reconcile it all, the context has already changed. This is why AI-ready data management has become essential: it turns disconnected data into continuous, clean, and actionable streams.

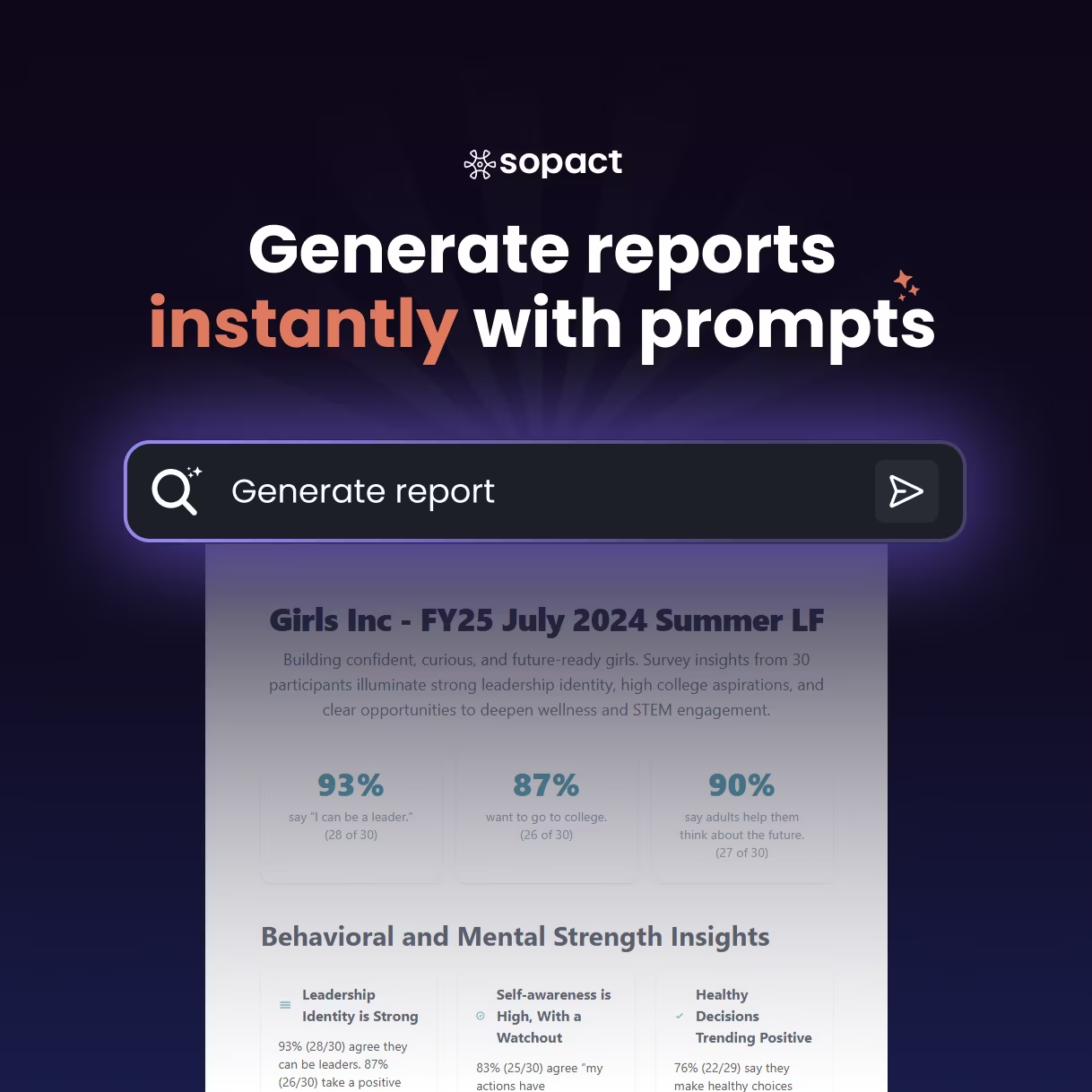

With Sopact Sense, nonprofits move from spreadsheets and silos to a centralized, AI-native system where every stakeholder story, outcome, and metric flows into a single feedback loop.

Why Does AI-Ready Data Management Matter for Nonprofits?

Traditional systems like Excel, SurveyMonkey, or basic CRMs promise simplicity but deliver fragmentation. Analysts often spend up to 80% of their time cleaning and reconciling data instead of analyzing it. For nonprofits with limited staff and resources, this is unsustainable.

AI-ready management flips the script. Data is collected clean at the source—using unique respondent IDs, validation, and automated correction. Once inside Sopact Sense, every entry becomes usable for analysis, dashboards, and storytelling.

How Does Sopact Sense Transform Nonprofit Data Workflows?

At its core, Sopact Sense connects quantitative surveys, qualitative feedback, and uploaded documents into one pipeline. Unlike legacy tools, it doesn’t just store data—it makes it analysis-ready.

Key capabilities include:

- Unique IDs: Prevent duplicates across surveys, forms, and interviews.

- Intelligent Cell™: Auto-codes open text, PDFs, and transcripts into themes and rubric scores.

- Real-Time Dashboards: Update as soon as new data is collected, with no manual reconciliation needed.

- Audit Trails: Every insight is linked back to its evidence for compliance and trust.

Before vs. After: Data Without vs. With Sopact Sense

Before: Legacy Tools

- Surveys in Google Forms.

- Case notes in Word/Excel.

- Analysts spend weeks cleaning data.

- Reports arrive months late.

After: Sopact Sense

- All inputs linked by unique IDs.

- Open text auto-coded into themes.

- Dashboards update in real time.

- Evidence linked for compliance.

What Tools and Features Make Data Truly AI-Ready?

Being AI-ready is not about hype—it’s about design. Tools like Sopact Sense integrate AI directly into workflows:

- Intelligent Columns™: Compare survey metrics (like confidence levels) with open-ended responses in seconds.

- Intelligent Grids™: Analyze multiple cohorts, demographic splits, and longitudinal data in a BI-ready format.

- Rubric Automation: Apply your existing frameworks to qualitative inputs consistently, at scale.

- Continuous Feedback: Turn stakeholder voices into living datasets, not static reports.

FAQ: AI-Ready Data Management for Nonprofits

What does AI-ready data mean for nonprofits?

It means data is captured clean, structured, and de-duplicated from the start—ready for automated analysis and dashboards. Nonprofits no longer waste months cleaning spreadsheets.

Why can’t traditional tools like Excel or SurveyMonkey deliver this?

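Used on their own, they tend to produce silos and duplicate records: each survey or spreadsheet stands alone, with no shared participant ID linking it to the others. Without unique IDs or AI integration, qualitative insights get lost and quantitative data stays disconnected.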

How does Sopact Sense ensure transparency?

Every score, theme, or insight is linked to its original evidence. Dashboards include traceable excerpts, making them defensible to funders and auditors.

Conclusion: From Fragmentation to Continuous Intelligence

AI-ready data management is no longer optional. Nonprofits that adopt centralized, clean, and continuous systems gain faster insights, stronger accountability, and the ability to improve programs in real time.

With Sopact Sense, what once took months now takes days. Reports are not static—they are living intelligence systems. That shift doesn’t just prove impact; it accelerates it.

Explore how Sopact Sense can help unify your data and amplify your mission.

Nonprofit Data Management — Frequently Asked Questions

Modern nonprofit data management means clean-at-source collection, unique IDs, centralized governance, and integrated qualitative and quantitative evidence that is BI-ready. Use this FAQ to align teams on foundations, privacy, integrations, and continuous learning.

What does “nonprofit data management” actually include?

It covers the full lifecycle: defining data standards and IDs, collecting clean data at the source, storing it in a centralized hub, governing privacy and access, and transforming it into analysis and reporting. For nonprofits, that means unifying forms, interviews, PDFs, case notes, and partner files across programs and cohorts. The goal is a single source of truth that supports faster learning and credible reporting.

Why are unique IDs essential for nonprofits?

Unique IDs prevent duplicates and connect every record across timepoints—intake, midline, exit, and follow-up. With one ID per participant, organization, or site, surveys, uploads, and notes become one linked story. This enables longitudinal analysis, reduces cleanup, and makes BI dashboards trustworthy.
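To make the linking concrete, here is a minimal Python sketch (an illustration, not Sopact's implementation) that groups intake and exit responses by a shared participant ID and computes a change score for everyone who has both timepoints. The field names `participant_id`, `timepoint`, and `confidence` and the sample records are hypothetical.

```python
from collections import defaultdict

# Hypothetical records: every row carries the participant_id assigned at intake.
records = [
    {"participant_id": "P001", "timepoint": "intake", "confidence": 2},
    {"participant_id": "P002", "timepoint": "intake", "confidence": 3},
    {"participant_id": "P001", "timepoint": "exit", "confidence": 4},
    {"participant_id": "P002", "timepoint": "exit", "confidence": 3},
]

def link_by_id(rows):
    """Build one timeline per participant, keyed by unique ID.

    If the same participant submits twice for one timepoint, the later
    row overwrites the earlier one.
    """
    timelines = defaultdict(dict)
    for row in rows:
        timelines[row["participant_id"]][row["timepoint"]] = row["confidence"]
    return dict(timelines)

def change_scores(timelines, start="intake", end="exit"):
    """Return end-minus-start change for participants who have both timepoints."""
    return {
        pid: points[end] - points[start]
        for pid, points in timelines.items()
        if start in points and end in points
    }

timelines = link_by_id(records)
print(change_scores(timelines))  # {'P001': 2, 'P002': 0}
```

Without the shared ID, the intake and exit rows would be two unrelated tables, and the change score could not be computed per person.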

How do we keep data clean without adding staff burden?

Validate at the source with required fields, controlled vocabularies, and guided corrections. Use unique links to eliminate duplicate submissions and embed light workflows for clarifications. Centralized rules (data dictionary, picklists) ensure consistency across forms and cohorts, cutting cleanup dramatically.
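As an illustration, here is a minimal sketch of source-level validation driven by a small data dictionary: required fields, a picklist (controlled vocabulary), and a numeric range. The rules, field names, and program names are hypothetical; the idea is that checks like these run before a submission is accepted, so the respondent can correct it on the spot.

```python
# Hypothetical data dictionary rules; field and program names are illustrative.
REQUIRED = {"participant_id", "program", "confidence"}
PICKLISTS = {"program": {"Coding Bootcamp", "Mentorship"}}
RANGES = {"confidence": (1, 5)}

def validate(submission):
    """Return human-readable errors; an empty list means the submission is clean."""
    errors = []
    # Required fields: missing keys and empty strings both count as missing.
    for field in sorted(REQUIRED):
        if submission.get(field) in (None, ""):
            errors.append(f"{field} is required")
    # Controlled vocabularies: non-empty values must come from the picklist.
    for field, allowed in PICKLISTS.items():
        value = submission.get(field)
        if value not in (None, "") and value not in allowed:
            errors.append(f"{field} must be one of {sorted(allowed)}")
    # Numeric ranges: applied to integer values only in this sketch.
    for field, (low, high) in RANGES.items():
        value = submission.get(field)
        if isinstance(value, int) and not low <= value <= high:
            errors.append(f"{field} must be between {low} and {high}")
    return errors

print(validate({"participant_id": "P001", "program": "Mentorship", "confidence": 4}))
# []
print(validate({"participant_id": "", "program": "Mentoring", "confidence": 7}))
# three errors: missing ID, unknown program, out-of-range score
```

Keeping the rules in one shared structure, rather than inside each form, is what lets the same checks apply across forms and cohorts.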

What’s the role of qualitative data in a nonprofit data stack?

Narratives explain the numbers. Interviews, focus groups, and open-text responses reveal the “why” behind outcome shifts, risks, and barriers. When clustered and aligned to metrics, qualitative insights drive better decisions than scores alone, especially for funder diligence and learning agendas.

How should nonprofits think about integrations and BI tools?

Prioritize a hub-and-spoke model: collect and standardize in one central hub, then publish to BI (e.g., Power BI, Looker Studio) as needed. Keep schemas stable and governance clear so dashboards don’t break with every change. This reduces IT tickets and supports rapid iteration.

What governance and privacy practices are must-haves?

Minimize PII, apply role-based access, and document consent and retention. Use audit trails for edits, and monitor data quality (missingness, outliers, duplicates). Clear data ownership, change logs, and a living data dictionary keep everyone aligned and compliant.
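The quality monitoring described above can start small. A minimal sketch, assuming records are Python dictionaries from a single timepoint (so each ID should appear only once); it reports the share of missing values per field and any duplicated IDs. The field names and sample rows are hypothetical, and outlier checks could follow the same pattern but are left out here.

```python
from collections import Counter

def quality_report(rows, fields, id_field="participant_id"):
    """Summarize missingness per field and list IDs that appear more than once."""
    if not rows:
        return {"rows": 0, "missing_rate": {}, "duplicate_ids": []}
    total = len(rows)
    # Share of rows where each field is absent, None, or an empty string.
    missing_rate = {
        field: sum(1 for r in rows if r.get(field) in (None, "")) / total
        for field in fields
    }
    # Within one timepoint, any ID counted more than once is a duplicate.
    id_counts = Counter(r.get(id_field) for r in rows)
    duplicates = sorted(pid for pid, n in id_counts.items() if pid and n > 1)
    return {"rows": total, "missing_rate": missing_rate, "duplicate_ids": duplicates}

rows = [
    {"participant_id": "P001", "confidence": 4, "why": "Mentor support"},
    {"participant_id": "P002", "confidence": None, "why": ""},
    {"participant_id": "P001", "confidence": 3, "why": "Schedule conflicts"},
    {"participant_id": "P003", "confidence": 5, "why": ""},
]
report = quality_report(rows, ["confidence", "why"])
print(report["missing_rate"])   # {'confidence': 0.25, 'why': 0.5}
print(report["duplicate_ids"])  # ['P001']
```

Running a report like this on a schedule turns data quality into a number the team can watch, rather than something discovered at reporting time.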

How do we align data with outcomes for funders and boards?

Start with 3–5 priority outcomes and define leading/lagging indicators for each. Pair short scales with a “why” prompt to capture context, then map internal KPIs to frameworks (SDGs, IRIS+) for comparability. This makes reporting credible while keeping frontline tasks simple.

How often should we collect data to stay useful but not intrusive?

Match the cadence to decisions: lightweight pulse checks at milestones, deeper surveys per term or cohort, and targeted follow-ups post-program. Keep instruments short and consistent, and embed them into existing workflows so response quality stays high.

Where do spreadsheets and legacy CRMs fit in a modern stack?

They can remain as systems of convenience, but the central hub should be the system of record. Sync via stable pipelines, standardize fields, and avoid manual copy-paste. Over time, retire bespoke sheets to lower the risk of version drift and data loss.
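Field standardization is the step most often done by hand. A minimal sketch, assuming a hypothetical mapping from legacy spreadsheet or CRM column names to the hub's canonical field names; it illustrates the idea rather than any specific product feature.

```python
# Hypothetical mapping from legacy column names to canonical field names.
FIELD_MAP = {
    "Participant ID": "participant_id",
    "ParticipantID": "participant_id",
    "Prog": "program",
    "Program Name": "program",
    "Confidence (1-5)": "confidence",
}

def standardize(row, field_map=FIELD_MAP):
    """Rename known columns and trim whitespace from string values.

    Unmapped columns keep their original (trimmed) names so they can be
    reviewed and added to the mapping rather than silently dropped.
    """
    out = {}
    for key, value in row.items():
        canonical = field_map.get(key.strip(), key.strip())
        out[canonical] = value.strip() if isinstance(value, str) else value
    return out

legacy_crm_row = {"ParticipantID": " P001 ", "Program Name": "Mentorship", "Notes": "Call back"}
print(standardize(legacy_crm_row))
# {'participant_id': 'P001', 'program': 'Mentorship', 'Notes': 'Call back'}
```

Because the mapping lives in one place, a renamed column in a legacy sheet becomes a one-line change instead of a broken pipeline.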

What’s the quickest path to get started without a big rebuild?

Pilot one program with a clean form set tied to unique IDs. Centralize incoming data, add one “why” field to key scales, and publish a small, living dashboard. Run monthly learning huddles to act on findings and refine instruments before expanding.

How does Sopact help with nonprofit data management specifically?

Sopact centralizes forms, IDs, documents, and qualitative inputs in one hub. Its Intelligent Suite analyzes open-text responses and interviews in minutes and aligns themes with outcomes. Data becomes BI-ready by design, so teams iterate 20–30× faster and report with confidence.

How do we avoid vendor lock-in as we scale our data practice?

Keep your schema, IDs, and dictionaries portable; prefer open formats and documented exports. Separate collection and analytics concerns so you can publish to any BI tool. With clean, standardized data at the core, you can evolve tools without restarting from zero.
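Portability largely comes down to exporting data in an open format together with its dictionary. A minimal sketch using Python's standard `csv` and `json` modules; the dictionary contents, field names, and the `export_portable` helper are hypothetical.

```python
import csv
import io
import json

# Hypothetical data dictionary; its key order defines the export's column order.
DATA_DICTIONARY = {
    "participant_id": {"type": "string", "description": "Unique ID assigned at intake"},
    "timepoint": {"type": "string", "allowed": ["intake", "exit"]},
    "confidence": {"type": "integer", "range": [1, 5]},
}

def export_portable(rows, dictionary=DATA_DICTIONARY):
    """Return (csv_text, json_text): the data as CSV plus its dictionary as JSON.

    Columns not listed in the dictionary are ignored, so internal fields
    are not exported by accident.
    """
    columns = list(dictionary)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue(), json.dumps(dictionary, indent=2)

rows = [{"participant_id": "P001", "timepoint": "intake", "confidence": 2, "staff_note": "internal"}]
csv_text, dict_json = export_portable(rows)
print(csv_text)
```

Since the column order comes from the dictionary, every export presents the same schema to downstream BI tools, and the JSON dictionary travels with the data so another system can interpret it without the original tool.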