Use theory of change in monitoring and evaluation to test program logic continuously. AI-powered ToC monitoring with real examples and frameworks.

You built a beautiful theory of change diagram. Inputs flow to activities flow to outcomes flow to impact. The logic looks clean on a whiteboard. Then your program launches, and that diagram never gets looked at again.

This is the core problem with how most organizations use theory of change in monitoring and evaluation. The ToC becomes a planning artifact — something created for a grant proposal or board presentation — while actual program decisions happen based on gut instinct, anecdotal feedback, or whatever data someone managed to pull from three different spreadsheets last Tuesday.

The disconnect is not a people problem. It is an architecture problem. When your data collection tools do not connect to your outcome framework, when qualitative feedback stays trapped in documents nobody codes, and when pre/post survey data requires hours of manual merging before anyone can ask "is our pathway working?" — your theory of change cannot function as what it is meant to be: a testable hypothesis about how change happens.

In 2026, organizations that treat their ToC as a living system — tested continuously against real data, updated when assumptions fail, and connected to decisions that happen while programs are still running — will outperform those still treating it as a static PDF. This guide shows you how to make that shift using Sopact Sense, an AI-native platform that connects your theory of change logic directly to clean, longitudinal data.

Unmesh Sheth, Founder & CEO of Sopact, explains why Theory of Change must evolve with your data — not remain a static diagram gathering dust.

Theory of change in monitoring and evaluation is the practice of using your program's causal logic — the pathway from activities to outcomes to impact — as an active framework for tracking progress, testing assumptions, and improving interventions in real time. Rather than treating the ToC as a one-time planning document, theory of change monitoring embeds your if-then logic into your data collection, analysis, and reporting workflows so every piece of evidence you gather either validates or challenges your program hypothesis.

A theory of change maps the causal pathway: if we do X, then Y will happen, which leads to Z. Monitoring and evaluation provides the data infrastructure: systematic tracking of outputs, outcomes, and impact. When you combine them, you get something neither delivers alone: a continuous learning system where your program logic is tested against reality as evidence accumulates, not just reported on at the end of a funding cycle.

Three shifts make theory of change monitoring more critical — and more achievable — than ever before. First, funders are moving from output counting to outcome verification; they want evidence that your pathway works, not just proof that you implemented activities. Second, AI-powered qualitative analysis now makes it possible to code open-ended feedback in minutes rather than weeks, meaning you can actually test the "why" behind your outcomes at scale. Third, participants expect their voices to shape programs, not just decorate reports — and continuous ToC testing is the mechanism for that responsiveness.

Assumption testing is the core of theory of change in evaluation. Your ToC contains assumptions — that participants will engage, that skills transfer to behavior change, that behavior change leads to improved conditions. Each assumption needs an indicator and a data source. When data contradicts an assumption, you update either the intervention or the logic model.

Causal pathway validation means tracking whether each step in your ToC actually connects to the next. Do your activities produce the outputs you expected? Do those outputs lead to the outcomes you predicted? This requires longitudinal data — following the same participants over time — which demands clean data architecture with unique identifiers from day one.

Feedback integration closes the loop. Quantitative metrics tell you what is happening; qualitative feedback from participants tells you why. A living theory of change monitoring system integrates both, so you do not just know that confidence scores increased — you understand that participants attribute the increase to peer mentorship, not the curriculum itself.

Most organizations invest significant effort building their theory of change during proposal development. The logic model gets reviewed, refined, and approved. Then the program launches, and nobody connects the actual data flowing in to the assumptions mapped on the wall. Survey questions do not align with ToC indicators. Qualitative data collection captures stories but not the specific evidence needed to test causal links. The gap between what the ToC says should happen and what the data actually shows remains invisible because nobody has the time or infrastructure to make the comparison.

Testing whether your ToC pathway holds requires connecting data across time points and data types. Did the person who reported increased confidence at mid-point actually secure employment at follow-up? Answering this requires linking pre, mid, and post data for the same individual. When your intake form is in Google Forms, your mid-point survey is in SurveyMonkey, and your follow-up tracking is in a spreadsheet, this linking is either impossible or requires weeks of manual data merging. The result: you cannot test your causal pathway because your data architecture does not support it.
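To make the linking problem concrete, here is a minimal sketch in plain Python of what "linking pre, mid, and post data for the same individual" involves once every record carries a shared ID. The field names (`participant_id`, `confidence`, `employed`) and the sample records are hypothetical, and this illustrates the principle only, not how any particular platform implements it.

```python
from collections import defaultdict

# Hypothetical exports from three separate tools; all field names are assumptions.
intake = [
    {"participant_id": "P001", "confidence": 2},
    {"participant_id": "P002", "confidence": 3},
]
midpoint = [
    {"participant_id": "P001", "confidence": 4},
]
follow_up = [
    {"participant_id": "P001", "employed": True},
    {"participant_id": "P002", "employed": False},
]

def link_waves(**waves):
    """Merge the records from each wave into one dict per participant ID."""
    linked = defaultdict(dict)
    for wave_name, records in waves.items():
        for record in records:
            linked[record["participant_id"]][wave_name] = record
    return dict(linked)

def missing_waves(linked, expected):
    """Return {participant_id: [waves with no record]} for incomplete journeys."""
    gaps = {}
    for pid, waves in linked.items():
        absent = [wave for wave in expected if wave not in waves]
        if absent:
            gaps[pid] = absent
    return gaps

linked = link_waves(intake=intake, midpoint=midpoint, follow_up=follow_up)
gaps = missing_waves(linked, ["intake", "midpoint", "follow_up"])
print(gaps)
```

The merge itself is trivial once the ID exists. The weeks of manual work come from reconstructing that ID after the fact from names and email addresses that do not match across tools.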

Theory of change evaluation needs qualitative evidence. Numbers tell you confidence scores went up; interviews tell you why. But most organizations collect qualitative feedback and then do nothing with it at scale. Interview transcripts sit in folders. Open-ended survey responses get skimmed for cherry-picked quotes. Nobody systematically codes 500 open-ended responses to find patterns that validate or challenge the ToC pathway. Traditional qualitative data analysis takes too long, so the richest evidence your participants provide never reaches the decision table.

Sopact Sense is an AI-native platform that solves the three problems above by connecting clean data collection, automated qualitative analysis, and continuous reporting in a single system. Here is how it transforms theory of change in monitoring and evaluation from a static exercise to a live learning loop.

Every participant gets a persistent unique identifier in Sopact Contacts. When that participant completes an intake survey, a mid-program check-in, and an exit assessment, all three data points link automatically. No manual merging. No duplicate records. No Excel chaos. This clean-at-source architecture is the prerequisite for ToC pathway testing — you cannot validate cause-and-effect if you cannot follow individuals through your program logic over time.

Sopact's four-layer Intelligent Suite turns raw data into ToC-relevant insights in minutes:

Intelligent Cell extracts themes, scores, and categories from individual open-ended responses. If a participant writes "the mentorship sessions gave me confidence to apply for jobs," Intelligent Cell can classify this as evidence for your "mentorship → confidence → job readiness" pathway.

Intelligent Column analyzes patterns across all responses for a single metric. It reveals what participants collectively say about confidence, barriers, or program strengths, giving you aggregate evidence for each node in your ToC.

Intelligent Grid cross-analyzes multiple variables simultaneously. It can show whether participants who reported high mentorship engagement also showed higher confidence gains and better employment outcomes — testing your complete causal chain in one view.

Intelligent Row provides individual-level longitudinal views, so you can trace a single participant's journey through your entire ToC pathway from intake to impact.

Sopact generates live, shareable impact reports that align directly to your theory of change logic. Instead of waiting months for an evaluation consultant to write a report, program managers see whether their ToC pathway is working as data comes in. This enables the most important shift: from retrospective evaluation to prospective learning. You adapt programs based on evidence while there is still time to make a difference.

One of the most common points of confusion in monitoring and evaluation is the relationship between a theory of change, a logic model, and a results framework. They are related but serve different purposes, and understanding the distinction matters for how you design your data collection.

A theory of change articulates why change happens — the causal logic, assumptions, and conditions that must hold for your program to produce its intended outcomes. It is explanatory and includes the contextual factors, risks, and if-then reasoning that connect activities to impact.

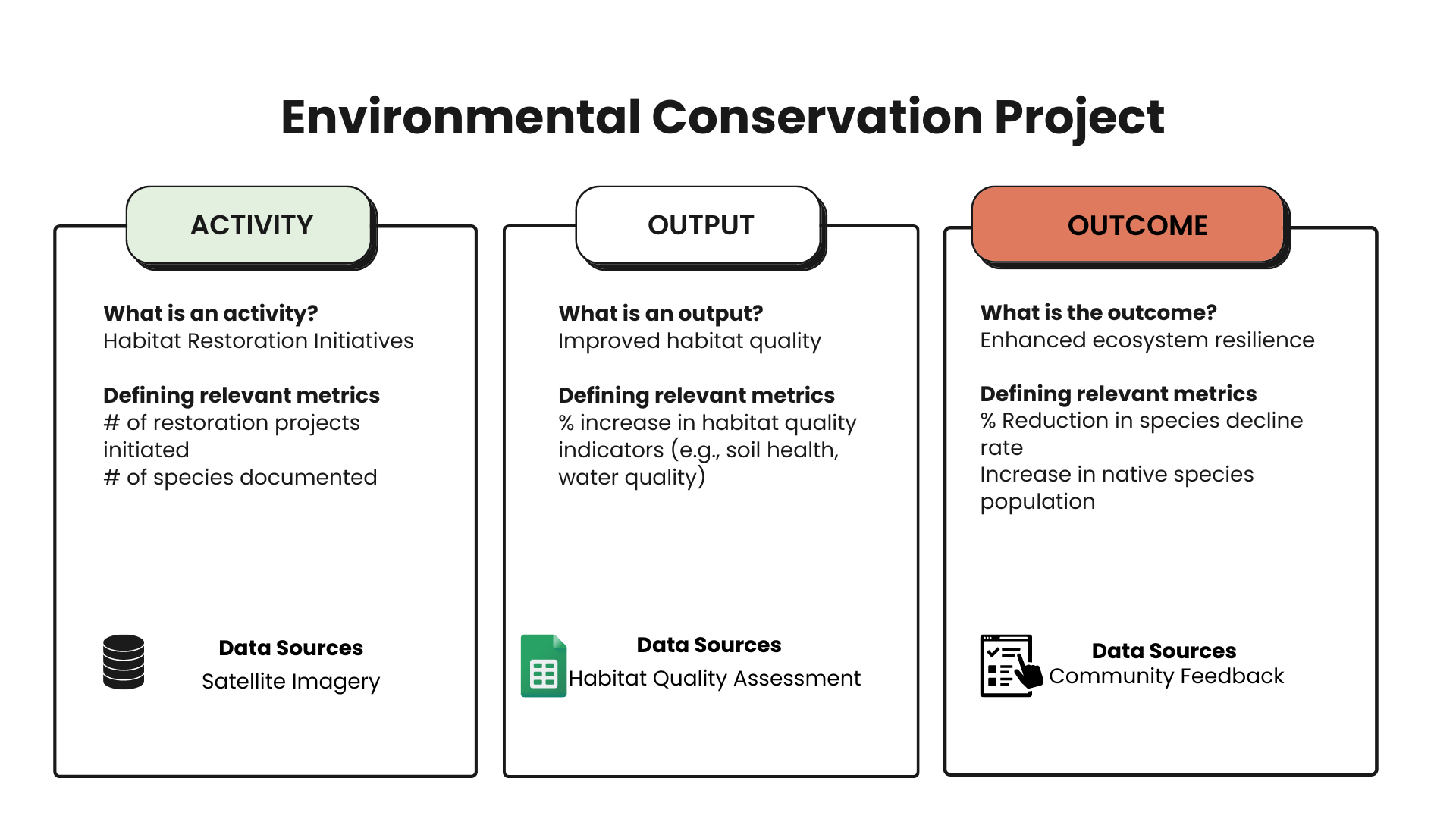

A logic model maps what your program does — inputs, activities, outputs, outcomes, impact — in a linear visual format. It is descriptive and shows the operational pathway without necessarily explaining the causal mechanisms behind it.

A results framework defines how you will measure progress — the specific indicators, targets, data sources, and collection methods for each level of your program logic. It is operational and tells M&E teams exactly what data to collect and when.

The best approach uses all three together: your theory of change provides the "why," your logic model provides the "what," and your results framework provides the "how." When connected to a platform like Sopact Sense, these three layers become a testable system rather than separate documents in different folders.

The ToC Logic: Technical training → skill acquisition → confidence building → job placement → economic mobility.

The Testing Challenge: The organization tracked training hours (output) and job placements (outcome), but had no data on the intermediate steps — whether skills actually improved, whether confidence genuinely changed, and what specific program elements participants credited for their progress.

With Sopact: Participants completed linked pre, mid, and post surveys through unique IDs. Intelligent Cell analyzed open-ended responses about confidence drivers. Intelligent Column correlated quantitative skill assessments with qualitative feedback. The result: the team discovered that hands-on project work — not classroom instruction — was the primary driver of both confidence and placement. They restructured the curriculum mid-year, and the next cohort's placement rate improved significantly.

The ToC Logic: After-school tutoring → improved academic performance → higher graduation rates → career readiness.

The Testing Challenge: Tutoring attendance was tracked but disconnected from academic outcomes. Teachers provided qualitative feedback in narrative reports that nobody analyzed systematically. The organization could report outputs (sessions delivered) but could not demonstrate the causal pathway.

With Sopact: Student IDs linked tutoring attendance data to academic performance indicators and qualitative feedback from teachers and students. Intelligent Grid cross-analyzed attendance patterns, grade improvements, and thematic feedback. The insight: students who attended consistently but whose grades did not improve had a common pattern in their feedback — they needed subject-specific support, not general tutoring. The program added targeted math and reading specialists, and academic outcomes improved within one semester.

The ToC Logic: Capacity-building grants → stronger organizational practices → better program delivery → improved community outcomes.

The Testing Challenge: Grantees submitted annual reports in different formats. The foundation could not aggregate qualitative feedback across the portfolio or test whether capacity-building investments actually correlated with improved community outcomes two years later.

With Sopact: Standardized progress surveys with linked longitudinal tracking allowed the foundation to compare grantee trajectories. Intelligent Grid aggregated qualitative and quantitative data across the portfolio, revealing which types of capacity investment — training vs. coaching vs. infrastructure funding — correlated with sustained outcomes. The foundation reallocated resources toward coaching, which showed the strongest evidence chain.

Here is a practical framework for moving from a static theory of change to a continuous monitoring system.

For each node in your theory of change, define at least one quantitative indicator and one qualitative evidence source. Your activities node might track sessions delivered (quantitative) and facilitator observations (qualitative). Your outcomes node might track skill assessment scores (quantitative) and participant narratives about behavior change (qualitative).

The key discipline: if you cannot define how you will collect evidence for a ToC node, either the node is too vague or your data collection plan has a gap.
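One way to apply this discipline is to write the node-to-evidence mapping down as data and check it for gaps. The sketch below is illustrative: the node names and indicator labels are assumptions loosely based on the examples in this guide, and `evidence_gaps` is a hypothetical helper, not part of any product.

```python
# Hypothetical mapping of ToC nodes to evidence sources; all labels are illustrative.
toc_nodes = {
    "activities": {
        "quantitative": ["sessions_delivered"],
        "qualitative": ["facilitator_observations"],
    },
    "outputs": {
        "quantitative": ["training_hours"],
        "qualitative": [],
    },
    "outcomes": {
        "quantitative": ["skill_assessment_score"],
        "qualitative": ["participant_narratives"],
    },
    "impact": {
        "quantitative": [],
        "qualitative": [],
    },
}

def evidence_gaps(nodes):
    """Return {node: [missing evidence types]} for nodes lacking either type."""
    gaps = {}
    for name, sources in nodes.items():
        missing = [
            kind
            for kind in ("quantitative", "qualitative")
            if not sources.get(kind)
        ]
        if missing:
            gaps[name] = missing
    return gaps

node_gaps = evidence_gaps(toc_nodes)
print(node_gaps)
```

Any node that shows up in the result is either too vague to measure or missing from your data collection plan.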

Every survey question should connect to a specific assumption in your theory of change. If your ToC assumes that "training increases confidence," you need a confidence measure at intake and exit. If your ToC assumes that "confidence leads to job-seeking behavior," you need a job-seeking behavior measure at follow-up.

Include open-ended questions that let participants explain why change happened or did not happen. This qualitative evidence is what makes your ToC monitoring rigorous rather than superficial.
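The alignment between questions and assumptions can be audited before a survey goes out. The following sketch assumes a simple register in which each assumption names the measure it depends on and the waves where that measure is needed. The assumption names, question IDs, and wave labels are all hypothetical.

```python
from collections import defaultdict

# Hypothetical assumption register: the measure each assumption depends on
# and the survey waves where that measure must be collected.
assumptions = {
    "training_increases_confidence": {
        "measure": "confidence",
        "needed_at": {"intake", "exit"},
    },
    "confidence_leads_to_job_seeking": {
        "measure": "job_seeking_behavior",
        "needed_at": {"follow_up"},
    },
}

# Hypothetical draft survey: which measure each question captures, and when.
survey_questions = [
    {"id": "Q1", "measure": "confidence", "wave": "intake"},
    {"id": "Q2", "measure": "confidence", "wave": "exit"},
    {"id": "Q3", "measure": "satisfaction", "wave": "exit"},
]

def audit_survey(assumptions, questions):
    """Find assumptions with unmeasured waves and questions tied to no assumption."""
    waves_by_measure = defaultdict(set)
    for question in questions:
        waves_by_measure[question["measure"]].add(question["wave"])

    missing = {}
    for name, spec in assumptions.items():
        absent = spec["needed_at"] - waves_by_measure[spec["measure"]]
        if absent:
            missing[name] = sorted(absent)

    needed = {spec["measure"] for spec in assumptions.values()}
    unmapped = [q["id"] for q in questions if q["measure"] not in needed]
    return missing, unmapped

missing, unmapped = audit_survey(assumptions, survey_questions)
print(missing)
print(unmapped)
```

In this example the draft survey never measures job-seeking behavior at follow-up, and the satisfaction question does not test any stated assumption. Unmapped questions are not necessarily wrong, but each one should earn its place.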

You cannot test a causal pathway without following individuals over time. Assign every participant a unique identifier when they enter your program. Link all subsequent data points — surveys, assessments, administrative records — to that ID. Sopact Contacts handles this automatically, but the principle applies regardless of your platform: longitudinal tracking is the backbone of theory of change evaluation.

Set up automated analysis touchpoints. At mid-point, run Intelligent Column to see whether early outcome indicators are moving. At each data collection wave, run Intelligent Grid to check whether your causal pathway holds. Do not wait until the program ends to discover that your second ToC node is not connecting to your third.
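Independent of any platform, the check behind "does this node connect to the next one?" can be sketched as a comparison of rates. The example below assumes linked participant records with two hypothetical boolean fields, `confidence_gain` and `job_seeking`, and uses made-up data. It is a descriptive comparison, not a causal test, and small cohorts will produce noisy rates.

```python
# Hypothetical linked records, one per participant; data is made up.
participants = [
    {"id": "P001", "confidence_gain": True, "job_seeking": True},
    {"id": "P002", "confidence_gain": True, "job_seeking": True},
    {"id": "P003", "confidence_gain": True, "job_seeking": False},
    {"id": "P004", "confidence_gain": False, "job_seeking": False},
    {"id": "P005", "confidence_gain": False, "job_seeking": True},
    {"id": "P006", "confidence_gain": False, "job_seeking": False},
]

def link_rates(records, step, next_step):
    """Share of participants reaching next_step, split by whether they reached step."""
    groups = {True: [], False: []}
    for record in records:
        groups[bool(record[step])].append(bool(record[next_step]))
    return {
        reached: (sum(values) / len(values) if values else None)
        for reached, values in groups.items()
    }

rates = link_rates(participants, "confidence_gain", "job_seeking")
print(rates)
```

If the rate for participants who reached the earlier node is no higher than the rate for those who did not, that link in your pathway deserves a closer look, starting with the qualitative feedback from both groups.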

This is the step most organizations skip. When data shows that an assumption is wrong — that confidence does not actually lead to job-seeking behavior, for example — update your theory of change. Add the missing intermediate step. Revise the causal logic. Document what you learned. A theory of change that never changes based on evidence is not a theory — it is a wish.

Theory of change in monitoring and evaluation is the practice of embedding your program's causal logic into your data collection and analysis workflows. Rather than treating the ToC as a static planning document, you use it as a testable framework — collecting evidence at each node, validating assumptions continuously, and updating the logic model when data shows that your pathway needs revision. It transforms M&E from a compliance exercise into a learning system.

A theory of change explains why change happens, including causal mechanisms, assumptions, and contextual conditions. A logic model describes what a program does (inputs, activities, outputs, outcomes, impact) in a linear visual format. Think of it this way: a logic model is a map of your program's steps, while a theory of change is the argument for why those steps produce change. The best M&E systems use both together.

The core components of a theory of change include: the problem statement (what you are addressing), activities (what you do), outputs (what you produce), outcomes (the changes that result), impact (long-term transformation), assumptions (what must hold for the pathway to work), and indicators (how you measure progress at each level). Strong ToC models also include stakeholder perspectives, external conditions, and explicit causal linkages between each node.

Update your theory of change whenever evidence contradicts your assumptions. If mid-program data shows an expected outcome is not materializing, that signals a need to revise either the intervention or the causal logic. With continuous data collection and AI-powered analysis, many organizations review their ToC quarterly. The key principle: your theory of change should evolve as fast as your understanding of what works.

Small programs can use theory of change monitoring too. Qualitative feedback from even 15-20 participants can reveal whether your causal pathway holds, especially when analyzed systematically. Sopact's Intelligent Cell extracts themes from open-ended responses at any scale. Small programs often benefit most from continuous ToC monitoring because every cohort is a learning opportunity, and you cannot afford to waste an entire year discovering your pathway does not work.

A theory of change provides the explanatory logic — why and how your program creates change. A results framework translates that logic into measurable terms — specific indicators, targets, data sources, and collection schedules for each level of your program model. Think of the theory of change as the hypothesis and the results framework as the measurement plan for testing it.

Each assumption in your ToC needs a corresponding indicator and evidence source. If you assume "skills training leads to confidence," measure confidence at multiple time points and collect qualitative evidence about what drives confidence changes. Compare actual patterns against predicted ones. Sopact's Intelligent Grid cross-analyzes quantitative and qualitative data simultaneously, showing whether the causal connections you assumed are actually present in your data.
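As a rough illustration of comparing actual patterns against predicted ones, the sketch below takes hypothetical confidence scores at intake and exit and reports how many participants moved in the direction the assumption predicts. The scores, the wave names, and the `confidence_change` helper are assumptions for illustration. A real analysis would also weigh sample size and the qualitative evidence about why scores changed.

```python
# Hypothetical confidence scores per participant at two time points.
scores = {
    "P001": {"intake": 2, "exit": 4},
    "P002": {"intake": 3, "exit": 3},
    "P003": {"intake": 1, "exit": 3},
    "P004": {"intake": 4, "exit": 3},
}

def confidence_change(scores, before, after):
    """Return per-participant change, share who improved, and mean change."""
    changes = {
        pid: waves[after] - waves[before]
        for pid, waves in scores.items()
        if before in waves and after in waves
    }
    if not changes:
        return changes, None, None
    share_improved = sum(1 for c in changes.values() if c > 0) / len(changes)
    mean_change = sum(changes.values()) / len(changes)
    return changes, share_improved, mean_change

changes, share_improved, mean_change = confidence_change(scores, "intake", "exit")
print(changes)
print(share_improved, mean_change)
```

Here the assumption predicts a positive change, and half of the sample participants show one. The participants who stayed flat or declined are the ones whose open-ended responses are most worth reading.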

Qualitative data is essential for understanding why your ToC pathway works or fails. Quantitative metrics show what happened; qualitative evidence from participants explains the mechanisms. For example, if job placement rates increased, qualitative feedback reveals whether that was due to your skills training, your mentorship component, or external market conditions. Without qualitative analysis, theory of change evaluation stays superficial.

👉 With Sopact Sense, InnovateEd connects student grades, teacher feedback, and survey data to continuously test whether curriculum changes lead to improved STEM participation.

👉 Sopact Sense allows HealCare to integrate clinic records with patient narratives, so qualitative feedback (“I trust the mobile clinic”) is analyzed alongside biometric data.

👉 With Sopact Sense, GreenEarth aligns biodiversity surveys with community interviews, giving funders both ecological metrics and human stories of change.