Open-ended question examples that produce analyzable insight—not rambling responses. See 50+ templates for surveys, interviews, and research with explanations of what makes each effective.

Data teams spend the bulk of their day fixing silos, typos, and duplicates instead of generating insights.

Hard to coordinate design, data entry, and stakeholder input across departments, leading to inefficiencies and silos.

Questions that assume improvement occurred prevent negative responses. Neutral framing (what changed vs how we improved) captures actual impact, including failures.

Open-ended feedback, documents, images, and video sit unused—impossible to analyze at scale.

Traditional qualitative analysis collapses under volume. AI tools like Intelligent Cell process thousands of responses automatically while preserving the insight open-ended questions provide.

Most open-ended questions fail before respondents even start typing.

They're either too vague to produce useful answers or so leading they only confirm what you already believe. "Tell us about your experience" generates rambling responses. "How has our program improved your life?" assumes improvement occurred and only asks people to describe it.

The difference between open-ended questions that produce insight and those that waste time comes down to precision. Good open-ended questions create clear boundaries without constraining thinking. They invite specific examples without leading. They make respondents want to answer, not feel obligated.

This isn't about collecting more data. It's about collecting better data—responses you can actually code, analyze, and turn into decisions. Whether you're designing employee surveys, customer feedback forms, program evaluations, or research studies, the question determines the quality of what you get back.

By the end of this article, you'll see 50+ proven open-ended question examples organized by purpose, understand what separates effective from ineffective questions, know how to adapt templates to your specific context, recognize which question structures produce analyzable responses, and learn when to use open-ended questions instead of closed formats.

Let's start with why most open-ended questions produce unusable data—and how to fix it.

The best open-ended questions share four characteristics that separate insight from noise.

Specificity without constraint. "What happened?" is too broad. "How has our product changed your workflow in the last month—and what would make it more useful?" gives focus while leaving space for unexpected answers. The boundaries help respondents know what you're asking. The openness lets them tell you what matters.

Action-oriented language. "Describe your experience" produces abstractions. "Give an example of a moment when you felt challenged by this process" produces concrete stories. Verbs like describe, explain, give an example, tell us about, and compare invite narrative responses that you can code and analyze.

One clear focus per question. "What did you like and dislike, and how could we improve?" asks three things. Respondents either answer one and ignore the others, or produce scattered responses that are hard to categorize. Split complex questions into separate prompts.

Visible purpose. Respondents answer better when they understand why you're asking. "We're redesigning the onboarding process—what was most confusing when you first started?" signals that their feedback will influence real decisions. Generic questions produce generic answers.

Loaded language. "How has this program benefited you?" assumes benefits exist. Some respondents experienced no benefits or even harm. The question structure prevents honest negative feedback. Better: "What's changed since you joined this program?"

Academic jargon. "Describe your phenomenological experience of organizational culture transformation." Respondents don't think in research terminology. Use plain language: "How has the culture at work changed—and how has that affected your day-to-day?"

Yes/no setups. "Do you have suggestions for improvement?" is technically open-ended but functions like yes/no. Respondents say "yes" or "no" and move on. Better: "What would you change about this process if you could redesign it?"

Vague abstractions. "What are your thoughts on our services?" could mean anything. Quality? Pricing? Availability? Friendliness of staff? Respondents guess at what you want and produce scattered responses. Better: "Which of our services has been most valuable to you—and why?"

The pattern is clear: effective open-ended questions guide focus while preserving flexibility. They make clear what you're asking without determining what respondents can say.

Customer feedback surveys need questions that surface both satisfaction drivers and friction points. Generic "how was your experience?" prompts produce platitudes. Specific questions reveal actionable insight.

What problem were you trying to solve when you chose our product?

Reveals actual use cases vs. what you assumed. Helps prioritize features based on real customer needs rather than designer assumptions.

Describe one task that's easier now than before using our product—and one that's still frustrating.

Balanced framing encourages honest feedback. The "easier" part confirms value. The "frustrating" part surfaces improvement opportunities.

If you could change one thing about our product tomorrow, what would create the most value for you?

Forces prioritization. You get one clear recommendation per respondent instead of wish lists that mix critical and trivial requests.

When did you almost give up on our product—and what made you stay?

Identifies critical retention moments. Understanding friction points and what overcomes them informs both product development and marketing messaging.

Compare our product to the solution you used before. What's better? What's worse?

Competitive context matters. Direct comparison reveals differentiation and gaps that abstract "satisfaction" ratings miss entirely.

Describe your interaction with our support team in enough detail that someone who wasn't there could understand what happened.

Specificity requirement produces usable feedback. Vague "they were helpful" responses don't improve training. Detailed stories do.

What did our team do that exceeded your expectations—or fell short?

Identifies both excellence and failure. The exceeds/falls short frame makes clear you want both positive and negative feedback, not just complaints.

If you were training our customer service team, what's one thing you'd emphasize based on your experience?

Empowering frame. Asking customers to act as trainers produces constructive, actionable feedback rather than venting.

How long did it take to resolve your issue—and how did that timeline affect your perception of our company?

Connects process (resolution time) to outcome (perception). Reveals whether speed matters more or less than quality for your customers.

What almost stopped you from completing your purchase?

Surfaces friction in checkout flows, pricing concerns, or information gaps. Only people who completed a purchase can answer, so this gives you conversion-barrier data from successful customers rather than from those who abandoned.

What information were you looking for that you couldn't find during sign-up?

Identifies gaps in onboarding content. Better than asking "was onboarding clear?" which produces yes/no responses with no actionable detail.

Describe the moment you understood the value of our product. What triggered that realization?

The "aha moment" determines whether customers stick around. Understanding when and how it happens helps you engineer it for more users.

What would have made your first week using our product more successful?

Retrospective questions work better than asking "what do you need?" in the moment. Users know what they needed once they've experienced the gap.

Employee feedback drives retention, engagement, and productivity. Questions need to feel safe enough for honesty while specific enough to produce change.

What energizes you most about your work here—and what drains you?

Balanced framing signals you want truth, not just positive feedback. Energy/drain language feels less formal than satisfaction ratings.

If you were CEO for a day, what's the first thing you'd change about how we operate?

Empowering hypothetical. Removes hierarchy barriers that prevent honest criticism. Reveals priorities by forcing singular focus.

Describe a recent moment when you felt proud to work here—or frustrated.

Concrete recent examples beat abstract opinions. Stories provide context that helps leadership understand not just what but why.

What would make you more effective at your job without requiring major budget or headcount changes?

Constraint sparks creativity. Unlimited resources produce wishlists. Constraints force prioritization of what actually matters most.

What's one thing we do that other companies should copy—and one they should avoid?

Reveals both strengths and weaknesses through comparison lens. The "others should copy" frame makes positive feedback feel less like flattery.

What's one thing your manager does that helps you succeed?

Specific and positive frame. Identifies coaching behaviors to replicate across managers. More useful than "rate your manager 1-10."

When do you feel most supported by leadership—and when do you feel most alone?

The contrast (most supported / most alone) produces specific situational insight. Helps leadership understand contextual failures, not just overall sentiment.

Describe a decision leadership made that you didn't understand—and what would have helped you understand it.

Non-accusatory framing (you didn't understand vs. they communicated poorly). Focus on what helps vs. what failed reduces defensiveness.

If you could add one hour per week with your manager, how would you use it?

Reveals unmet needs without directly criticizing current cadence. Shows what employees value in manager relationships.

What skill do you want to develop that your current role doesn't exercise?

Identifies skill-building opportunities and potential retention risks. Employees developing new skills elsewhere might be planning departures.

Describe a project or task you wish you could do more of—and one you'd delegate if you could.

Surfaces both engagement drivers (want more of) and energy drains (would delegate). Helps optimize role design.

What's blocking your career growth here—be specific.

"Be specific" matters. Without it, responses stay vague: "limited opportunities." With it, you get actionable barriers: "no clear path from IC to manager in my department."

If you left tomorrow, what would you tell your replacement to prioritize?

Reveals unspoken knowledge and informal processes. Also surfaces what employees think matters vs. what job descriptions emphasize.

What behavior is rewarded here that shouldn't be—and what behavior should be rewarded but isn't?

Reveals gap between stated and actual culture. The "shouldn't be rewarded" half is particularly valuable—identifies toxic patterns leadership might miss.

Describe a time when our values felt real—and a time when they felt like wall art.

Tests value authenticity through specific examples. Abstract "do our values matter?" questions produce defensive responses. Examples reveal truth.

What makes someone successful here—really?

The "really" signals you want honesty beyond the official answer. Surfaces informal success factors and political realities.

If a friend asked you what it's really like to work here, what would you say?

Conversational frame reduces corporate-speak. The "friend" context encourages authentic description over polished PR.

Program evaluation requires questions that surface both intended outcomes and unexpected effects. The goal is understanding what worked, what didn't, and why—not just confirming that your theory of change was correct.

What's changed for you since joining this program?

Neutral framing allows positive, negative, or neutral responses. Avoids the loaded "how has this improved your life?" structure that assumes success.

Describe one outcome that demonstrates this program's impact on your life.

Concrete evidence for stakeholder reports. "One outcome" forces prioritization. Stories persuade funders in ways aggregated data can't.

Three months from now, how will you know whether this program was worth your time?

Reveals participant-defined success metrics that might differ from official program goals. Surfaces what actually matters to your population.

Compare where you are now to where you were at program start—what's different?

Direct comparison frame. Helps participants identify changes they might not attribute to the program otherwise.

What outcome surprised you most—something you didn't expect when you started?

Discovers unintended effects. Programs rarely work exactly as designed. Unexpected outcomes often reveal opportunities for design improvements.

What barrier had the biggest impact on your progress—and how did you navigate it?

Surfaces both problems and participant-generated solutions. The "how did you navigate" half reveals adaptation strategies you can formalize and share.

What would have made it easier to participate in this program?

Retrospective questions work better than asking "what do you need?" in real time. Participants know what they needed once they've experienced the gap.

Describe a moment when you almost gave up—what made you continue?

Identifies critical moments in participant journey. Understanding what prevents dropout informs retention strategies.

What support did you expect but not receive?

Reveals misalignment between program promises and delivery. Shows where expectations weren't set correctly or where services fell short.

Which program element was most valuable—and which felt like wasted time?

Component-level feedback for optimization. Balanced frame encourages honest critique, not just gratitude.

What would you change about this program if you could redesign one element?

The "one element" constraint forces prioritization. You get each respondent's single highest-priority improvement rather than a laundry list.

If you were advising future participants, what would you tell them to prioritize?

Reveals informal success strategies participants developed. Often surfaces practices more valuable than official curriculum.

What did staff do that helped most—and what didn't help even though they tried?

Separates effective from well-intentioned but ineffective support. Improves staff training and resource allocation.

Healthcare and social services need questions that respect vulnerability while producing actionable insight. Overly clinical language creates distance. Overly casual language feels inappropriate. Balance matters.

What made it easier or harder to access our services?

Neutral frame. "Easier" confirms enablers. "Harder" surfaces barriers. Produces both sides without separate questions.

Describe the path you took from deciding you needed help to actually receiving it.

Process mapping through participant experience. Reveals friction points in intake, referral, scheduling, and navigation.

What information were you looking for that you couldn't find?

Identifies communication gaps without asking "was our communication clear?" (which produces defensive yes/no responses).

When did you feel lost in our system—and what would have helped you navigate?

Acknowledges that systems are often confusing. "What would have helped" frames forward, not backward with blame.

Describe your interaction with our team in enough detail that someone who wasn't there could understand what happened.

Specificity requirement produces usable feedback. Vague "they were nice" doesn't improve service. Stories do.

What did our team do that made you feel heard—or dismissed?

Interpersonal effectiveness matters as much as technical competence in human services. This question surfaces both.

Tell me about a moment when you felt respected by our staff—or disrespected.

Dignity is core to service quality. Concrete moments reveal systemic issues or excellence that abstract ratings miss.

If you could change how our staff interacts with clients, what would improve the experience?

Service design feedback from recipient perspective. Often reveals blind spots providers don't see.

What's changed in your life since you started receiving our services?

Neutral outcome question. Allows reporting no change, positive change, or negative unintended consequences.

Describe one way our services affected your daily life—for better or worse.

Concrete example requirement. The "for better or worse" balance encourages honest assessment.

What support made the biggest difference—and what didn't help even though it was provided?

Distinguishes effective from ineffective services. Informs resource reallocation decisions.

If a friend needed similar help, would you recommend our services—why or why not?

Borrows the recommendation framing of Net Promoter Score but keeps the answer open-ended. The "why or why not" produces explanatory data that numerical scores obscure.

Collecting 500 open-ended responses is easy. Analyzing them is where most teams fail. You have three realistic options: manual coding, AI-powered analysis, or representative sampling.

Traditional qualitative coding works for small samples. Read all responses, identify emergent themes, develop a codebook, apply codes consistently, analyze patterns.

Limitation: Quality degrades past 200 responses as coder fatigue and drift set in. Two coders improve reliability but double time investment.
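Whether a second coder actually improves reliability is usually checked with an agreement statistic. The sketch below uses Cohen's kappa, a common choice that this article does not itself name; the coder lists and theme labels are hypothetical, and it assumes each coder assigns exactly one code per response.

```python
from collections import Counter

def cohens_kappa(codes_a, codes_b):
    """Cohen's kappa for two coders who each assigned one code per response."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("Both coders must code the same non-empty set of responses.")
    n = len(codes_a)
    # Observed agreement: share of responses where both coders chose the same code.
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    # Chance agreement: probability of a match given each coder's code frequencies.
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # Both coders used one identical code throughout.
    return (observed - expected) / (1 - expected)

# Hypothetical codes from two coders for the same ten responses.
coder_1 = ["transport", "childcare", "transport", "tech", "childcare",
           "transport", "tech", "transport", "childcare", "tech"]
coder_2 = ["transport", "childcare", "tech", "tech", "childcare",
           "transport", "tech", "transport", "transport", "tech"]

kappa = cohens_kappa(coder_1, coder_2)
print(f"Cohen's kappa: {kappa:.2f}")
```

Recomputing kappa on a batch of double-coded responses every so often is one way to notice drift before it spreads through the dataset.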

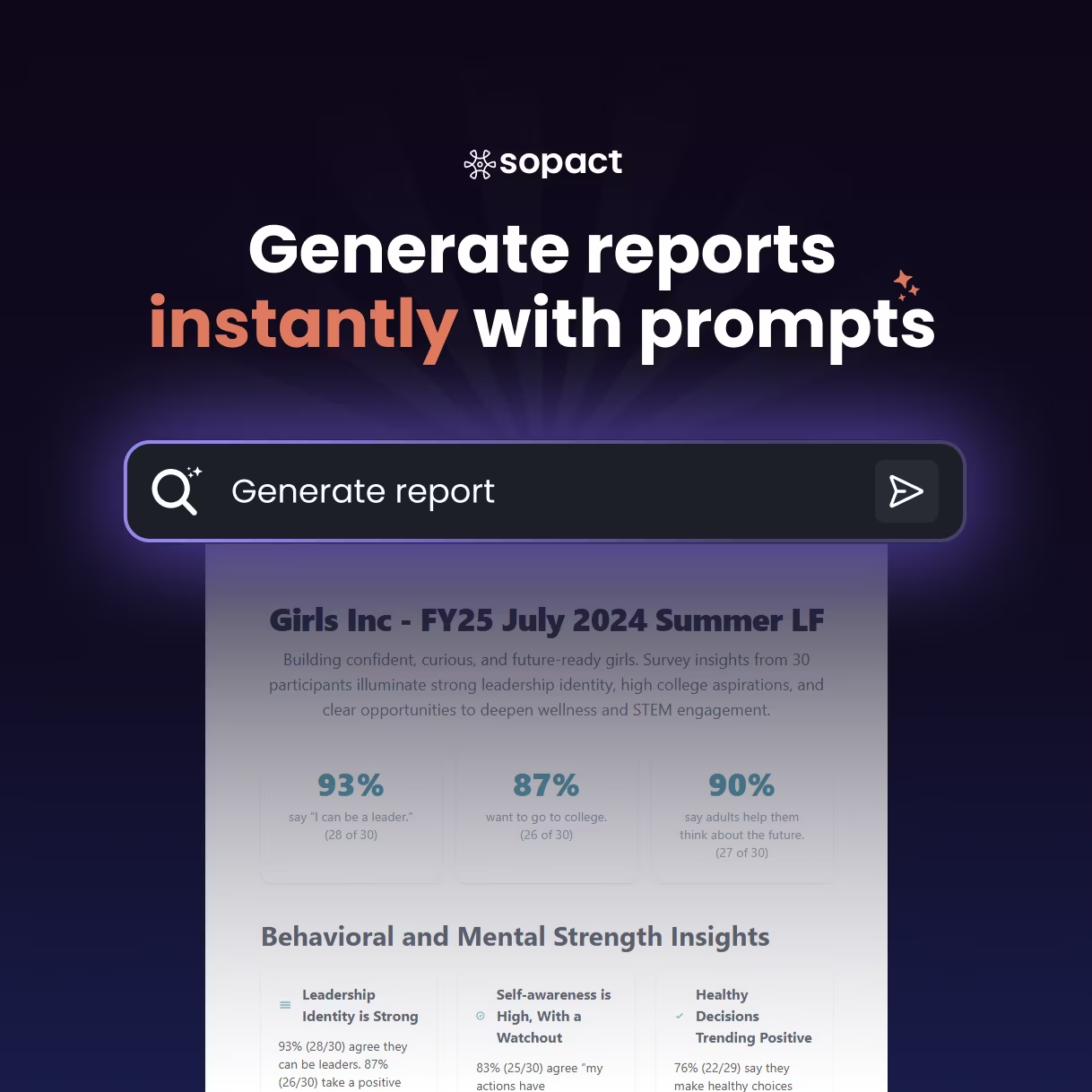

Platforms like Sopact Sense process thousands of open-ended responses automatically using Intelligent Cell. The system extracts themes, measures sentiment, applies deductive coding, and categorizes responses using custom frameworks.

Advantage: Handles 5,000 responses as easily as 50. Processing happens in minutes, not weeks. Consistency across all responses—no drift or fatigue.

Key capability: Sopact's Intelligent Column aggregates patterns across an entire variable. Ask 500 people "What barrier affected you most?" and Intelligent Column surfaces the top themes automatically: 42% transportation, 38% childcare, 31% technology access, etc.
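To make the aggregation idea concrete, here is a minimal sketch of tallying themes across one open-ended variable. It is not Sopact's implementation or API: the keyword codebook, function names, and sample responses are all hypothetical, and simple keyword matching stands in for real theme extraction. Because one response can mention several barriers, the shares can add up to more than 100%, as they do in the example figures above.

```python
import re
from collections import Counter

# Hypothetical codebook: theme -> keywords that signal it. A real codebook
# comes from reading responses, not from guessing keywords up front.
CODEBOOK = {
    "transportation": {"bus", "car", "ride", "commute"},
    "childcare": {"childcare", "daycare", "babysitter", "kids"},
    "technology access": {"laptop", "internet", "wifi", "computer"},
}

def tag_themes(response, codebook=CODEBOOK):
    """Return every theme with at least one keyword present as a whole word."""
    words = set(re.findall(r"[a-z]+", response.lower()))
    return {theme for theme, keywords in codebook.items() if words & keywords}

def theme_shares(responses, codebook=CODEBOOK):
    """Percent of responses mentioning each theme, highest share first."""
    if not responses:
        return []
    counts = Counter()
    for response in responses:
        counts.update(tag_themes(response, codebook))
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [(theme, round(100 * n / len(responses))) for theme, n in ranked]

responses = [
    "The bus stopped running before class ended.",
    "No daycare on weekends, and my laptop broke.",
    "I had no internet at home.",
    "My car broke down and I couldn't find a babysitter.",
    "Gas for the commute costs too much.",
]
for theme, pct in theme_shares(responses):
    print(f"{theme}: {pct}%")
```

Matching on whole words rather than substrings matters here: a substring check would find "car" inside "daycare" and inflate the transportation count.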

If you collect 500 responses but can only code 100 manually, sample randomly and analyze that subset. Methodologically sound as long as you're transparent about sampling in reports.

Trade-off: Lose granularity but maintain analytical rigor. Better than skimming 500 responses without systematic coding.
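A simple random draw for the sampling option might look like the sketch below. The response IDs, sample size, and seed are illustrative assumptions; the point is that a fixed seed makes the draw reproducible, which supports the transparency about sampling that reports need.

```python
import random

def draw_coding_sample(response_ids, sample_size, seed):
    """Draw a simple random sample of response IDs without replacement."""
    if sample_size > len(response_ids):
        raise ValueError("Sample size cannot exceed the number of responses.")
    rng = random.Random(seed)  # Fixed seed so the same draw can be repeated.
    return sorted(rng.sample(response_ids, sample_size))

# Hypothetical IDs for 500 collected responses.
all_ids = list(range(1, 501))
sample_ids = draw_coding_sample(all_ids, sample_size=100, seed=2024)
print(f"Coding {len(sample_ids)} of {len(all_ids)} responses (seed=2024)")
```

Recording the seed and sample size in the methods note lets anyone reproduce exactly which responses were coded.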

Bad: "What did you like and dislike about the program, and how could we improve?"

This asks three things. Respondents answer one part and ignore others, or produce disorganized responses that jump between topics.

Fix: "What would you change about this program if you could redesign one element?"

Single focus. Forces prioritization. Produces cleaner responses that are easier to code.

Bad: "How has our program improved your outcomes?"

Assumes improvement occurred. Respondents who didn't improve can't answer honestly without contradicting the question's premise.

Fix: "What's changed in your outcomes since joining this program?"

Neutral. Allows positive, negative, or no change responses.

Bad: "What do you think about our organization?"

Too broad. Could mean anything: services, staff, facilities, pricing, impact, reputation. Responses scatter across dimensions.

Fix: "What's one thing our organization does better than others—and one thing we should improve?"

Specific comparative frame. Bounded scope. Produces focused feedback.

Bad: "Do you have any suggestions for improvement?"

Technically open-ended but functionally closed. Most people answer "yes" or "no" without elaborating.

Fix: "What's one change that would make our service more valuable to you?"

Assumes suggestions exist. Asks directly for the content rather than whether content exists.

Bad: "Describe your phenomenological experience of organizational culture transformation."

Respondents don't think in research terminology. Question creates confusion and discourages response.

Fix: "How has the culture here changed—and how has that affected your day-to-day work?"

Plain language. Concrete focus. Accessible to all education levels.

Bad: Question 1: "What worked?" Question 2: "What didn't work?" Question 3: "What would you change?"

These feel repetitive to respondents even though they're distinct analytically. Response quality drops on questions 2 and 3.

Fix: "Which program element was most valuable—and which felt like wasted time?"

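Combined frame. One balanced question covers what worked and what didn't, so respondents don't feel they are answering the same thing three times.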

Not every insight requires open-ended questions. Sometimes closed formats work better. The decision depends on what you'll do with the data.

Discovery and exploration. You don't know what matters yet, so you can't provide meaningful answer choices. Pilot surveys, exploratory research, and initial needs assessment all demand open-ended questions.

Causation and mechanism. Numbers show that satisfaction dropped 15%. Open-ended responses explain why: "The new process doubled my workload without training." Closed questions identify change. Open questions explain it.

Evidence and stories. Stakeholders need proof. "92% reported improved confidence" is a claim. "I negotiated a 20% raise using Module 3 skills" is evidence. Both matter, but stories persuade.

Context and nuance. Reality doesn't always fit predetermined categories. Closed questions force people into your boxes. Open questions let them describe complexity.

Validation before scaling. Before creating multiple-choice options, run open-ended pilots. If 80% mention transportation barriers and nobody mentions time constraints, your planned closed-ended options need revision.

Quantification and comparison. "Rate confidence 1-10" produces numbers you can average, track, and compare. Open descriptions of confidence can't generate those metrics.

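Hypothesis testing. If you believe peer support drives retention, closed questions test it at scale. "Did you participate in peer learning?" paired with retention data shows whether participation and retention actually move together. That association supports or undermines the hypothesis, though on its own it does not prove causation.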

Trend tracking. Ask the same closed question quarterly and you get trendlines. Confidence at 5.2, then 6.8, then 7.4 shows clear progress. Open-ended responses vary too much structurally to track reliably.

Large sample efficiency. Analyzing 5,000 closed responses takes minutes. Analyzing 5,000 open responses takes AI tools or weeks of manual coding. When scale matters without sophisticated analysis tools, closed wins.

Reducing respondent burden. Clicking takes seconds. Writing takes minutes. When time is limited or survey length matters, closed questions respect constraints.

Most effective surveys combine both strategically:

Pattern: Closed question → Open follow-up

"Rate satisfaction 1-10" → "What most influenced your rating?"

You get quantifiable metrics plus context.
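One way to use the paired data is to read the follow-up comments grouped by rating band, so the number and its explanation stay together. This is a sketch only: the answers are invented, and the cut points in `band` are illustrative rather than any standard.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical paired answers: (rating 1-10, reply to "What most influenced your rating?")
answers = [
    (9, "Setup took five minutes."),
    (3, "Support never replied to my ticket."),
    (8, "The reports save me an hour a week."),
    (2, "The export feature keeps failing."),
    (6, ""),
]

def band(rating):
    """Bucket a 1-10 rating; these cut points are illustrative, not standard."""
    if rating <= 4:
        return "low"
    if rating <= 7:
        return "middle"
    return "high"

comments_by_band = defaultdict(list)
for rating, comment in answers:
    if comment.strip():  # Skip blank follow-ups.
        comments_by_band[band(rating)].append(comment)

average_rating = mean(rating for rating, _ in answers)
print(f"Average rating: {average_rating:.1f}")
for name in ("low", "middle", "high"):
    print(name, comments_by_band.get(name, []))
```

Coding the "low" comments first usually gives the fastest view of what is dragging the average down.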

Pattern: Open pilot → Closed scale survey

Pilot: "What barriers did you face?" (open)

Main survey: "Which barriers affected you?" (closed, based on pilot themes)

Grounds categories in real data, then quantifies at scale.

Pattern: Conditional open questions

"Did you face barriers? (Yes/No)" → If Yes: "Describe the barrier that had biggest impact"

Only people affected provide details, reducing burden on others.
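The conditional pattern amounts to a small piece of skip logic. The sketch below is not tied to any survey platform; the function name and the `faced_barriers` and `barrier_detail` keys are hypothetical.

```python
def next_question(answers):
    """Return the next prompt to show, or None when this branch is finished."""
    faced = answers.get("faced_barriers")
    if faced is None:
        return "Did you face barriers? (Yes/No)"
    # Only respondents who answered Yes see the open-ended follow-up.
    if faced == "Yes" and "barrier_detail" not in answers:
        return "Describe the barrier that had the biggest impact."
    return None

print(next_question({}))
print(next_question({"faced_barriers": "Yes"}))
print(next_question({"faced_barriers": "No"}))
```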

The difference between open-ended questions that produce insight and those that waste time comes down to three elements: specificity, framing, and purpose.

Specificity creates boundaries without constraining thinking. "What changed?" is too broad. "What's changed in your confidence since starting this program—and what drove that change?" gives focus while preserving flexibility. Respondents know what you're asking. You get responses you can code.

Framing signals what's welcome. "How has this improved your life?" allows only positive responses. "What's changed since you joined?" allows positive, negative, or neutral. Neutral framing produces honest data. Leading framing produces social desirability bias.

Purpose guides design. Questions that exist to fill survey slots produce noise. Questions that serve specific analytical needs produce insight. Before writing any open-ended question, ask: What will I do with responses? How will this inform decisions?

The templates in this article work because they've been tested across thousands of surveys. But templates are starting points, not finished questions. Adapt them to your context. Test them with small groups. Refine based on the quality of responses you get.

Most importantly: Only ask open-ended questions you can analyze. Whether through manual coding, AI tools like Sopact's Intelligent Cell, or representative sampling, you need a plan for turning responses into insight. Collecting 500 open-ended responses and never analyzing them wastes 500 people's time.

Good open-ended questions make respondents want to answer. They create clarity without control. They invite stories without leading. They produce data that changes what you do, not just what you report.

That's the difference between questions that extract and questions that explore. One treats respondents as data sources. The other treats them as partners in discovery. Choose accordingly.

Open-Ended Questions: Common Questions

Answers to the most frequent questions about writing, using, and analyzing open-ended survey questions.

Q1. How long should responses to open-ended questions be?

Don't specify required length—it constrains thinking and encourages padding. Instead, design questions that naturally elicit the detail level you need. Specific questions ("Describe one moment when...") produce focused responses. Vague questions ("Tell us your thoughts...") produce rambling ones.

Most valuable responses run 2-5 sentences or 50-150 words. Anything shorter lacks sufficient detail. Anything longer often wanders off topic. Your question structure determines this more than any instruction you provide.

If you're getting one-word responses, your question is too broad or feels too optional. If you're getting paragraphs of unfocused writing, your question lacks boundaries. The fix is question design, not word counts.
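A quick length check on pilot responses can show which of these two problems a question has. This is a minimal sketch: the function name is hypothetical, the 50 and 150 word defaults come from the range quoted above, and splitting on whitespace is only a rough word count.

```python
from statistics import median

def length_report(pilot_responses, low=50, high=150):
    """Summarize word counts for pilot responses against a target range."""
    if not pilot_responses:
        raise ValueError("Need at least one pilot response.")
    counts = [len(response.split()) for response in pilot_responses]
    return {
        "median_words": median(counts),
        "too_short": sum(count < low for count in counts),
        "too_long": sum(count > high for count in counts),
    }

# Synthetic stand-ins for three pilot responses of 10, 80, and 200 words.
pilot = ["word " * 10, "word " * 80, "word " * 200]
report = length_report(pilot)
print(report)
```

Many short responses suggest tightening or motivating the question; many long ones suggest adding boundaries, in line with the guidance above.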

Best practice: Test questions with 3-5 people before full deployment. Their response length tells you whether your question structure works.

Q2. Should I make open-ended questions required or optional?

Make them optional unless the response is critical to your primary research question. Required open-ended questions increase survey abandonment significantly because some respondents genuinely don't have thoughtful answers or don't have time to write them.

Optional fields still generate valuable data. Typically 30-50% of respondents answer optional text fields, and those responses often come from your most engaged participants—exactly the people whose detailed feedback matters most.

One exception works well: conditionally required follow-ups. If someone rates satisfaction as 1-2 (very dissatisfied), requiring "What drove your rating?" makes sense because you need to understand critical failures. This maintains high completion while getting detail where it matters.

Rule of thumb: Require closed-ended questions when you need comparable data across everyone. Keep open-ended questions optional unless the response is essential to your core research question.

Q3. What's a good example of an open-ended question for customer feedback?

Strong example: "Describe one moment when our product made your work easier—or harder." This works because it has specific focus (work impact), asks for concrete examples (one moment), and uses balanced framing (easier or harder) that encourages honest feedback.

Weak example: "Tell us about your experience with our product." Too vague. "Experience" could mean purchase process, product quality, customer service, pricing, or packaging. Responses scatter across dimensions, making systematic analysis nearly impossible.

The pattern that works: Start with an action verb (describe, explain, compare), add specificity (one moment, biggest impact, most valuable), include a balanced frame (easier/harder, helpful/unhelpful, worked/didn't work), and connect to outcomes (made work easier, affected your decision, changed your process).

Test by asking: Could 100 people interpret this question 100 different ways? If yes, add specificity. Could respondents only give positive answers? If yes, balance the frame.

Q4. How do I analyze open-ended responses without spending weeks coding manually?

You have three realistic approaches: limit response volume, use AI-powered analysis, or analyze a representative sample.

Manual coding works for up to 200 responses before quality degrades from coder fatigue. If you're coding by hand, either keep samples small or limit open-ended questions to 1-2 per survey so total volume stays manageable.

AI-powered platforms like Sopact Sense change the equation completely. Intelligent Cell processes thousands of open-ended responses automatically—extracting themes, measuring sentiment, applying deductive coding, and categorizing responses. What took weeks happens in minutes, with human review for quality rather than starting from scratch.

If you must code manually but collected 500+ responses, analyze a randomly selected sample of 100-150. This is methodologically sound as long as you're transparent about sampling in reports. Better to thoroughly analyze a subset than superficially skim everything.

Critical rule: Don't collect open-ended responses you can't analyze. That wastes respondent time and produces data you'll ignore. Match question volume to your actual analysis capacity.

Q5. What makes a good open-ended question for employee engagement surveys?

Strong example: "What energizes you most about your work here—and what drains you?" This works because it uses balanced framing (energizes/drains), feels less formal than satisfaction ratings, and produces both positive and negative feedback in context of each other.

Another strong example: "If you were CEO for a day, what's the first thing you'd change?" The empowering hypothetical removes hierarchy barriers that prevent honest criticism. The "first thing" constraint forces prioritization rather than wish lists.

Weak example: "What are your thoughts on workplace culture?" Too abstract. "Culture" means different things to different people, and "thoughts" produces scattered responses across 20 dimensions. Better to ask about specific, observable behaviors: "What behavior is rewarded here that shouldn't be—and what should be rewarded but isn't?"

The key to employee questions is creating safety for honesty while maintaining specificity. Abstract questions feel safer but produce useless data. Specific questions with empowering or hypothetical frames ("if you were advising a friend," "if you could redesign") balance truth with psychological safety.

Test questions by asking yourself: Would I answer this honestly if my manager could read my response? If not, adjust framing to create more psychological distance.

Q6. Can I use open-ended questions for program evaluation at scale?

Yes, but only if you have AI-powered analysis tools or can accept analyzing a sample rather than all responses. Traditional manual coding doesn't scale past 200 responses realistically.

For programs serving 500+ participants, use this approach: Pair every critical quantitative metric with one open-ended follow-up. "Rate confidence 1-10" followed by "What factors most influenced your rating?" This gives you both trackable numbers and interpretable context.

Process open-ended responses through AI tools like Sopact's Intelligent Column, which aggregates patterns across all responses automatically. If 500 people answer "What barrier affected you most?", Intelligent Column surfaces dominant themes: 42% transportation, 38% childcare, 31% technology access, etc.—without manual coding.

The evaluation power comes from integration: use closed-ended questions to establish what changed (confidence increased 2.1 points average), then use open-ended responses to understand why it changed (participants cite peer networks and mentor support as primary drivers).

Scale isn't the barrier anymore—analysis capacity is. With the right tools, open-ended questions work as well at 5,000 participants as at 50. Without those tools, keep open-ended questions minimal or accept sampling approaches.