
Learn how to improve customer experience with clean data collection, AI-powered analysis, and continuous feedback workflows that remove the need for manual deduplication.

Turn fragmented feedback into continuous learning—without the months-long analysis cycle.

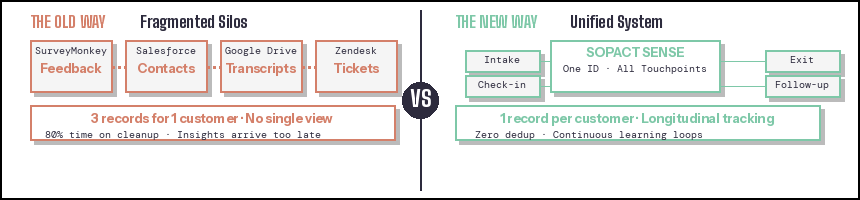

Most teams collect customer feedback they can never use when it matters. Surveys live in one tool, CRM data sits in another, qualitative responses pile up unread—and by the time insights arrive, customer needs have already shifted. The result? Three disconnected records for a single customer and no way to see the full journey.

This fragmentation isn't just an inconvenience—it's a structural failure that compounds over time. Teams spend 80% of their effort cleaning and reconciling data instead of acting on it. Analysts waste weeks preparing spreadsheets for analysis instead of finding patterns that improve experiences. Open-ended responses, the richest source of "why" behind every satisfaction score, remain buried because manual coding takes too long. And quarterly reports describe problems you could have fixed in week one.

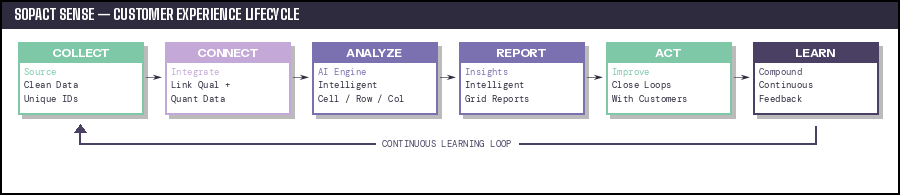

Sopact Sense eliminates this cycle entirely. By assigning unique IDs at first contact, connecting qualitative stories with quantitative metrics at the source, and applying AI-powered analysis (Intelligent Cell, Row, Column, and Grid) in real time, it transforms customer feedback from a static reporting exercise into a continuous learning system. Every touchpoint connects. Every open-ended response becomes measurable. Every stakeholder gets live insights instead of stale PDFs.

The impact is immediate and measurable: analysis cycles drop from 6–8 weeks to minutes, manual cleanup shrinks by 90%, review bottlenecks compress from 12 weeks to 3, and teams finally understand the "why" behind NPS shifts—driving an 18-point improvement through targeted action instead of guesswork.

This article walks you through exactly how to design customer experience systems that keep data clean at the source, shorten analysis from months to minutes, and make every customer voice measurable from day one.


Different data collection tools create information silos that never connect. Customer feedback lives in SurveyMonkey. Contact details sit in Salesforce. Interview transcripts pile up in Google Drive. Service tickets exist in Zendesk. Each system generates its own ID structure, making it nearly impossible to track the same customer across touchpoints.

When a customer submits feedback through three channels, you get three disconnected records. No single view. No way to measure journey-level patterns. Analysis becomes an archaeological dig through spreadsheets, manually matching names and guessing which "John Smith" is which.

This fragmentation doesn't just waste analyst time. It actively prevents the insights that matter most: understanding how experiences evolve, identifying which touchpoints drive satisfaction, connecting what customers say with what they actually do.

Even when teams manage to export data from every source, the real work has only begun: deduplicating records, standardizing formats, fixing typos, filling gaps. Analysts spend weeks preparing data for analysis instead of finding patterns that improve experiences.

Traditional survey platforms don't prevent duplicates at collection. They don't validate entries in real time. They don't maintain unique IDs that persist across forms. The burden falls entirely on your team—after the damage is done.
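Here is a minimal Python sketch of real-time validation. The checks run before a submission is stored, so a bad entry is rejected while the respondent can still fix it. The `Submission` fields, the 0-10 scale, and the rule that low scores require a comment are illustrative assumptions. They do not describe any particular platform's validation.

```python
import re
from dataclasses import dataclass

# Hypothetical rule: a loose "something@something.tld" shape check.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


@dataclass
class Submission:
    email: str
    satisfaction: int  # assumed 0-10 scale
    comment: str


def validate(sub: Submission) -> list[str]:
    """Return a list of problems; an empty list means the entry can be saved."""
    errors = []
    if not EMAIL_PATTERN.match(sub.email.strip()):
        errors.append("email: not a valid address")
    if not 0 <= sub.satisfaction <= 10:
        errors.append("satisfaction: must be between 0 and 10")
    # Hypothetical rule: ask for the "why" whenever the score is low.
    if sub.satisfaction <= 6 and not sub.comment.strip():
        errors.append("comment: please tell us what drove a low score")
    return errors


print(validate(Submission("ana@example.org", 3, "")))
```

Because the check runs at entry time, the respondent corrects the problem immediately. Nobody has to clean it up weeks later.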

Surveys capture numbers easily but miss the story. Why did NPS drop? What's causing churn? Which features matter most? The answers live in open-ended responses that most teams never analyze at scale.

Sentiment analysis tools exist, but they're shallow—labeling feedback as "positive" or "negative" without extracting actionable themes. Large inputs like interview transcripts, support documents, or detailed feedback forms remain completely untouched. The richest insights stay buried because analyzing them manually takes too long.

By the time quarterly reports arrive, the experiences they describe are ancient history. Customers who struggled three months ago have already churned or adapted. Problems that could have been fixed in week one persist through entire quarters because feedback loops move too slowly.

Traditional approaches treat customer experience measurement as episodic: collect data, close the survey, spend weeks analyzing, present findings, plan changes, repeat next quarter. Meanwhile, customer expectations evolve daily.

Clean data collection isn't about having pristine spreadsheets. It's about designing feedback systems where accuracy, connection, and analysis-readiness are built into the collection process itself—not fixed afterward.

Instead of generating a new record every time someone submits feedback, clean systems assign each customer a persistent unique ID from first contact. That same ID follows them through intake surveys, mid-program check-ins, exit feedback, and follow-up touchpoints.

When a customer updates their information, you correct the original record instead of creating a duplicate. When you compare pre- and post-program responses, you track actual individuals instead of guessing from names and emails. This eliminates deduplication work entirely and makes longitudinal analysis automatic.
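This is a minimal sketch of the idea. It assumes an in-memory store keyed by a persistent customer ID; the function names and stage labels are hypothetical. A resubmission for the same stage overwrites the earlier value instead of adding a row. The pre/post comparison then matches on ID rather than on names or emails.

```python
# Store shape: {customer_id: {stage: score}}, one entry per persistent ID.
Store = dict[str, dict[str, int]]


def record_response(store: Store, customer_id: str, stage: str, score: int) -> None:
    """Save a score for this customer at this stage.

    Resubmitting the same stage corrects the existing value rather than
    adding a second record, so there is nothing to deduplicate later.
    """
    store.setdefault(customer_id, {})[stage] = score


def pre_post_change(store: Store) -> dict[str, int]:
    """Score change per customer, for customers who have both stages."""
    return {
        cid: stages["post"] - stages["pre"]
        for cid, stages in store.items()
        if "pre" in stages and "post" in stages
    }


store: Store = {}
record_response(store, "C-001", "pre", 4)
record_response(store, "C-001", "post", 6)
record_response(store, "C-001", "post", 7)  # correction overwrites, no duplicate
record_response(store, "C-002", "pre", 5)   # no post response yet
print(pre_post_change(store))  # {'C-001': 3}
```

Customers with only one of the two stages are left out of the comparison rather than guessed at.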

Traditional surveys feel like interrogations: "Tell us everything right now, then we'll disappear for six months." Clean systems treat feedback as an ongoing conversation, where customers can update responses, add context, or flag issues as experiences unfold.

Each submission gets a unique link that allows corrections and additions without creating new records. Customers who initially provide incomplete information can return and fill gaps. Teams can follow up on specific responses without sending entirely new surveys.
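The Python sketch below shows one way to implement per-submission edit links. It assumes each submission gets an unguessable random token from the standard library's `secrets.token_urlsafe`. The `SubmissionStore` class and the `forms.example.com` base URL are placeholders for illustration only.

```python
import secrets

# Placeholder base URL for illustration; not a real product endpoint.
BASE_URL = "https://forms.example.com/edit/"


class SubmissionStore:
    """Hypothetical store in which each submission has a private edit link."""

    def __init__(self) -> None:
        self._by_token: dict[str, dict] = {}

    def submit(self, customer_id: str, answers: dict) -> str:
        """Save a new submission and return its unique edit link."""
        token = secrets.token_urlsafe(16)  # 16 random bytes -> 22 URL-safe chars
        self._by_token[token] = {"customer_id": customer_id, "answers": dict(answers)}
        return BASE_URL + token

    def update(self, link: str, changes: dict) -> dict:
        """Apply corrections or additions through the edit link.

        The existing record is modified in place, so no new record is
        created. Raises KeyError for an unknown link.
        """
        token = link.removeprefix(BASE_URL)
        record = self._by_token[token]
        record["answers"].update(changes)
        return record

    def __len__(self) -> int:
        return len(self._by_token)


store = SubmissionStore()
link = store.submit("C-001", {"satisfaction": 6})
store.update(link, {"comment": "Onboarding steps were unclear"})
print(len(store))  # 1
```

Edits are keyed on a random token rather than an email or a sequential number. That way, only someone holding the link can change that record.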

This continuity transforms data quality. Instead of accepting whatever information arrives in one-time submissions, you maintain living records that improve over time.

Most platforms force a choice: capture structured metrics or collect open-ended stories. Clean systems integrate both from the start, linking every numeric rating with the narrative that explains it.

When a customer rates satisfaction as 3/10 and explains why in an open text field, those data points share the same unique ID. You can analyze satisfaction scores, then immediately surface the stories behind low ratings—without switching tools or manually matching records.
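Because the rating and its explanation live in the same record, surfacing the stories behind low scores is a simple filter. Here is a sketch that assumes a 0-10 scale and a hypothetical cut-off of 4 or below:

```python
from dataclasses import dataclass


@dataclass
class Response:
    customer_id: str
    satisfaction: int  # assumed 0-10 scale
    why: str           # open-text explanation from the same submission


def stories_behind_low_scores(responses: list[Response], threshold: int = 4) -> list[tuple[str, int, str]]:
    """Return (customer_id, score, explanation) for low ratings, lowest first."""
    low = [r for r in responses if r.satisfaction <= threshold]
    return [(r.customer_id, r.satisfaction, r.why) for r in sorted(low, key=lambda r: r.satisfaction)]


responses = [
    Response("C-1", 3, "Setup took two weeks"),
    Response("C-2", 9, "Great support"),
    Response("C-3", 1, "Could not log in"),
]
print(stories_behind_low_scores(responses))
```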

This integration makes mixed-methods analysis effortless. You're not exporting data from surveys, transcripts from interviews, and metrics from CRM, then spending weeks aligning them. Everything connects because it was collected as a unified system.

Once data stays clean and connected at collection, AI can extract insights that traditional analytics miss entirely—transforming feedback from static reports into continuous learning loops.

Not every insight fits into a multiple-choice question. Open-ended responses like "How has this program changed your confidence?" contain rich information that survey scales can't capture. Intelligent Cell extracts structured insights from unstructured text in real time.

Instead of manually reading through 500 responses to identify themes, Intelligent Cell processes each submission as it arrives—categorizing confidence changes, identifying specific skill gains, flagging concerns that need follow-up. The analysis appears instantly alongside the original response, ready for reporting without any manual coding.
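A deliberately simple Python stand-in can illustrate the shape of what Intelligent Cell produces: one structured row per free-text answer, computed as the answer arrives. This sketch uses keyword matching, not AI, and the theme names and keywords are hypothetical.

```python
# Hypothetical theme -> keyword map (stand-in for an AI extraction step).
THEMES = {
    "confidence_gain": ["more confident", "confidence"],
    "skill_gain": ["learned", "new skill"],
    "needs_follow_up": ["confused", "stuck", "frustrated"],
}


def tag_response(text: str) -> dict[str, bool]:
    """Turn one free-text answer into a row of theme flags."""
    lowered = text.lower()
    return {theme: any(k in lowered for k in keywords) for theme, keywords in THEMES.items()}


print(tag_response("I learned Excel and feel more confident"))
print(tag_response("Still stuck on the final project"))
```

Keyword rules break on phrasing such as "I still lack confidence", which this function would wrongly count as a confidence gain. Handling negation and context is the reason to use a language model for this step.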

This transforms qualitative data from "nice to have context" into measurable metrics. You can track how many customers mention specific pain points, measure sentiment shifts over time, or identify which features drive satisfaction—all from open text that previously required weeks of manual analysis.

Customer experience rarely fits into single touchpoints. Understanding satisfaction means seeing the full arc: initial expectations, actual experiences, evolving needs, final outcomes. Intelligent Row synthesizes multiple data points into plain-language summaries of each customer's journey.

When evaluating program effectiveness, instead of manually reading through pre, mid, and post surveys for each participant, Intelligent Row generates summaries like: "Entered with low technical confidence, showed significant skill growth during training, landed employment within target timeline, noted support quality as primary success factor."
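Intelligent Row generates such summaries with AI. To illustrate only the inputs and output, here is a rule-based Python template that turns connected pre/post data into one sentence. The 1-10 confidence scale, the cut-offs, and the wording are all assumptions.

```python
def summarize_journey(pre_conf: int, post_conf: int, employed: bool, top_factor: str) -> str:
    """Rule-based stand-in for an AI journey summary (1-10 confidence scale assumed)."""
    start = "low" if pre_conf <= 3 else "moderate" if pre_conf <= 6 else "high"
    change = post_conf - pre_conf
    growth = "significant" if change >= 3 else "some" if change > 0 else "no"
    outcome = "landed employment" if employed else "was still job-seeking at exit"
    return (
        f"Entered with {start} technical confidence, showed {growth} skill growth, "
        f"{outcome}, noted {top_factor} as primary success factor."
    )


print(summarize_journey(2, 7, True, "support quality"))
```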

These summaries don't replace detailed data—they make it navigable. You can quickly identify patterns across hundreds of customer journeys, then drill into specific cases that represent trends or exceptions.

Individual feedback matters, but improvement requires understanding what's common across many customers. Intelligent Column aggregates responses to surface themes that appear repeatedly—identifying the frequent barriers, consistent pain points, or common success factors that drive overall experience quality.

When analyzing "What was your biggest challenge?" across 200 customers, Intelligent Column identifies that 45% mention unclear onboarding, 30% cite lack of ongoing support, and 25% flag technical complexity. These patterns become immediately visible without manual theme coding or weeks of analysis.
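Once each response carries theme labels, the frequency step itself is short. This Python sketch assumes the labels already exist; it does not reproduce Intelligent Column's grouping of synonyms and related concepts. It reports the share of respondents mentioning each theme.

```python
from collections import Counter


def theme_shares(tagged: list[set[str]]) -> dict[str, float]:
    """Percentage of respondents mentioning each theme, most common first."""
    if not tagged:
        return {}
    counts = Counter(theme for themes in tagged for theme in themes)
    return {theme: round(100 * c / len(tagged), 1) for theme, c in counts.most_common()}


tagged = [
    {"unclear onboarding"},
    {"unclear onboarding", "lack of support"},
    {"technical complexity"},
    {"unclear onboarding"},
]
print(theme_shares(tagged))
```

One respondent can mention several themes, so each share is measured against the total number of respondents. The shares therefore need not sum to 100%.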

This capability extends beyond simple word clouds or frequency counts. Intelligent Column understands context, recognizes synonyms, and groups related concepts—providing the depth of qualitative analysis at the scale of quantitative metrics.

Analysis doesn't improve experiences—action does. Intelligent Grid transforms collected data into designer-quality reports that stakeholders can actually use, generated in minutes instead of weeks.

Instead of exporting spreadsheets, creating pivot tables, building charts, and formatting presentations, you describe what story the data should tell in plain English: "Show how customer satisfaction changed from intake to completion, highlight key success factors, identify remaining barriers, include representative quotes."

Intelligent Grid processes all connected data—quantitative metrics, qualitative themes, demographic patterns, longitudinal changes—and generates a comprehensive report formatted for stakeholders. The report includes visualizations, narrative summaries, specific recommendations, and supporting evidence, ready to share immediately.

More importantly, these reports stay live. As new feedback arrives, insights update automatically. There's no "analysis freeze" while you prepare quarterly reports. Stakeholders see current patterns, emerging trends, and real-time progress.

The goal isn't better reports. It's creating feedback loops that actually close—where customer input drives immediate improvement, improvements get tested with real customers, and learning compounds over time.

Before launching any survey, establish a lightweight customer object that assigns unique IDs from first contact. This doesn't require enterprise CRM complexity—a simple contact database that tracks: name, contact information, customer segment, key dates, and that persistent unique ID.

Every subsequent interaction references this ID. Intake surveys, satisfaction check-ins, service interactions, and follow-up touchpoints all connect to the same customer record. You're building a unified view from day one instead of trying to reconstruct it later.

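Replace standalone surveys with connected feedback workflows. Each touchpoint should reference the customer's persistent ID, so every new response attaches to the existing record instead of creating an isolated submission.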

This connection transforms isolated data points into continuous customer stories. You're not comparing "Group A at Time 1" with "Group B at Time 2"—you're tracking how specific individuals experience change over time.

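Don't limit open-ended questions to "Additional comments?" fields that most customers ignore. Embed them strategically, for example directly after a rating question, so that every score arrives with the customer's own explanation of it.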

Then configure Intelligent Cell to extract structured insights from these responses automatically. You get the depth of qualitative research at the scale of quantitative surveys—without manual coding overhead.

Analysis shouldn't wait until data collection ends. Configure Intelligent Column to identify emerging patterns as feedback arrives, set thresholds for automated alerts when satisfaction drops or specific issues spike, create Intelligent Grid reports that update continuously as new responses come in, and enable stakeholders to explore live dashboards instead of static PDFs.
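Threshold alerts of this kind take only a few lines of Python. The window size, the one-point drop, and the 20% spike share are hypothetical defaults chosen for illustration. Each response is assumed to arrive with a satisfaction score and a set of theme labels.

```python
from collections import Counter
from statistics import mean


def check_alerts(scores: list[int], themes: list[set[str]], baseline: float,
                 drop: float = 1.0, spike_share: float = 0.2, window: int = 20) -> list[str]:
    """Return alert messages based on the most recent `window` responses.

    scores: satisfaction scores in arrival order.
    themes: one set of theme labels per response, in the same order.
    baseline: the average satisfaction considered normal.
    """
    alerts = []
    recent_scores = scores[-window:]
    if recent_scores and mean(recent_scores) <= baseline - drop:
        alerts.append(f"satisfaction: recent average {mean(recent_scores):.1f} vs baseline {baseline:.1f}")
    recent_themes = themes[-window:]
    counts = Counter(t for ts in recent_themes for t in ts)
    for theme, c in counts.items():
        if c / len(recent_themes) >= spike_share:
            alerts.append(f"issue spike: '{theme}' in {c} of {len(recent_themes)} recent responses")
    return alerts


scores = [8] * 10 + [5] * 5
themes = [set() for _ in range(10)] + [{"login failure"} for _ in range(5)]
for alert in check_alerts(scores, themes, baseline=8.0, window=5):
    print(alert)
```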

This shift from episodic reporting to continuous monitoring changes how teams respond to customer feedback. Problems surface while there's still time to fix them. Improvements get validated immediately. Learning accumulates instead of resetting each quarter.

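The ultimate test of clean data collection: can you return to specific customers to follow up on their feedback? With unique links and centralized records, you can request additional detail on a specific response, acknowledge individual input, and show customers how their feedback drove changes.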

This responsiveness transforms customer perception. Feedback doesn't disappear into a void—it visibly influences experiences. That shift from "we collected your input" to "we acted on what you told us" builds trust that episodic surveys never achieve.

Organizations implementing clean data collection with AI-powered analysis see fundamental shifts in how they improve customer experiences.

A workforce development nonprofit previously spent 6-8 weeks per cohort manually analyzing pre/post surveys, interview transcripts, and employment outcomes. They could report whether participants got jobs, but couldn't explain why some succeeded while others struggled.

After implementing connected feedback workflows with Intelligent Suite, they track individual skill trajectories in real time. Intelligent Cell extracts confidence measures from open-ended responses automatically. Intelligent Column identifies which training elements drive strongest outcomes. Intelligent Grid generates progress reports that funders can access anytime.

Analysis time dropped from weeks to minutes. More importantly, program staff now identify struggling participants during training instead of discovering issues in post-program reviews. Interventions happen when they still matter.

A foundation reviewing 300+ scholarship applications annually spent months manually evaluating submissions against rubric criteria. Different reviewers interpreted guidelines inconsistently, creating bias and slowing decisions.

With Intelligent Row processing each application against standardized evaluation criteria, initial reviews happen instantly. Staff focus on nuanced decisions instead of repetitive scoring. Intelligent Column identifies common strengths across top candidates and flags applications that need additional human review.
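Here is a Python sketch of the uniform-rubric idea. It assumes each criterion has already been rated on a 1-5 scale, by AI or a reviewer. The criteria, weights, and borderline band are hypothetical. Every application passes through the same weights, and only borderline or incomplete ones go to a person.

```python
# Hypothetical rubric: criterion -> weight (weights sum to 1.0).
RUBRIC = {"academic_record": 0.4, "financial_need": 0.35, "essay_quality": 0.25}


def score_application(ratings: dict[str, int], band: tuple[float, float] = (2.5, 3.5)) -> tuple[float, bool]:
    """Weighted score on the 1-5 scale plus a flag for human review.

    Missing criteria count as 0 and always trigger review; scores inside
    the borderline band also go to a reviewer.
    """
    missing = [c for c in RUBRIC if c not in ratings]
    total = sum(w * ratings.get(c, 0) for c, w in RUBRIC.items())
    needs_review = bool(missing) or band[0] <= total <= band[1]
    return round(total, 2), needs_review


print(score_application({"academic_record": 5, "financial_need": 4, "essay_quality": 5}))
print(score_application({"academic_record": 3, "financial_need": 3, "essay_quality": 3}))
```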

Review cycles shortened from 12 weeks to 3 weeks. Consistency improved because AI applies rubrics uniformly. Most importantly, faster decisions mean students know scholarship status in time to plan their academic year.

An enterprise software company tracked NPS religiously but never understood what drove score changes. When NPS dropped, they had numbers but no narrative. Improvements felt like guesswork.

By connecting satisfaction surveys with support ticket data and using Intelligent Column to analyze "What influenced your rating?" responses, patterns became clear: onboarding quality predicted long-term satisfaction, response speed mattered less than solution completeness, and documentation gaps frustrated customers more than product bugs.
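For reference, NPS is the percentage of promoters (scores of 9-10) minus the percentage of detractors (scores of 0-6). The Python sketch below computes it. It then counts "What influenced your rating?" themes separately for promoters and detractors, a simple way to see which experiences separate the two groups. It assumes theme labels have already been extracted from the open-text answers, and the function names are hypothetical.

```python
from collections import Counter


def nps(scores: list[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("no scores")
    promoters = sum(s >= 9 for s in scores)
    detractors = sum(s <= 6 for s in scores)
    return 100 * (promoters - detractors) / len(scores)


def themes_by_group(rows: list[tuple[int, set[str]]]) -> dict[str, Counter]:
    """Count influence themes for promoters and detractors; passives (7-8) are skipped."""
    groups = {"promoter": Counter(), "detractor": Counter()}
    for score, themes in rows:
        if score >= 9:
            groups["promoter"].update(themes)
        elif score <= 6:
            groups["detractor"].update(themes)
    return groups


print(nps([10, 10, 9, 8, 2]))  # 40.0
rows = [(10, {"onboarding quality"}), (3, {"documentation gaps"}), (5, {"documentation gaps", "bugs"})]
print(themes_by_group(rows)["detractor"].most_common(1))  # [('documentation gaps', 2)]
```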

These insights drove targeted improvements: enhanced onboarding workflows, better knowledge base content, proactive check-ins during implementation. NPS improved 18 points over six months because the team finally knew which experiences to enhance.

Even organizations committed to better customer experiences make structural mistakes that prevent data from driving action.

The most expensive mistake: collecting feedback in disconnected tools, planning to "bring it all together later" in analysis. By that point, you're stuck guessing which records match, losing context about customer journeys, and spending analyst time on data janitorial work instead of insight generation.

Fix this: Establish unique customer IDs before any feedback collection starts. Make connection automatic, not a post-collection cleanup task.

Many teams collect open-ended responses but only analyze them superficially—or not at all. They report quantitative metrics in dashboards, relegating stories to "selected quotes" that someone manually picked.

This misses the point. Customers explain why experiences succeed or fail in open text. Those explanations contain the insights that drive improvement. Without analyzing them systematically, you're measuring outcomes without understanding causes.

Fix this: Configure Intelligent Cell to extract structured insights from every qualitative response automatically. Make themes and patterns as measurable as numeric scores.

One-time surveys create one-way streets. Customers provide feedback, then never hear what happened with it. Teams can't ask clarifying questions. Incomplete responses stay incomplete forever.

This prevents both data quality and relationship quality from improving. Customers disengage when giving feedback feels like shouting into a void. Teams miss chances to understand nuance.

Fix this: Generate unique links for every submission that allow customers to update, expand, or clarify their responses. Use these links to close loops—sharing how feedback drove changes, requesting additional detail, acknowledging input.

Looking at satisfaction scores without demographic context, feedback themes without longitudinal patterns, or individual complaints without seeing how common they are leads to misguided improvement priorities.

Customer experience is inherently multidimensional. Understanding it requires connecting quantitative metrics with qualitative stories, comparing experiences across customer segments, tracking changes over time, and seeing individual journeys in the context of aggregate patterns.

Fix this: Use Intelligent Grid to analyze multiple dimensions simultaneously—generating reports that synthesize metrics, themes, demographics, and time trends in unified narratives.
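As a small illustration of looking at more than one dimension at once, this Python sketch averages satisfaction for every combination of customer segment and time period. The row keys (`segment`, `period`, `satisfaction`) are assumed names, not a platform schema.

```python
from collections import defaultdict
from statistics import mean


def satisfaction_by_segment_and_period(rows: list[dict]) -> dict[tuple[str, str], float]:
    """Average satisfaction for each (segment, period) pair, sorted by key."""
    buckets: dict[tuple[str, str], list[int]] = defaultdict(list)
    for r in rows:
        buckets[(r["segment"], r["period"])].append(r["satisfaction"])
    return {key: round(mean(vals), 1) for key, vals in sorted(buckets.items())}


rows = [
    {"segment": "new", "period": "Q1", "satisfaction": 6},
    {"segment": "new", "period": "Q1", "satisfaction": 8},
    {"segment": "new", "period": "Q2", "satisfaction": 8},
    {"segment": "renewal", "period": "Q1", "satisfaction": 9},
]
print(satisfaction_by_segment_and_period(rows))
```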

The traditional customer experience cycle (collect data quarterly, analyze for weeks, present findings, plan improvements, repeat) no longer matches the pace of customer expectations or market change. An always-on system replaces it with five connected elements:

Clean data from collection: Unique IDs, connected touchpoints, continuous feedback workflows that maintain accuracy without post-collection cleanup.

Real-time analysis: Intelligent Suite processing feedback as it arrives, surfacing patterns immediately instead of waiting for collection to close.

Live reporting: Dashboards and reports that update continuously as new data flows in, eliminating analysis freezes and stale insights.

Rapid experimentation: Ability to test improvements quickly, measure impact immediately, and iterate based on customer response rather than waiting for next year's study.

Continuous learning culture: Teams that treat customer feedback as an ongoing conversation, expect insights to evolve as experiences change, and make improvement a constant practice rather than a periodic project.

This shift doesn't just accelerate improvement cycles. It fundamentally changes what's possible—moving from "How were experiences last quarter?" to "How are experiences right now, and what's emerging?"

Organizations that implement always-on customer experience systems see benefits compound over time.

Year one: Analysis cycles shorten from months to minutes. Teams act on feedback faster. Customer responsiveness improves.

Year two: Historical data enables longitudinal analysis. You're not just measuring current satisfaction—you're understanding how experiences evolve, which interventions work over time, and what predicts long-term success.

Year three: Machine learning models trained on your accumulated data can help predict which customers need proactive support, which experiences drive retention, and which improvements will matter most. At that stage, AI does more than analyze past feedback; it helps anticipate future needs.

This compounding is only possible when data stays clean, connected, and continuous from the start. Fragmented collection, manual cleanup, and episodic analysis prevent the accumulation that makes advanced capabilities possible.

Improving customer experience doesn't start with better analytics, more sophisticated dashboards, or additional headcount. It starts with collecting data in ways that make analysis effortless and improvement continuous.

When feedback stays fragmented across tools, teams waste months reconciling records that should have connected from the start. When qualitative insights remain trapped in unanalyzed text, organizations miss the explanations that drive meaningful change. When analysis cycles lag weeks behind customer needs, improvements address problems that have already evolved.

Clean data collection with AI-powered analysis solves these structural problems. Unique IDs eliminate deduplication work. Connected workflows make longitudinal tracking automatic. Intelligent Suite extracts themes from open-ended responses in real time. Continuous reporting turns insights from periodic events into always-on intelligence.

The organizations seeing strongest customer experience improvements aren't those with the biggest analytics teams or most expensive BI tools. They're the ones that built feedback systems where data quality, connection, and analysis-readiness are automatic—not added afterward.

Start with centralized customer records that assign unique IDs from first contact. Design feedback touchpoints that connect through those IDs instead of creating isolated submissions. Integrate qualitative capture everywhere and configure Intelligent Cell to extract insights automatically. Establish Intelligent Grid reporting that updates continuously as new feedback arrives. Most importantly, close loops with customers directly—showing that their input drives visible improvements.

This isn't incremental optimization of existing processes. It's a fundamental shift from treating customer feedback as something you analyze after the fact to building systems where learning is continuous, insights compound over time, and improvement never stops.

The choice isn't whether to collect customer feedback—you're already doing that. The choice is whether that feedback will keep fragmenting across disconnected tools while analysts spend 80% of their time on cleanup, or whether it will flow through clean systems that turn every customer voice into measurable, actionable insight the moment it arrives.

Frequently Asked Questions About Improving Customer Experience

Common questions about building customer experience systems that actually drive improvement.

Q1. How is clean data collection different from just using survey software?

Traditional survey software captures individual submissions but doesn't maintain unique customer identities across multiple touchpoints, prevent duplicates at the source, or integrate qualitative and quantitative data within unified records. Clean data collection means every customer gets a persistent unique ID from first contact, all subsequent feedback connects to that same record automatically, and corrections update existing data instead of creating duplicates. This eliminates the 6-8 weeks most teams spend reconciling customer IDs and deduplicating records after collection ends.

Q2. Why do traditional CX tools fail at qualitative analysis?

Most survey platforms offer basic sentiment analysis that labels feedback as positive, negative, or neutral—useful for high-level trends but useless for understanding specific issues. They can't process complex inputs like interview transcripts, lengthy open-ended responses, or uploaded documents. AI-powered qualitative analysis through Intelligent Cell changes this completely: it extracts themes, measures their frequency, tracks how they evolve over time, and connects them automatically with associated metrics—all in real time as feedback arrives.

Q3. How quickly can teams actually see improvement from better data collection?

The timeline splits into immediate and compounding benefits. Immediate improvements appear within weeks: analysis cycles that previously took 6-8 weeks drop to minutes, deduplication work disappears entirely, and stakeholders access current insights instead of stale quarterly reports. Compounding benefits emerge over 6-12 months as clean, continuous data accumulates: longitudinal analysis becomes possible, you understand how experiences evolve over customer lifetimes, and patterns that predict success or churn become visible.

Q4. Can small teams implement this without technical expertise?

Yes, because the complexity is in the platform architecture rather than user configuration. Small teams don't need to hire data engineers, learn SQL, or manage integrations between multiple tools. Platforms designed for clean data collection handle unique ID management automatically, connect forms and contacts without manual linking, and process qualitative analysis through plain-English instructions rather than code.

Q5. How do you maintain data quality as volume scales?

Clean systems build quality into collection through automated validation that prevents errors before they're recorded, unique IDs that eliminate duplicates entirely, continuous workflows where customers correct their own data, and AI processing that maintains analysis consistency regardless of volume. Quality actually improves with scale because automated systems don't get tired, distracted, or inconsistent.

Q6. What makes continuous learning different from ongoing surveys?

Ongoing surveys still treat feedback as episodic if each survey creates disconnected records. Continuous learning means every new data point enriches existing understanding rather than starting over. Customers aren't re-surveyed from scratch—they update evolving records. Analysis doesn't wait for collection to finish—patterns surface as feedback arrives. Reports don't become obsolete quarterly—they update automatically with new information.