Compare survey data collection platforms with centralized databases, automatic deduplication, and real-time mixed-method insights.

Most teams collect feedback they can't use until it's too late to matter. Traditional survey tools create a fatal disconnect: intake forms, mid-program surveys, exit questionnaires, and follow-ups each live in separate silos with no shared identity layer. By the time you've exported, cleaned, deduplicated, and reconciled responses across three or four tools, the program has already moved forward—blind to what's actually working or failing.

The cost of this fragmentation is staggering. Evaluation teams routinely spend 80% of their time on data cleanup rather than analysis. Program managers make decisions based on incomplete pictures because connecting qualitative feedback with quantitative scores requires manual effort most teams simply don't have bandwidth for. Stakeholder stories remain anecdotal, locked in open-ended text fields that no one has time to code, theme, or quantify. The gap between "data collected" and "insights delivered" is where most real-time survey platforms quietly fail.

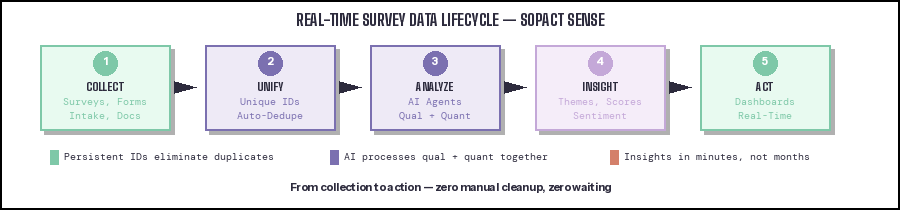

Sopact Sense closes that gap with an AI-native architecture designed to keep data clean, connected, and analysis-ready from the moment of collection. Every respondent receives a persistent unique ID that links intake, mid-program, and exit responses into a unified record—automatically. AI agents process qualitative and quantitative data streams together, extracting themes, sentiment, confidence scores, and custom rubric evaluations in minutes instead of months. There's no export-import cycle, no manual coding backlog, and no waiting for a data team to configure dashboards before insights emerge.

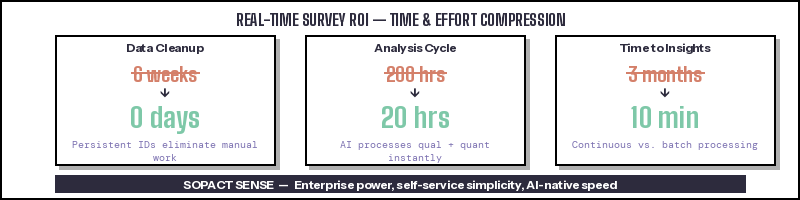

What once required six weeks of data cleanup now takes zero. Analysis cycles that consumed 200 hours shrink to 20. And insights that arrived three months after a program ended now surface in minutes while the program is still running—giving teams the continuous feedback loop they need to adapt, improve, and demonstrate impact in real time.

A centralized survey data collection platform stores all participant responses—across all forms and time periods—in a single unified database organized around individual identities rather than individual surveys.

In traditional survey tools, every form creates its own isolated dataset. An intake survey generates one spreadsheet. A satisfaction survey creates another. A follow-up assessment produces a third. If the same participant completed all three, their responses exist in three separate locations with no automatic connection between them.

A centralized platform inverts this architecture. Instead of organizing data by survey, it organizes data by participant. Each person receives a persistent unique identifier from their first interaction. Every subsequent survey, feedback form, or assessment they complete writes data to the same contact record. The result is a single source of truth where one participant equals one row, regardless of how many surveys they've completed.
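A minimal sketch of this inversion, using plain Python objects in place of a real database. The names `Contact`, `ContactStore`, `add_contact`, and `record_response` are hypothetical and are not Sopact Sense's API; they only illustrate data keyed by person rather than by survey.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Contact:
    """One participant, holding every survey response they submit."""
    contact_id: str
    name: str
    responses: dict = field(default_factory=dict)  # survey name -> answers


class ContactStore:
    """Hypothetical participant-centric store: one entry per person."""

    def __init__(self):
        self._contacts = {}

    def add_contact(self, name):
        # The ID is generated once, at first interaction, and never changes.
        contact_id = uuid.uuid4().hex
        self._contacts[contact_id] = Contact(contact_id, name)
        return contact_id

    def record_response(self, contact_id, survey, answers):
        # Every survey writes into the same contact record.
        self._contacts[contact_id].responses[survey] = answers

    def get(self, contact_id):
        return self._contacts[contact_id]

    def __len__(self):
        return len(self._contacts)


store = ContactStore()
pid = store.add_contact("Participant A")
store.record_response(pid, "intake", {"confidence": 2})
store.record_response(pid, "exit", {"confidence": 4})
```

After two surveys the store still holds a single contact, and both sets of answers are reachable from that one record.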

This distinction matters for three practical reasons:

Data integrity at the structural level. When unique identifiers enforce one-record-per-person at the database level, duplicates become structurally impossible rather than something you detect and fix after the fact. There are no matching algorithms to configure, no fuzzy name-matching to trust, and no manual reconciliation to perform.

Longitudinal tracking without custom engineering. Connecting a participant's baseline scores to their mid-program feedback to their exit assessment happens automatically because all data lives under the same contact ID. You don't need to build custom data pipelines or hire a data engineer to merge spreadsheets.

Cross-survey analysis in real time. Because responses from different surveys share the same underlying record, you can immediately analyze how intake characteristics correlate with outcomes, which program elements predict success, or where different participant segments diverge—without exporting, merging, or waiting.

Organizations working with centralized survey databases consistently report spending 80% less time on data preparation and getting to actionable insights in days instead of months. The efficiency gain doesn't come from faster forms—it comes from eliminating the cleanup work that traditional architectures create.

Duplicate survey responses create silent damage. They inflate response counts, distort averages, compromise longitudinal analysis, and waste hours of staff time on reconciliation work that produces uncertain results.

Traditional deduplication methods are reactive: they detect duplicates after they've already entered the system, then attempt to resolve them through matching algorithms. Cookie-based deduplication catches repeat submissions from the same browser but misses respondents who switch devices. IP-based deduplication wrongly blocks legitimate respondents on shared networks, where many people submit from the same address. Name-matching algorithms struggle with spelling variations, typos, and common names.
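To make the name-matching weakness concrete, here is a small sketch using only Python's standard library. The helper `fold_name` and the sample names are illustrative assumptions. Accent folding rescues one kind of variation, but a one-letter typo still slips through.

```python
import unicodedata


def fold_name(name):
    """Strip accents, case, and extra whitespace before comparing names."""
    decomposed = unicodedata.normalize("NFKD", name)
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return " ".join(stripped.casefold().split())


# Exact comparison treats an accent difference as two different people.
exact_match = "José Ramirez" == "Jose Ramirez"

# Folding accents makes this particular pair match.
folded_match = fold_name("José Ramirez") == fold_name("Jose Ramirez")

# A typo still defeats the comparison, so uncertainty remains.
typo_match = fold_name("Jose Ramirez") == fold_name("Jose Ramires")
```

Fuzzy matching can loosen the comparison further, but it then risks merging two different people who share a common name.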

Centralized platforms take a fundamentally different approach: they prevent duplicates from being created in the first place.

Step 1: Contact record first, survey second. Before any survey is sent, every participant exists as a unique contact record in the centralized database. This contact holds a persistent unique identifier—a system-generated ID that never changes.

Step 2: Survey links tied to identity. Each participant receives a personalized survey link that maps directly to their contact ID. When they submit a response, the data writes to their existing record rather than creating a new one.

Step 3: Database-level enforcement. The unique identifier serves as the primary key in the database. The system structurally cannot create a second record for the same person, no matter how many times they click the link, which devices they use, or whether their name or email changes between surveys.
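The three steps above can be sketched with Python's built-in `sqlite3` module. The table names, column names, and the `submit_intake` helper are assumptions for illustration, not any vendor's actual schema. The point is that a primary key on the contact ID turns a repeat submission into an update instead of a new row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.execute("CREATE TABLE contacts (contact_id TEXT PRIMARY KEY, email TEXT)")
conn.execute(
    "CREATE TABLE intake_responses ("
    " contact_id TEXT PRIMARY KEY REFERENCES contacts(contact_id),"
    " confidence INTEGER)"
)

# Step 1: the contact record exists before any survey is sent.
conn.execute("INSERT INTO contacts VALUES (?, ?)", ("c-001", "a@example.org"))
conn.commit()


def submit_intake(contact_id, confidence):
    # Step 2: the personalized link carries contact_id, so the response
    # is written against the existing contact.
    # Step 3: the primary key means a resubmission replaces the earlier
    # row rather than adding a second one.
    conn.execute(
        "INSERT OR REPLACE INTO intake_responses (contact_id, confidence)"
        " VALUES (?, ?)",
        (contact_id, confidence),
    )
    conn.commit()


submit_intake("c-001", 2)
submit_intake("c-001", 3)  # same link opened again, e.g. from another device

rows = conn.execute(
    "SELECT contact_id, confidence FROM intake_responses"
).fetchall()

# A second contact with the same ID is rejected by the database itself.
try:
    conn.execute("INSERT INTO contacts VALUES (?, ?)", ("c-001", "b@example.org"))
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True
```

In this sketch the second submission overwrites the first. A production system would more likely keep an audit trail of corrections, which is a design choice outside what this example shows.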

The practical difference:

Traditional approach: 500 survey submissions → 120 appear to be duplicates → analyst spends 40 hours matching names and emails → 437 unique individuals identified (uncertain accuracy) → analysis begins 3 weeks late.

Centralized approach: 500 survey submissions → 437 unique contact IDs → 0 hours deduplicating → 437 unique individuals confirmed with certainty → analysis begins immediately.

Beyond eliminating duplicates, persistent unique links enable participant self-correction. When a respondent clicks their unique link after already submitting, they see their previous responses and can update or correct them. The system records the correction to the same contact record. No new records. No duplicate entries. Just cleaner, more accurate data maintained by the people who know it best.

Data integrity means that information remains accurate, consistent, and reliable throughout its entire lifecycle—from the moment a participant enters a response to the point where a decision-maker acts on an insight. In survey data collection, integrity failures are common, costly, and frequently invisible until analysis is already underway.

Example 1: Entity Integrity — One Person, One Record

A workforce development program surveys 300 participants at intake and again at program completion. Using traditional tools with generic survey links, 47 participants submit twice at intake (confusion about whether their first attempt went through), 23 have name variations across surveys ("José Ramirez" vs "Jose Ramirez"), and 8 changed email addresses between surveys. The analyst discovers 78 potential duplicates requiring manual review. After 30 hours of reconciliation, confidence in the final count remains uncertain.

With persistent unique identifiers, the same program produces exactly 300 contact records across both survey waves. Each participant's intake and completion data links automatically. Zero reconciliation required, 100% confidence in participant-level change scores.

Example 2: Referential Integrity — Connected Data Across Touchpoints

A scholarship program collects applications (survey 1), interview scores (survey 2), and post-award feedback (survey 3). In disconnected tools, each dataset uses different identifiers: application numbers, interviewer-assigned codes, and email-based logins. Connecting a single scholar's journey from application through feedback requires building a manual crosswalk table—error-prone work that delays reporting by weeks.

In a centralized platform, one contact ID connects all three touchpoints automatically. A program officer can view any scholar's complete journey in a single unified record without building crosswalks or writing queries.

Example 3: Domain Integrity — Valid Data at the Point of Entry

A health services organization collects patient satisfaction surveys. Without validation rules, 12% of date-of-birth fields contain impossible values (years like "1850" or "2030"), 8% of required fields are left blank, and Likert scale responses show suspicious patterns suggesting inattentive respondents.

Platforms with built-in validation enforce domain integrity during collection: date fields accept only valid ranges, required fields must be completed before submission, and attention-check questions flag potential low-quality responses for review. Data arrives clean rather than requiring cleanup.
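A rough sketch of point-of-entry validation follows. The field names, the 120-year age bound, and the straight-lining rule are all assumptions chosen for illustration; a real platform would expose its own rule configuration. Errors block submission, while flags only mark a response for review.

```python
from datetime import date

REQUIRED_FIELDS = ("date_of_birth", "overall_rating")  # hypothetical field names
ATTENTION_ITEM, ATTENTION_ANSWER = "attention_check", 4  # "select 4 for this item"
MAX_AGE_YEARS = 120  # assumed upper bound for a plausible age


def validate_response(response, likert_items, today):
    """Return (errors, flags) for one submitted response."""
    errors, flags = [], []

    # Required fields must be present and non-empty.
    for name in REQUIRED_FIELDS:
        if response.get(name) in (None, ""):
            errors.append(f"{name} is required")

    # Dates of birth must not be in the future or implausibly old.
    dob = response.get("date_of_birth")
    if isinstance(dob, date):
        too_old = today.year - dob.year > MAX_AGE_YEARS
        if dob > today or too_old:
            errors.append("date_of_birth is outside the valid range")

    # Attention check: the respondent was told which option to pick.
    if response.get(ATTENTION_ITEM) != ATTENTION_ANSWER:
        flags.append("failed attention check")

    # Straight-lining: the same answer on every Likert item.
    answers = [response.get(item) for item in likert_items]
    if len(answers) >= 3 and None not in answers and len(set(answers)) == 1:
        flags.append("identical answer on every Likert item")

    return errors, flags


today = date(2026, 1, 1)
likert = ["q1", "q2", "q3"]
bad = {"date_of_birth": date(1850, 5, 1), "overall_rating": "",
       "attention_check": 2, "q1": 3, "q2": 3, "q3": 3}
good = {"date_of_birth": date(1990, 5, 1), "overall_rating": 4,
        "attention_check": 4, "q1": 4, "q2": 2, "q3": 5}

bad_errors, bad_flags = validate_response(bad, likert, today)
good_errors, good_flags = validate_response(good, likert, today)
```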

Example 4: Temporal Integrity — Accurate Change Measurement Over Time

A job training program measures confidence scores at baseline (week 1), midpoint (week 6), and completion (week 12). With isolated survey tools, the analyst must manually match 200 participants across three separate spreadsheets. Matching errors introduce noise: a 3-point confidence increase for one participant might actually belong to a different person with a similar name.

Persistent contact IDs make temporal integrity automatic. Each participant's week-1, week-6, and week-12 scores exist under the same record. Change calculations are mathematically precise because the data is structurally guaranteed to belong to the correct individual.
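As an illustration, once every wave is stored under the same contact ID, a change score is a plain subtraction with no matching step. The data layout and the `change_score` helper below are hypothetical, and the scores are made-up sample values.

```python
# Confidence scores keyed by contact ID, then by program week (sample data).
scores = {
    "c-001": {1: 2, 6: 3, 12: 5},
    "c-002": {1: 3, 6: 3, 12: 4},
    "c-003": {1: 4, 6: 4},  # no week-12 response yet
}


def change_score(waves, start=1, end=12):
    """Return end-minus-start for one participant, or None if a wave is missing."""
    if start not in waves or end not in waves:
        return None
    return waves[end] - waves[start]


changes = {cid: change_score(waves) for cid, waves in scores.items()}
```

Returning `None` for an incomplete record keeps a missing wave visible instead of silently treating it as zero change.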

These examples demonstrate a consistent principle: data integrity in survey collection is determined primarily by architectural decisions made before the first question is written, not by cleanup procedures applied after data is collected.

Several platform categories exist for survey data collection, and they differ dramatically in what "real-time" actually means.

Traditional survey tools (SurveyMonkey, Google Forms, Typeform) update dashboards as responses arrive. You can watch response counts, basic charts, and completion rates in real time. However, these platforms treat each survey as an isolated dataset with no cross-survey integration, no persistent participant identity, and limited qualitative analysis. "Real-time" means fast data display, not fast insight delivery.

Enterprise research platforms (Qualtrics, Medallia) offer advanced real-time dashboards, sophisticated panel management, and text analytics modules. These tools handle complex research designs, multi-country deployments, and detailed experimental controls. However, implementation typically requires 3-12 months, dedicated data teams, and significant investment. Text analytics features exist as add-on modules requiring separate configuration and expertise.

AI-native platforms (Sopact Sense) build the entire system around centralized identity management and continuous AI processing. Survey responses, open-ended text, uploaded documents, and interview transcripts all connect to unified participant records and are analyzed as they arrive. Qualitative and quantitative data are processed together, with no separate tools, no export-import cycles, and no manual coding.

Mid-tier and specialized tools (Alchemer, SurveyCTO, SmartSurvey) occupy various niches. Alchemer offers good validation and customizable dashboards but requires API work for cross-survey integration. SurveyCTO excels at field data collection with offline capability. SmartSurvey provides GDPR-compliant collection with auto-categorization of text responses.

The critical evaluation criterion isn't which platform has the most features—it's what happens in the time between "data collected" and "insight delivered."

The comparison table and individual platform profiles below break down these differences across the dimensions that actually determine time-to-insight.

Selecting the right survey platform requires evaluating capabilities that most comparison guides ignore. Feature lists emphasize question types, skip logic, and design templates—the parts of survey work that take 20% of total effort. The following criteria focus on the 80% that determines whether insights arrive in time to matter.

Criterion 1: How does the platform manage participant identity?

This is the single most consequential architectural decision. Ask: does each participant get a persistent identifier that follows them across every form they complete? Or does each survey create independent records that require manual matching? If the platform doesn't maintain participant identity natively, every other "real-time" feature is undermined by the weeks of reconciliation work required before analysis begins.

Criterion 2: What happens to open-ended responses?

Every platform handles quantitative data well—averages, charts, and cross-tabulations update automatically. The differentiator is qualitative processing. When 200 participants answer "What was your biggest challenge?", does the platform show raw text requiring manual reading, offer basic word clouds, require a separate text analytics module, or extract themes, sentiment, and rubric scores automatically as responses arrive? Qualitative analysis is where most programs stall.

Criterion 3: Can you connect data across multiple surveys without technical work?

Test this specifically: if you create an intake survey and an exit survey, can you view a single participant's responses from both in one place without exporting, merging, or writing code? If the answer involves "export to CSV" or "use our API," the platform treats cross-survey analysis as an afterthought.

Criterion 4: What does implementation actually require?

A platform that takes 6 months to implement and requires consultants is not delivering "real-time" anything for the first half-year. Ask for realistic implementation timelines, including who needs to be involved and what technical resources are required. Self-service platforms where program teams operate independently should be strongly preferred unless your use case genuinely requires enterprise-grade experimental design.

Criterion 5: Can the platform process documents and interviews alongside survey data?

Participant feedback doesn't live exclusively in survey responses. Programs collect PDFs, interview transcripts, partner reports, and application narratives. If these require separate tools with separate analysis workflows, you're building fragmentation into your process.

Most modern survey platforms advertise CRM integration, but the depth varies enormously—and the differences determine whether "integration" saves time or creates new problems.

Level 1: Basic notification. The survey tool notifies the CRM that a response was received. You might see a contact activity record showing "Completed satisfaction survey" without the actual response data.

Level 2: Field-level sync. Individual response fields map to CRM contact properties. When someone submits a rating of 8/10, the CRM field updates automatically. This works for simple quantitative data but breaks down with multi-question surveys or repeated surveys (fields get overwritten).

Level 3: Record-level integration with history. Each survey response creates a linked record in the CRM while preserving the full dataset and maintaining history across multiple submissions.

Level 4: Bidirectional identity management. The survey platform and CRM share participant identity natively. Contact records are either the same object or linked through a persistent identifier, so data flows in both directions. This eliminates middleware dependencies and ensures survey insights and CRM data inform each other in real time.
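The difference between Level 2 and Level 3 is easiest to see side by side. This sketch uses in-memory dictionaries as a stand-in for a CRM. The function names, field names, dates, and ratings are assumptions for illustration and do not correspond to any particular CRM's API.

```python
from datetime import date

crm_fields = {}   # Level 2: one property per contact, overwritten on each sync
crm_history = {}  # Level 3: a list of linked response records per contact


def sync_field_level(contact_id, rating):
    # Each new submission replaces the previous value.
    crm_fields[contact_id] = {"satisfaction_rating": rating}


def sync_with_history(contact_id, survey, submitted_on, rating):
    # Each new submission is appended, so earlier responses survive.
    crm_history.setdefault(contact_id, []).append(
        {"survey": survey, "submitted_on": submitted_on, "rating": rating}
    )


submissions = [
    ("intake", date(2025, 1, 10), 6),
    ("midpoint", date(2025, 4, 10), 7),
    ("exit", date(2025, 7, 10), 8),
]
for survey, submitted_on, rating in submissions:
    sync_field_level("c-001", rating)
    sync_with_history("c-001", survey, submitted_on, rating)

latest_only = crm_fields["c-001"]
full_history = [record["rating"] for record in crm_history["c-001"]]
```

With field-level sync only the exit rating remains, while the history-preserving version still holds all three ratings in order.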

The practical question: when a participant completes their third survey, can a program manager see all three responses alongside their CRM history in a single view without switching tools? If yes, the integration is genuinely useful. If not, it's adding complexity without reducing the analysis bottleneck.

Platforms that include built-in contact management alongside survey functionality often deliver the smoothest experience because identity management doesn't depend on middleware reliability or API rate limits.

Most survey tools treat each data collection event as an isolated snapshot—useful for understanding a moment, but inadequate for measuring change. Continuous tracking requires a fundamentally different architecture.

What continuous tracking means in practice: A job training program needs to know whether participants' confidence, skill levels, and employment outcomes improve over a 12-month period. This requires collecting data at intake (month 0), mid-program (month 3), completion (month 6), and follow-up (month 12). Four survey waves, same participants, same dimensions across time.

Why traditional tools fail: With isolated survey tools, each wave creates a separate dataset. Connecting participant A's month-0 responses to their month-12 outcomes requires manual matching, typically by name or email, both of which can change over a year. By wave three, matching errors accumulate. The resulting report shows aggregate averages ("confidence increased from 3.2 to 4.1") but cannot reliably demonstrate individual transformation.

What continuous tracking requires: Persistent unique identifiers assigned at first contact that follow each participant across every interaction. A contact-first database where survey responses accumulate under participant records rather than survey records. Automated analysis that processes new data as it arrives and compares it to historical baselines.

With this architecture, program staff see each participant's complete trajectory in a single view. Change scores are mathematically precise because the system guarantees data belongs to the correct individual. Staff can identify participants falling behind at month 3—while there's still time to provide additional support—rather than discovering this in a month-12 retrospective report.
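One way to picture the month-3 check is a simple rule over each participant's trajectory. The `falling_behind` helper, the one-point minimum gain, and the sample scores are assumptions for illustration; a real program would set its own criteria.

```python
# Confidence scores keyed by contact ID, then by program month (sample data).
trajectories = {
    "c-001": {0: 2, 3: 4},
    "c-002": {0: 3, 3: 3},
    "c-003": {0: 4, 3: 2},
    "c-004": {0: 3},  # month-3 survey not yet submitted
}


def falling_behind(data, checkpoint=3, min_gain=1):
    """List contacts whose gain from month 0 to the checkpoint is below min_gain."""
    flagged = []
    for contact_id, by_month in data.items():
        if 0 in by_month and checkpoint in by_month:
            if by_month[checkpoint] - by_month[0] < min_gain:
                flagged.append(contact_id)
    return sorted(flagged)


needs_support = falling_behind(trajectories)
```

Participants without a checkpoint response are skipped here rather than flagged, so missing data would need its own follow-up list.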

The shift from point-in-time surveys to continuous tracking transforms data collection from a documentation exercise into a management tool. Programs improve in real time rather than in hindsight.