Accelerator Application — From Static Intake to Lifecycle Impact

For years, accelerator application platforms have been sold as bundles of features: portals, dashboards, reminders, payment workflows. Helpful, sure. But the real problem runs deeper. Two realities are now unavoidable. First, AI collapses manual review time and improves consistency, yet most systems were built for a pre-AI world or bolt on AI gimmicks that neither scale nor cut design-to-dashboard time by 30×. Second, AI is only as good as your data: if inputs are messy, no model saves you. What you need is clean, structured data from day one and a system that learns with you through plain language, not endless configuration.

The question isn’t “can we process 1,000 applications?” It’s: can we do it faster, fairer, and with proof of long-term outcomes? Old models shave a little admin time but still bury reviewers in hundreds of hours, leave bias unchecked, and trap evidence in PDFs and spreadsheets. The result: months-long cycles, shaky fairness, and thin impact reporting. The fix flips the ROI equation: use structured rubrics plus AI-assisted analysis to cut reviewer hours by 60–75%; benchmark cohorts in real time to surface equity issues before decisions are final; and replace static exports with longitudinal evidence that shows what happened after selection.

“Most accelerator platforms still treat applications and demo days as the finish line. But the real measure of success is tracking founder journeys—from intake to mentoring to fundraising outcomes. I’ve seen too many accelerators drown in forms, spreadsheets, and static reports. The future is clean-at-source data, centralized across the lifecycle, with AI surfacing insights in minutes. That’s how accelerators prove impact to funders, investors, and founders themselves.” — Unmesh Sheth, Founder & CEO, Sopact

And this isn’t unique to accelerators. The same shift applies to scholarships, CSR grants, and research funding. Intake and selection are only the beginning. If you stop there, you miss the most important question: what happened after selection?

Definition & Why Now

An accelerator application system should do more than qualify applicants. It should create a durable journey record that connects application evidence, reviewer notes, mentoring touchpoints, customer interviews, and investor signals to outcomes like pilots, revenue, and funding. In 2025, accelerators win by proving learning velocity and risk reduction—not by shipping the most forms. That requires clean-at-source IDs, centralized data, and AI that reads long text and attachments while preserving a traceable evidence trail.

Primary Thesis: Application → Mentoring → Outcomes

After demo day, data often splinters across inboxes and drives. The story of value creation gets thinner the further you move from the application. Flip the architecture: capture structured data at intake, maintain identity continuity across stages, and let AI summarize and link narratives to quantitative progress. The payoff is faster, fairer selection up front—and continuous, defensible outcomes you can show to funders, investors, and founders.

What’s Broken in Accelerator Application Workflows

Static intake, static insight

Long forms produce surface-level signals. Without lifecycle tracking, you can’t see whether mentoring or customer work changed a company’s trajectory.

Siloed evidence

Reviewer notes, interviews, and investor feedback live in different tools. Joins break; timelines drift; decisions lean on anecdotes.

Untraceable reasoning

Slides appear at the end with no evidence trail. Funders and investors can’t audit how you scored or why you selected a company.

Blueprint: From Static Forms to Continuous Feedback

Use this seven-step blueprint to turn your accelerator application into a lifecycle intelligence system.

1) Define decisions and outcomes

- Action rule: “If reviewer notes cite ‘problem clarity’ gaps in >25% of applications, require a ‘customer proof’ artifact.”

- Pick 1–2 cohort outcomes (e.g., pilots signed, revenue milestones, raise within 6–12 months).

2) Collect clean at the source

- Attach founder_id, company_id, cohort_id to every submission; auto-timestamp; store stage labels (apply, interview, mentor, demo, post-demo).

- Require proof fields (links or uploads) wherever claims are made.
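A minimal sketch of step 2, assuming an in-memory store: every submission carries founder_id, company_id, cohort_id, and a stage label, is timestamped automatically in UTC, and is rejected if a claim lacks a proof link or if the same founder, company, cohort, and stage was already submitted. The `Submission` and `IntakeStore` names, the exact stage strings, and the duplicate rule are illustrative assumptions, not any specific platform’s API.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone

# Stage labels from the blueprint; the exact strings are an assumption.
STAGES = ("apply", "interview", "mentor", "demo", "post-demo")


@dataclass
class Submission:
    founder_id: str
    company_id: str
    cohort_id: str
    stage: str
    # Each claim maps to a proof link or upload path; None or "" means no proof was supplied.
    claims: dict[str, str | None] = field(default_factory=dict)
    # Auto-timestamped in UTC when the record is created.
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class IntakeStore:
    """Hypothetical in-memory store that validates each submission on entry."""

    def __init__(self) -> None:
        self.records: list[Submission] = []
        self._seen: set[tuple[str, str, str, str]] = set()

    @staticmethod
    def _key(sub: Submission) -> tuple[str, str, str, str]:
        return (sub.founder_id, sub.company_id, sub.cohort_id, sub.stage)

    def validate(self, sub: Submission) -> list[str]:
        """Return every problem found, so the form can show them all at once."""
        errors = []
        for name in ("founder_id", "company_id", "cohort_id"):
            if not getattr(sub, name).strip():
                errors.append(f"missing {name}")
        if sub.stage not in STAGES:
            errors.append(f"unknown stage {sub.stage!r}")
        for claim, proof in sub.claims.items():
            if not proof:
                errors.append(f"claim {claim!r} has no proof link or upload")
        if self._key(sub) in self._seen:
            errors.append("duplicate submission for this founder, company, cohort, and stage")
        return errors

    def submit(self, sub: Submission) -> list[str]:
        """Store the submission only if it passes validation; return any errors."""
        errors = self.validate(sub)
        if not errors:
            self.records.append(sub)
            self._seen.add(self._key(sub))
        return errors
```

Returning a list of errors instead of raising on the first one lets founders fix every issue in a single pass, which matters when the goal is clean data without extra back-and-forth.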

3) Read long text with a small codebook

- Create 6–10 parent themes (e.g., Problem Clarity, Evidence of Demand, Team–Problem Fit, Moat, Ethical/Regulatory Risk).

- Give each one a one-sentence definition and a sample quote; version each cohort.
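A minimal sketch of a versioned codebook, assuming themes are kept as plain records. The class enforces the 6–10 parent-theme range, unique theme names, and a definition plus sample quote for every theme; `next_version` produces the v1.0 → v1.1 style bump mentioned in the case examples. The `Theme` and `Codebook` names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Theme:
    name: str
    definition: str    # one-sentence definition
    sample_quote: str  # one representative quote from an application


@dataclass(frozen=True)
class Codebook:
    version: str       # e.g. "v1.0"
    cohort_id: str
    themes: tuple[Theme, ...]

    def __post_init__(self) -> None:
        n = len(self.themes)
        if not 6 <= n <= 10:
            raise ValueError(f"expected 6-10 parent themes, got {n}")
        if len({t.name for t in self.themes}) != n:
            raise ValueError("theme names must be unique")
        for t in self.themes:
            if not t.definition.strip() or not t.sample_quote.strip():
                raise ValueError(f"theme {t.name!r} needs a definition and a sample quote")

    def next_version(self, cohort_id: str, themes: tuple[Theme, ...]) -> Codebook:
        """Create the next minor version (v1.0 -> v1.1) for a new cohort; the old one stays unchanged."""
        major, minor = self.version.lstrip("v").split(".")
        return Codebook(f"v{major}.{int(minor) + 1}", cohort_id, themes)
```

Making the codebook immutable means a past cohort’s definitions can never be edited in place; every change produces a new, comparable version.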

4) First-pass AI + human review

- AI drafts clusters and summaries; humans review a 10–15% sample; merge/split themes; pin exact quotes as evidence.
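One way to draw the 10–15% human-review sample so it can be reproduced later is to seed the random draw and store the seed with the cycle’s artifacts. A minimal sketch, assuming records are identified by string IDs; `review_sample` and its `percent` and `seed` parameters are illustrative, not part of any particular tool.

```python
import random


def review_sample(record_ids, percent: int = 15, seed: int = 2025):
    """Return a reproducible sample of about `percent`% of records (rounded up, at least one)."""
    if not 0 < percent <= 100:
        raise ValueError("percent must be greater than 0 and at most 100")
    ids = sorted(set(record_ids))  # dedupe and sort so input order cannot change the draw
    if not ids:
        return []
    k = max(1, (len(ids) * percent + 99) // 100)  # integer ceiling avoids float rounding surprises
    return sorted(random.Random(seed).sample(ids, k))
```

Using a dedicated `random.Random(seed)` instance, rather than the module-level generator, keeps the draw independent of any other randomness in the same process.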

5) Compare by segment and time

- Slice by sector, geography, founder background, and reviewer to spot bias and opportunity.

- Align windows (pre-interview vs. post-interview) for fair comparisons.
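A minimal sketch of the segment comparison in step 5, assuming each record is a plain dict with a segment field (for example `geography` or `reviewer`), a `window` label such as "pre-interview" or "post-interview", and a boolean flag such as `selected` or a theme indicator. All field names are hypothetical. Restricting the count to one window keeps the comparison like-for-like.

```python
from collections import defaultdict


def rate_by_segment(records, segment, flag, window=None):
    """Per segment value, return (record count, share of records where `flag` is truthy).

    If `window` is given, only records from that stage window are counted,
    so pre-interview and post-interview data are never mixed."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for r in records:
        if window is not None and r.get("window") != window:
            continue
        key = r.get(segment, "unknown")  # missing segment values are grouped, not dropped
        totals[key] += 1
        hits[key] += bool(r.get(flag))
    return {k: (totals[k], hits[k] / totals[k]) for k in sorted(totals)}
```

A large gap between segments is a prompt to audit the underlying decisions, not proof of bias on its own; small segments in particular produce noisy rates, which is why the count is returned alongside each rate.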

6) Link evidence to outcomes

- Relate early themes to downstream outcomes (pilots, retention, raises). Start with descriptive checks before modeling.

- Name one change to test now and one risk to monitor.
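A descriptive check for step 6, assuming early themes are stored per company_id as a set and the outcome (for example, a raise within 12 months) as a boolean per company_id. It compares the outcome rate for companies whose application showed a theme against those whose application did not, and counts companies with no outcome recorded yet. This is a comparison of rates, not a causal estimate; the function and argument names are hypothetical.

```python
def outcome_by_theme(themes_by_company, outcome_by_company, theme):
    """Compare outcome rates for companies with vs. without `theme` in their application.

    Returns {"with": (n, rate), "without": (n, rate), "missing_outcome": count};
    rate is None when a group is empty."""
    groups = {"with": [], "without": []}
    missing = 0
    for company_id, themes in themes_by_company.items():
        if company_id not in outcome_by_company:
            missing += 1  # outcome not recorded yet: count it rather than treat it as a failure
            continue
        group = "with" if theme in themes else "without"
        groups[group].append(bool(outcome_by_company[company_id]))
    summary = {
        name: (len(vals), sum(vals) / len(vals) if vals else None)
        for name, vals in groups.items()
    }
    summary["missing_outcome"] = missing
    return summary
```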

7) Close the loop and version

- Share transparent selection notes where appropriate; record corrective actions in a decisions log.

- Version instruments, codebooks, and reviewer rubrics each cohort.
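A minimal sketch of an append-only decisions log for step 7, assuming a JSON Lines file (one JSON object per line). Each entry stamps the cohort and codebook version so a later reader can tie a change to the instrument that was live at the time. The field names, function names, and file layout are assumptions, not a required schema.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def log_decision(path, cohort_id, codebook_version, change, rationale, evidence):
    """Append one decision to the log; existing entries are never rewritten."""
    entry = {
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "cohort_id": cohort_id,
        "codebook_version": codebook_version,
        "change": change,
        "rationale": rationale,
        "evidence": list(evidence),  # links to the quotes or files that motivated the change
    }
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")
    return entry


def read_log(path):
    """Return all logged decisions in the order they were recorded."""
    p = Path(path)
    if not p.exists():
        return []
    with p.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

Appending rather than editing keeps the history intact: a corrective action shows up as a new entry, so reviewers and funders can see what changed and when.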

Integrating Qual + Quant in Accelerator Programs

Blending qualitative narratives with quantitative progress makes accelerator decisions faster and fairer. Keep joins simple and traceable.

- Join keys: founder_id, company_id, cohort_id; never rely on email alone.

- Time: compare like-for-like stage windows; document filters.

- Analysis: start with theme frequency by segment; add simple correlations to outcomes once definitions stabilize.
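A minimal sketch of these join rules, assuming qualitative rows (themes) and quantitative rows (progress metrics) are dicts that each carry founder_id, company_id, cohort_id, and a `window` label. Rows are matched on the composite ID key within a single window, never on email, and unmatched keys are returned rather than silently dropped so the gap can be documented. The function names and the `window` field are hypothetical.

```python
ID_FIELDS = ("founder_id", "company_id", "cohort_id")


def key_of(row):
    """Composite join key; email is deliberately not part of it."""
    return tuple(row[f] for f in ID_FIELDS)


def join_on_ids(qual_rows, quant_rows, window):
    """Inner-join theme rows to progress rows on the composite ID key within one stage window.

    Returns (joined_rows, unmatched_keys). Raises ValueError if the progress data
    has two rows for the same key in the window, since that signals broken IDs."""
    quant = {}
    for row in quant_rows:
        if row["window"] != window:
            continue
        k = key_of(row)
        if k in quant:
            raise ValueError(f"duplicate progress row for {k} in window {window!r}")
        quant[k] = row
    joined, unmatched = [], []
    for row in qual_rows:
        if row["window"] != window:
            continue
        k = key_of(row)
        if k in quant:
            joined.append({**quant[k], **row})  # theme fields win if a column name collides
        else:
            unmatched.append(k)
    return joined, unmatched
```

Failing loudly on duplicate keys is a deliberate choice: a silent overwrite would hide exactly the kind of broken join that clean-at-source IDs are meant to prevent.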

Reliability & Auditability

- Inter-rater sampling: double-code 10–15% of notes; resolve disagreements; update definitions.

- Version control: stamp instruments, rubrics, and codebooks; keep a short changelog.

- Reproducibility: store prompts/settings for any AI assist; keep example quotes under each theme with source links.

- Bias watch: track response and selection rates by segment; record mitigation steps.
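For the inter-rater check, one common agreement statistic is Cohen’s kappa, which corrects raw agreement for the agreement two coders would reach by chance. The article does not prescribe a metric, so this is a swapped-in choice; the sketch assumes each coder assigned exactly one parent theme per note in the double-coded sample, and it also lists the disagreements to bring to the resolution discussion. Function names are hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement between two coders' labels for the same notes."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    count_a, count_b = Counter(coder_a), Counter(coder_b)
    # Expected agreement if each coder labeled independently at their own base rates.
    labels = count_a.keys() | count_b.keys()
    expected = sum(count_a[label] * count_b[label] for label in labels) / (n * n)
    if expected == 1:
        return 1.0  # both coders used one identical label throughout; agreement is trivially perfect
    return (observed - expected) / (1 - expected)


def disagreements(note_ids, coder_a, coder_b):
    """Notes where the coders differ, as (note_id, label_a, label_b)."""
    return [(i, a, b) for i, a, b in zip(note_ids, coder_a, coder_b) if a != b]
```

What counts as acceptable agreement is a judgment call; tracking the value across cycles, alongside the disagreement list that drives definition updates, is more informative than any single threshold.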

“Transparent methods beat clever models when trust is on the line.” — Evaluation practice note

5 Must-Haves for Accelerator Software

Each item maps to the accelerator application lifecycle.

1) Clean-at-Source Applications

Validate on entry—unique IDs, no duplicates, required artifacts—so downstream joins never break.

2) Founder Lifecycle Tracking

One journey record from intake → interview → mentoring → demo → post-demo outcomes.

3) Mentor & Investor Feedback Loops

Structured + open feedback captured at every stage to surface momentum and risk quickly.

4) Mixed-Method Analysis

Link qualitative themes from applications and notes to quantitative progress and milestones.

5) AI Reading & Reasoning

Scale review of long text and attachments; draft summaries; highlight contradictions and gaps.

Case Examples

Example A — Accelerator Selection & Onboarding

When: After submit, post-interview, week-1 onboarding. Audience: Applicants & new cohort. Core metric: Application completion & acceptance rate. Top “why”: Pace and unclear value proposition. Action loop: Publish rubric, resequence onboarding.

- Instrument (exact wording): “Describe your most recent user conversation and what changed,” “Link one proof artifact,” “What step did you just complete?”

- IDs: pass founder_id, company_id, cohort_id, stage, timestamp.

- 15–20 min analysis: AI clusters themes; reviewer checks 10–15%; finalize 4–6 parent themes; compare by stage and geography; join to completion/acceptance.

- If pattern X appears: “Value clarity” gaps post-onboarding → add early context section; share explainer; record change in decisions log.

- Iterate: Keep wording stable; version the codebook v1.0 → v1.1; track acceptance lift in the next cohort.

Example B — Workforce Cohort Mid-Program Pulse

When: Week 4. Audience: Learners. Core metric: Course completion. Top “why”: Unclear practice steps. Action loop: Micro-lesson + checklist.

- Instrument (exact wording): “Where did you hesitate—name the exact step,” “What would remove that hesitation next week?”

- IDs: pass participant_id, cohort_id, instructor_id, timestamp.

- 15–20 min analysis: Auto-cluster → human merge/split → codes: Clarity, Practice Time, Tool Access, Peer Support → compare by instructor → join to completion trend.

- If pattern X appears: “Clarity” spikes in one section → 10-minute demo + checklist; communicate change to close loop.

- Iterate: Keep prompts stable; add one Likert item on “lab clarity”; version the codebook; track lift the following week.

30-Day Cadence

- Week 1: Collect at milestones; verify IDs/timestamps; quick data quality check.

- Week 2: First-pass coding; inter-rater sample; update codebook with examples.

- Week 3: Join to outcomes; name one change to test and one risk to monitor; implement micro-tests.

- Week 4: Communicate changes; archive versions; prep next cycle and stakeholder readout.

“Continuous, not colossal.” — Change management reminder

Optional: How a Tool Helps (Plain Language)

What a modern accelerator application platform should do:

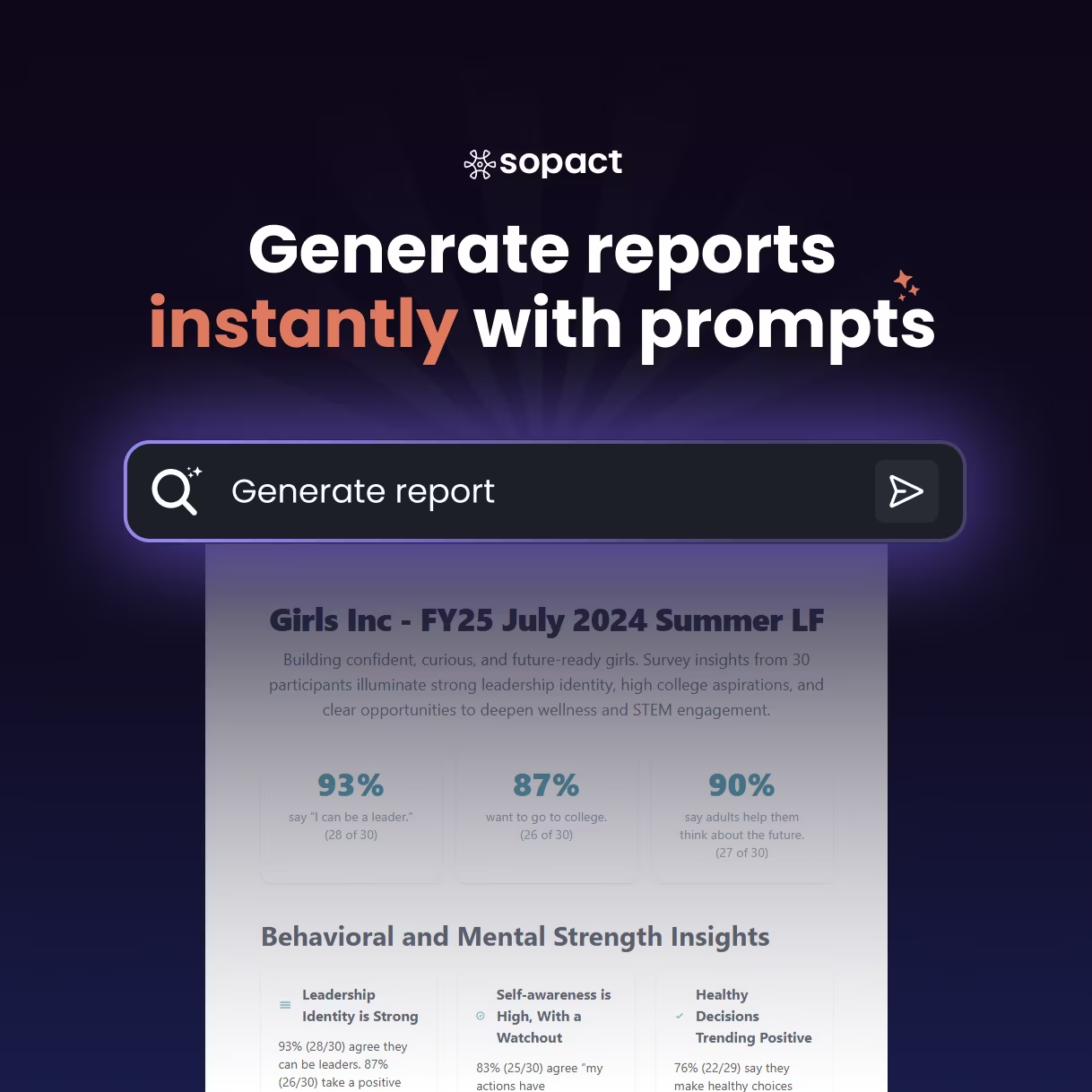

- Speed: first-pass grouping and summaries in minutes, cutting reviewer hours by 60–75%.

- Reliability: automatic IDs, timestamps, and versioning across stages.

- Auto-grouping: draft themes you can accept, rename, or merge—quotes pinned for evidence.

- Clean IDs: guardrails prevent duplicate founders/companies and broken joins.

- Live view: segment-level shifts appear as new data arrives, with cohort comparisons on demand.

FAQ

How should we redesign our accelerator application for lifecycle tracking?

Keep eligibility checks but add fields that create an evidence trail: customer proof links, mentor touchpoints, and stage labels. Pass stable IDs (founder_id, company_id, cohort_id) and timestamps automatically. Require one concise narrative prompt tied to your rubric, such as problem clarity or user evidence. Standardize uploads so later cohorts remain comparable. Document all changes in a short changelog so reviewers and auditors understand version differences. The goal is traceable data you can join to downstream outcomes.

What’s the simplest way to make qualitative reviews consistent?

Start with a small codebook of 6–10 parent themes tied to your rubric. Define each theme in one sentence and include one example quote. Double-code a 10–15% sample each cycle to calibrate reviewers, then record disagreements and resolve definitions. Keep the codebook versioned so you can compare cohorts fairly. Save AI prompts or parameters if you use assistance and store them with the cycle’s artifacts. Consistency beats complexity when decisions must be defended.

How do we link application evidence to outcomes without heavy modeling?

Use stable IDs and stage windows to join early themes with later signals like pilots, retention, or raises. Begin with descriptive checks: theme frequency by segment, simple correlations to outcomes, and before/after comparisons around changes. Record filters and time ranges so results are reproducible. Only layer on modeling after two stable cycles of data and definitions. Keep models interpretable and tie findings back to representative quotes. Transparency wins in reviews with funders and partners.

How can we reduce bias in selection and mentoring?

Segment by geography, sector, and founder background; track response and selection rates to spot gaps. Run inter-rater checks on narrative coding and reviewer scoring. Use standardized prompts and rubrics, then audit a sample of decisions with the underlying evidence. Publish “what changed” notes to show accountability. If disparities persist, adjust outreach, mentor matching, or the instrument itself. Bias detection must be systematic, not anecdotal.

What should we keep for auditability across cohorts and years?

Versioned instruments, rubrics, and codebooks; an evidence vault with linked files; a decisions log; and a simple readme describing joins and filters. Store timestamps and stage tags for each record. Keep role-based access to protect sensitive content while allowing reviewers to trace reasoning. Archive data at cycle end to preserve comparability. Make it easy for a new analyst—or a funder—to reproduce your conclusions step by step.

How do we avoid “slideware” and show real accelerator outcomes?

Replace end-of-cohort slide sprints with live cohort reports that update as evidence arrives. Tie each metric or claim to a source document or quote. Show changes triggered by feedback and track their effect on measurable outcomes. Keep comparisons stage-aligned and versioned. Share concise, role-based dashboards: founders see progress, mentors see touchpoints, leaders see portfolio outcomes. Evidence beats aesthetics every time.